Meta is gearing up to join the AI chips race

- Ultimately, Meta wants to break free from Nvidia’s AI chips while challenging other tech giants making their own silicon.

- Meta expects to spend an additional US$9 billion on AI this year, on top of its US$30 billion annual investment.

- Will Artemis mark a decisive break from Nvidia, after Meta hoards H100 chips?

A whirlwind of generative AI innovation in the past year alone has exposed major tech companies’ profound reliance on Nvidia. Crafting chatbots and other AI products has become an intricate dance with specialized chips largely made by Nvidia in the preceding years. Pouring billions of dollars into Nvidia’s systems, the tech behemoths have found themselves straining against the chipmaker’s inability to keep pace with the soaring demand. Faced with this problem, industry titans like Amazon, Google, Meta, and Microsoft are trying to seize control of their fate by forging their own AI chips.

After all, in-house chips would enable the giants to steer the course of their own destiny, slashing costs, eradicating chip shortages, and envisioning a future where they offer these cutting-edge chips to businesses tethered to their cloud services, creating their own silicon fiefdoms rather than being entirely dependent on the likes of Nvidia (and potentially AMD and Intel).

The most recent tech giant to announce plans to go solo is Meta, which is rumored to be developing a new AI chip, “Artemis,” set for release later this year.

The chip, designed to complement the extensive array of Nvidia H100 chips recently acquired by Meta, aligns with the company’s strategic focus on inference, the crucial decision-making facet of AI. While similar to the MTIA chip announced last year, Artemis seems to emphasize inference over training AI models.

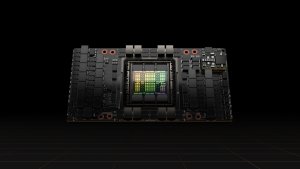

H100 Tensor Core GPU. Source: Nvidia.

However, it is worth noting that Meta is entering the AI chip arena at a point when competition has gained momentum. It started with a significant move last July, when Meta disrupted the competition for advanced AI by unveiling Llama 2, a model akin to the one driving ChatGPT.

Then, last month, Zuckerberg introduced his vision for artificial general intelligence (AGI) in an Instagram Reels video. In the previous earnings call, Zuckerberg also emphasized Meta’s substantial investment in AI, declaring it as the primary focus for 2024.

2024: the year of custom AI chips by Meta?

In its quest to empower generative AI products across platforms like Facebook, Instagram, WhatsApp, and hardware devices like Ray-Ban smart glasses, the world’s largest social media company is racing to enhance its computing capacity. Therefore, Meta is investing billions to build specialized chip arsenals and adapt data centers.

Last Thursday, Reuters got hold of an internal company document that states that the parent company of Facebook intends to roll out an updated version of its custom chip into its data centers this year. The latest iteration of the custom chip, codenamed ‘Artemis,’ is designed to bolster the company’s AI initiatives and might lessen its dependence on Nvidia chips, which presently hold a dominant position in the market.

Mark Zuckerberg, CEO of Meta, testifies before the Senate Judiciary Committee on January 31, 2024 in Washington, DC. (Photo by Anna Moneymaker/GETTY IMAGES NORTH AMERICA/Getty Images via AFP).

If successfully deployed at Meta’s massive scale, an in-house semiconductor could trim annual energy costs by hundreds of millions of dollars, and slash billions in chip procurement expenses, suggests Dylan Patel, founder of silicon research group SemiAnalysis. The deployment of Meta’s chip would also mark a positive shift for its in-house AI silicon project.

In 2022, executives abandoned the initial chip version, choosing instead to invest billions in Nvidia’s GPUs, which dominate AI training. The upside of that strategy is that Meta is poised to accumulate many coveted semiconductors. Mark Zuckerberg revealed to The Verge that by the close of 2024, the tech giant will possess over 340,000 Nvidia H100 GPUs, the primary chips companies use for training and deploying AI models like ChatGPT.

Additionally, Zuckerberg anticipates Meta’s collection to reach 600,000 GPUs by the year’s end, encompassing Nvidia’s A100s and other AI chips. Like its predecessor, Meta’s new AI chip is built for inference, in which models use their algorithms to make ranking judgments and respond to user prompts. Last year, Reuters reported that Meta is also working on a more ambitious chip that, like GPUs, could perform both training and inference.

Zuckerberg also detailed Meta’s strategy to vie with Alphabet and Microsoft in the high-stakes AI race. Meta aims to capitalize on its extensive walled garden of data, pointing to the abundance of publicly shared images and videos on its platforms, which distinguishes it from competitors relying on web-crawled data. Beyond existing generative AI, Zuckerberg envisions achieving “general intelligence,” aspiring to develop top-tier AI products, including a world-class assistant for enhanced productivity.