Tiny VLMs bring AI text plus image vision to the edge

Large language models capable of providing statistically likely answers to written text prompts are transforming knowledge work. AI algorithms enable tasks to be performed faster and reduce the need for specialist skills, which can be unnerving for highly trained and experienced staff to witness. But there’s magic to how neural networks can level the playing field and help users to understand their world. And a great example of this is the rise of vision language models (VLMs), which place image features in the same multi-dimensional embedding space as words.

So-called multimodal AI – neural networks capable of generating results based on multiple input types such as text and images – adds to the number of ways that deep learning can be used. OpenAI’s GPT-4 with Vision will answer questions about images – for example, if the user submits a photo or web link. Given a picture of the contents of a fridge, it can suggest ideas for dinner.

You can picture VLMs as image recognition on steroids. Multimodal AI models can do much more than just recognize features in a scene. Algorithms can gather insights from security footage, prompt maintenance crews to take action, analyse customer behaviour, and much more besides (as our test results show – scroll down to see the responses).

VLMs can provide a second pair of eyes that never get tired. However, these superpowers come at a cost and historically have required heavy lifting to happen behind the scenes in the cloud. Generative AI algorithms have gorged themselves on the contents of the internet in the name of tuning their model weights.

But not all of those parameters are created equal, and developers are finding ways to trim AI models down to a more memory-efficient size for specific tasks. It means that algorithms can run locally at the edge and suit fully remote use cases, such as drone inspections, where connectivity cannot be guaranteed.

Releasing moondream2 – a small, open-source, vision language model designed to run efficiently on edge devices. Clocking in at 1.8B parameters, moondream requires less than 5GB of memory to run in 16 bit precision. pic.twitter.com/ySfmK4mJbN

— vik (@vikhyatk) March 4, 2024

One of a new breed of tiny VLMs capable of running at the edge is Moondream, which is advertised as being able to run anywhere, even on mobile devices and Raspberry Pis. The code to run the tiny AI text and vision model is available on GitHub, and there’s even a live demo environment so that you can jump straight in and put the VLM to the test.
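The memory figure quoted in the launch announcement can be sanity-checked with some simple arithmetic. The Python sketch below estimates the space needed just to hold the weights of a 1.8B-parameter model at different precisions. The function name is our own, and the 32-bit, 8-bit and 4-bit rows are illustrative assumptions rather than figures from the announcement. Activations and other runtime overhead come on top, which is consistent with the quoted "less than 5GB" at 16-bit precision.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed to hold the model weights, in GB (10^9 bytes)."""
    bytes_per_param = bits_per_param / 8
    return num_params * bytes_per_param / 1e9


# Parameter count quoted in the moondream2 announcement.
PARAMS = 1.8e9

# Only the 16-bit case is mentioned in the source; the others are for comparison.
for bits in (32, 16, 8, 4):
    print(f"{bits:>2}-bit weights: {weight_memory_gb(PARAMS, bits):.1f} GB")
```

At 16 bits (two bytes) per parameter, the weights alone come to roughly 3.6GB, leaving headroom under the 5GB mark for everything else the model needs at run time.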

Tiny VLM test results

To discover what VLMs can do and highlight potential use cases for local AI models capable of answering questions about images, TechHQ submitted a variety of photos to the Moondream2 live demo hosted on Hugging Face.

Image 1

Harbour scene. Photo: JT.

Input: Is it safe to walk straight ahead?

Response: No, it is not safe to walk straight ahead. There is a dock with a red life ring attached to it, and a few boats are visible in the water. You need to be cautious and look out for any obstacles or hazards such as the dock, boats, or other watercraft, before walking forward.

Image 2

Stairway. Photo: JT.

Input: Is there something for me to hold on to?

Response: Yes, there is a railing on the stairs, and you can hold on to it while climbing to the top. The railing is attached to the wall, providing support for those ascending or descending the steps.

Input: Can you see an open window?

Response: Yes, there is an open window on the building in the background, and the sun is shining brightly illuminating the scene.

Image 3

Pedestrian crossing. Photo: JT.

Input: Is it safe for me to use the pedestrian crossing?

Response: No, it is not safe for you to use the pedestrian crossing, as the image shows a black car approaching the crossing. The car is likely not paying attention to the red light, which indicates that it’s not safe for pedestrians to cross at that moment.

Image 4

Road signs. Photo: JT.

Input: Should I go left or right to get to the airport?

Response: Right.

Input: Which way to the marina?

Response: Left.

Image 5

Bus stop. Photo: JT.

Input: Is there anywhere to catch a bus?

Response: Yes, there is a bus stop in the image, with benches for passengers to sit on and a covered area. A traffic cone is also present, and a bridge can be seen in the background.

Input: Where can I shelter if it rains?

Response: You can shelter at the bus stop if it rains, as there is a bus shelter nearby with benches and a roof providing protection from the elements.

Image 6

Luxury yacht. Photo: JT.

Input: What can you see?

Response: A helicopter is positioned on a ship, with a metal fence in the foreground. The background features a variety of elements, including buildings, trees, a road, a water body, and the sky.

Image 7

Coffee shop. Photo: JT.

Input: Is there anywhere to get a warm drink around here?

Response: Yes, there is a coffee shop nearby. The image shows a red building with the words “New Cut Coffee” on it, and a black fence. A black car is parked in the lot, and a white building is visible in the background. The sky is blue overhead.

Input: Does the coffee shop sell sandwiches?

Response: Yes.

Input: What else is there to eat at the coffee shop?

Response: In addition to coffee, the New Cut Coffee shop offers sandwiches and pastries. The image also features a building and a vehicle in the background.

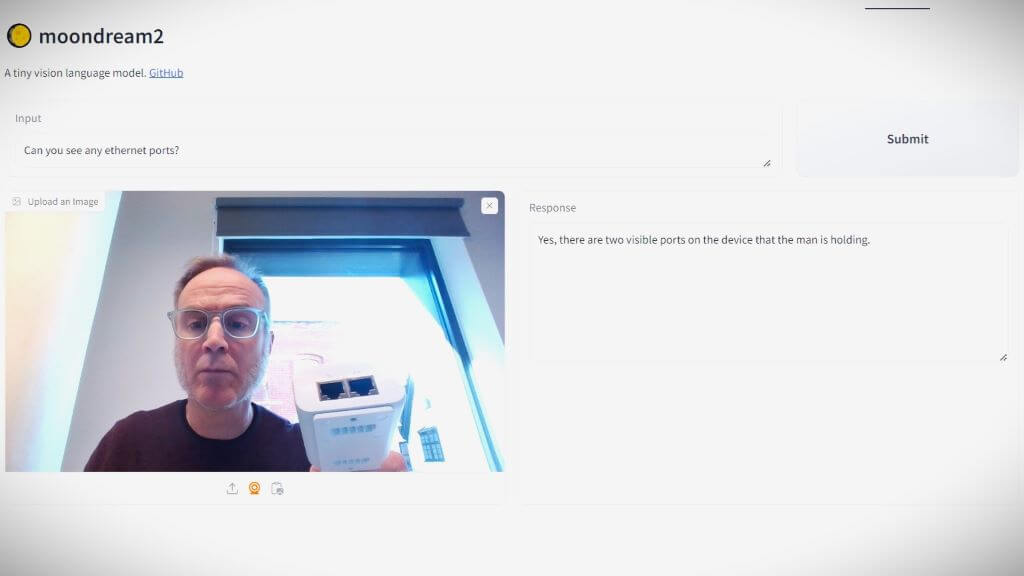

Image 8

Webcam mode: the tiny VLM live demo can accept images via a connected webcam if browser permissions are given. Screengrab: JT.

Input: Can you see any ethernet ports?

Response: Yes, there are two visible ports on the device that the man is holding.
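TechHQ entered the questions above by hand in the live demo, but a similar test sequence could be scripted against a locally running model. The Python sketch below is a minimal harness under stated assumptions: `ask` stands in for whatever function wraps the VLM (its signature is hypothetical and not Moondream's actual API), and the image file names are placeholders.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical wrapper signature: (image_path, question) -> answer text.
AskFn = Callable[[str, str], str]


@dataclass
class ImageCase:
    """One test image and the questions to put to the model about it."""
    image_path: str
    questions: list[str]


def run_test_sequence(ask: AskFn, cases: list[ImageCase]) -> list[tuple[str, str, str]]:
    """Ask every question about every image; return (image, question, answer) rows."""
    results = []
    for case in cases:
        for question in case.questions:
            results.append((case.image_path, question, ask(case.image_path, question)))
    return results


def stub_ask(image_path: str, question: str) -> str:
    """Placeholder used here instead of a real model call."""
    return f"[no model attached] {question}"


# File names are placeholders; the questions are taken from the test above.
cases = [
    ImageCase("harbour.jpg", ["Is it safe to walk straight ahead?"]),
    ImageCase("road_signs.jpg", ["Should I go left or right to get to the airport?",
                                 "Which way to the marina?"]),
]

for image, question, answer in run_test_sequence(stub_ask, cases):
    print(f"{image} | {question} | {answer}")
```

Swapping `stub_ask` for a function that calls a real model would leave the rest of the harness unchanged, which makes it easy to rerun the same questions as models are updated.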

Verdict on tiny VLM testing

It’s hard not to be impressed with the performance of Moondream2 – a VLM that’s initialized with weights from SigLIP and Phi 1.5, and then trained using synthetic data generated by Mixtral.

Evaluating the responses generated from TechHQ’s test sequence of images and text inputs, it’s clear that Moondream2 gets more right than it gets wrong, and is capable of noticing fine details that weary human eyes may miss. As mentioned, the AI model’s small footprint is another winning feature – paving the way for inference to take place on edge devices that could include drones or robots.

VLMs could turn out to be incredibly useful in helping robots orientate themselves in the world, and being able to run algorithms locally eliminates the need for network access, or at least provides redundancy in the case of outages.
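The redundancy idea can be sketched in a few lines of Python. In this minimal example, which is an illustration rather than a description of any shipping product, a device tries a cloud-hosted model first and falls back to an on-device VLM if the network is unavailable. Both model functions are hypothetical stand-ins with an assumed `(image_path, question)` signature.

```python
from typing import Callable, Tuple

# Hypothetical signature shared by both models: (image_path, question) -> answer.
AskFn = Callable[[str, str], str]


def answer_with_fallback(remote: AskFn, local: AskFn,
                         image_path: str, question: str) -> Tuple[str, str]:
    """Try the remote model first; use the local model if the network fails.

    Returns (source, answer), where source is "remote" or "local".
    """
    try:
        return "remote", remote(image_path, question)
    except (ConnectionError, TimeoutError):
        # Network outage or timeout: answer on the device instead.
        return "local", local(image_path, question)


def offline_remote(image_path: str, question: str) -> str:
    """Stand-in for a cloud call made while the network is down."""
    raise ConnectionError("network unreachable")


def tiny_local_vlm(image_path: str, question: str) -> str:
    """Stand-in for an on-device VLM; a real one would inspect the image."""
    return "answer from on-device model"


print(answer_with_fallback(offline_remote, tiny_local_vlm,
                           "stairway.jpg", "Is there something for me to hold on to?"))
```

A drone or robot with no connectivity at all would simply call the local model directly, but the same wrapper lets a connected device degrade gracefully during an outage.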

A smart idea for smart glasses

Alongside its launch of the Quest 3 VR headset, Meta also announced a collaboration with Ray-Ban to produce an updated line of smart glasses. The product, which features Qualcomm’s weight-distributed Snapdragon AR platform, has some local voice control capabilities – for example, to instruct the spectacles to take images using the built-in ultra-wide 12MP camera. Users simply have to say, “Hey Meta, take a picture.”

Being able to couple those photos with a VLM running locally would turn the Ray-Ban smart glasses into a much more compelling product, and could bring scenes to life for visually impaired wearers without the need for pairing with a smartphone.

Vision assistance powered by edge-compatible VLMs could dramatically enhance the capabilities of digital camera-equipped devices. As the webcam image in our test sequence highlights, there’s the potential for algorithms to help with maintenance and repair tasks, distributing knowledge and expertise across the globe.

AI is doing for knowledge work what robotics has done for manufacturing, and it’s just the beginning.