GenAI unveilings at AWS re:Invent 2023: AI chips to chatbot


- AWS unveils new features & products at re:Invent 2023.

- Includes the Trainium2 AI training chip, the Graviton4 processor, the Amazon Q chatbot, and a preview of Titan Image Generator.

- Plus, Guardrails for Amazon Bedrock.

In a year marked by endless discussions of generative AI at tech conferences, AWS re:Invent 2023 continues the trend. The conference kicked off on November 27 and has already showcased a range of new AI tools and services.

Amazon has been actively working to dispel the notion that it lags behind in the AI race. Over the past year, following OpenAI's release of ChatGPT, major players like Google and Microsoft have raised their stakes, introducing chatbots and investing heavily in AI development. AWS CEO Adam Selipsky's keynote this year highlighted AWS's investment across the entire AI lifecycle, emphasizing high-end infrastructure, advanced virtualization, petabyte-scale networking, hyperscale clustering, and tailored tools for model development.

In a keynote lasting nearly 2.5 hours, Selipsky conveyed AWS’s focus on meeting the diverse needs of organizations engaged in building AI models.

AWS CEO Adam Selipsky during his keynote address on innovations in data, infrastructure, and AI/ML. Source: AWS livestream.

Trainium2 & Graviton4: new AWS chips for AI training and general workloads

Amazon used the re:Invent stage to introduce its latest generation of chips, including one built for model training, as demand for running generative AI on GPUs grows and Nvidia's offerings remain in short supply.

The first chip, AWS Trainium2, aims to deliver up to four times the training performance and twice the energy efficiency of its predecessor, Trainium, which was introduced in December 2020. Amazon plans to offer it in EC2 Trn2 instances, each containing 16 chips. Trn2 instances can scale to deployments of up to 100,000 chips in AWS's EC2 UltraCluster product.

The company said this provides supercomputer-class performance of up to 65 exaflops of compute. ("Exaflops" and "teraflops" measure how many floating-point operations a system can perform per second: one exaflop is a quintillion operations per second, one teraflop a trillion.)
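As a rough back-of-the-envelope check, and assuming the 65-exaflop figure describes a full 100,000-chip UltraCluster with compute spread evenly across chips (AWS did not state a per-chip figure in the material quoted here), the implied average per chip works out as:

$$
\frac{65 \times 10^{18}\ \text{FLOP/s}}{100{,}000\ \text{chips}} = \frac{65 \times 10^{18}}{10^{5}}\ \text{FLOP/s per chip} = 6.5 \times 10^{14}\ \text{FLOP/s per chip} \approx 650\ \text{teraflops per chip}
$$

This is only an implied average under that assumption; it does not reflect which numeric precision underlies AWS's 65-exaflop claim.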

AWS Graviton4 and AWS Trainium2 processors. Source: AWS

“Trainium2 chips are designed for high-performance training of models with trillions of parameters. This scale enables customers to train large language models with 300 billion parameters in weeks rather than months. The cost-effective Trn2 instances aim to accelerate advances in generative AI by delivering high-scale ML training performance,” Amazon said in a press release. “With each successive generation of chip, AWS delivers better price performance and energy efficiency, giving customers even more options—in addition to chip/instance combinations featuring the latest chips from third parties like AMD, Intel, and NVIDIA—to run virtually any application or workload on Amazon Elastic Compute Cloud (Amazon EC2).”

Amazon did not give a release date for Trainium2 instances, saying only that they will be available "sometime next year." The second chip introduced was Graviton4.

Graviton4 marks the fourth generation delivered in five years and is “the most powerful and energy-efficient chip we have ever built for a broad range of workloads,” David Brown, vice president of Compute and Networking at AWS, said. According to the press release, Graviton4 provides up to 30% better compute performance, 50% more cores, and 75% more memory bandwidth than current generation Graviton3 processors.

AWS & Nvidia deepening ties at re:Invent 2023

AWS CEO Adam Selipsky and Nvidia CEO Jensen Huang at re:Invent 2023.

AWS's strategy doesn't rely solely on selling affordable Amazon-branded products; much like its online retail marketplace, Amazon's cloud platform will also showcase premium products from other vendors, including Nvidia.

After Microsoft announced that it would bring Nvidia H200 GPUs to its Azure cloud, Amazon made a parallel announcement at re:Invent: AWS will offer access to Nvidia's latest H200 AI graphics processing units alongside its own chips, including the newly announced models.

“AWS and Nvidia have collaborated for over 13 years, beginning with the world’s first GPU cloud instance. Today, we offer the widest range of Nvidia GPU solutions for workloads including graphics, gaming, high-performance computing, machine learning, and now, generative AI,” Selipsky said. “We continue to innovate with Nvidia to make AWS the best place to run GPUs, combining next-gen Nvidia Grace Hopper Superchips with AWS’s EFA powerful networking, EC2 UltraClusters’ hyper-scale clustering, and Nitro’s advanced virtualization capabilities.”

A detailed explanation of AWS and Nvidia’s collaboration can be found in this standalone article.

Amazon Q: a chatbot for businesses

In the competitive landscape of AI assistants, Amazon has entered the fray with its own offering, Amazon Q. Developed by the company's cloud computing division, this workplace-focused chatbot is tailored for corporate use and steers clear of consumer applications. "We think Q has the potential to become a work companion for millions and millions of people in their work life," Selipsky told The New York Times.

Amazon Q is designed to assist employees with their daily tasks, from summarizing strategy documents to handling internal support tickets and addressing queries related to company policies. Positioned in the corporate chatbot arena, it will contend with counterparts like Microsoft’s Copilot, Google’s Duet AI, and OpenAI’s ChatGPT Enterprise.

Titan Image Generator and Guardrails for Bedrock

Joining the many tech giants and startups that have ventured into this domain, Amazon is introducing an image generator. Unveiled at AWS re:Invent 2023, Titan Image Generator is now available in preview on Amazon Bedrock for AWS users, Amazon said. Part of the Titan family of generative AI models, it can generate new images from text prompts or customize existing ones.

#AWS is putting the “art” back into “artificial intelligence” with the new Amazon Titan Image Generator. 🎨✨🖼️ #MachineLearning #AI

Learn more. 🔗 https://t.co/pOzi2TaPPk pic.twitter.com/NvRdCtLuiu

— Amazon Web Services (@awscloud) November 29, 2023

“[You] can use the model to easily swap out an existing [image] background to a background of a rainforest [for example],” Swami Sivasubramanian, VP for data and machine learning services at AWS, said onstage. “[And you] can use the model to seamlessly swap out backgrounds to generate lifestyle images, all while retaining the image’s main subject and creating a few more options.”

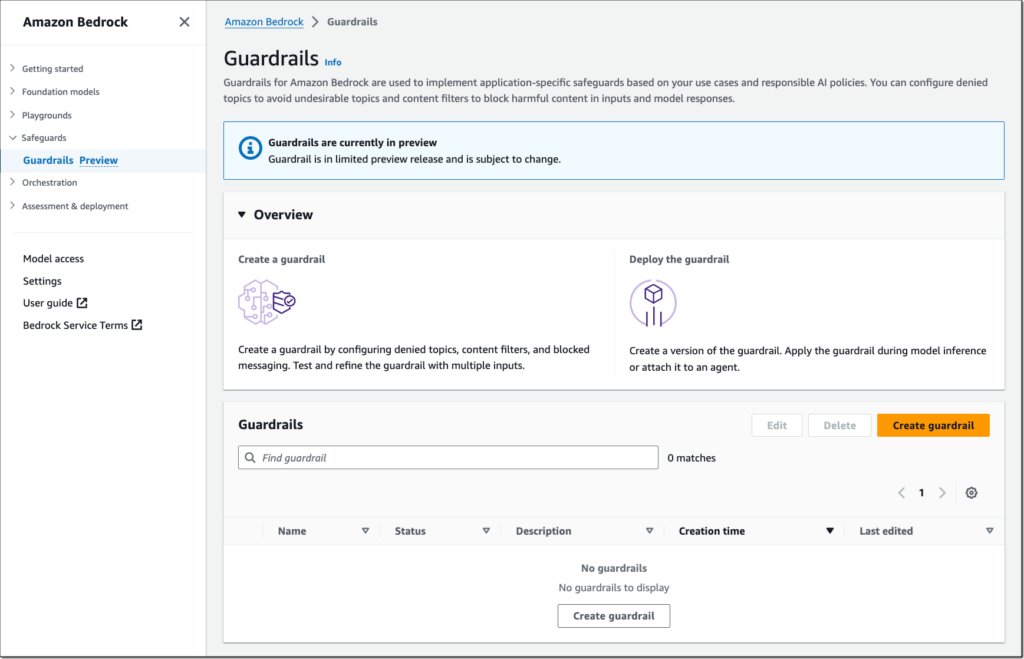

AWS also unveiled Guardrails for Amazon Bedrock, which lets customers consistently apply safeguards so that user experiences align with company policies. "These guardrails facilitate the definition of denied topics and content filters, removing undesirable content from interactions," AWS noted in a blog post.

AWS customers can now build a generative model tailored to their own domain by fine-tuning Cohere's Command model on Amazon Bedrock. Source: AWS

Guardrails can be applied to all large language models in Amazon Bedrock, including fine-tuned models, as well as to Agents for Amazon Bedrock, so that the same preferences are enforced across applications, promoting "safe innovation while managing user experiences."