The interests of humanity put in rich white hands

• OpenAI CEO Sam Altman had a wobble, but is back in charge.

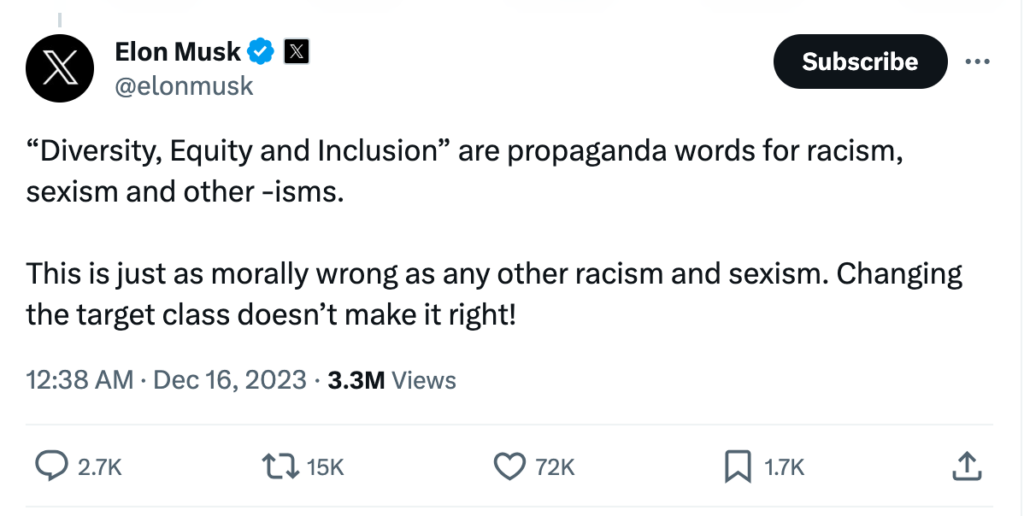

• Meanwhile, Elon Musk is demanding diversity programs die out.

• Is the cutting edge of technology safe in the hands of homogeneous white tech bros?

What do we actually know about who’s in charge of the technology that’s taking over the world?

In the year since it released the chatbot that shook up nearly every industry, OpenAI has carved out its place among the most powerful companies in the world. ChatGPT began a revolution, marking a tipping point for the AI technology that’s become so ubiquitous as to not need its acronym expanded.

Awareness of AI grew so much this year that the Cambridge Dictionary, the world’s most popular online dictionary for the English language, updated the definition of “hallucinate” to reflect its new AI-related sense: when an artificial intelligence hallucinates, it produces false information.

To top it off, “hallucinate” was named Word of the Year. The Cambridge Dictionary website attributes the “surge of interest” in AI this year to “an abundance of tools being released for public use, such as ChatGPT, Bard [and] DALL-E.”

What indeed?

When ChatGPT became available to the public, it fired the starting pistol for the AI race. Still, despite hundreds of other offerings, including some from tech giants like Google and Amazon, OpenAI, backed of course by Microsoft (perish the thought that this is a fair race for the little guys), came out on top almost every time.

The ubiquity of the new technology (new at least insofar as the public could access it for the first time) might suggest that the playing field had been levelled for startups hoping to get a foot in the door ahead of Big Tech.

However, as the MIT Technology Review puts it: there is no AI without Big Tech. Thanks to platform dominance and the self-reinforcing properties of the surveillance business model, the biggest and most powerful tech companies own and control the “ingredients” necessary to develop and deploy large-scale AI.

Many AI startups simply license and rebrand AI models sold by tech giants or their partner startups.

That fact is critical to any discussion of the future of AI. Although “hallucinate” being named Word of the Year shows how widely aware we all are of AI’s shortfalls, its potential is also widely recognized, overstated even. When looking to a future that’s inseparable from AI, examining exactly where the technology comes from is crucial.

Zooming in on OpenAI: after what CNN called a “brief corporate explosion” last month, the company recently said that, with a reconstituted board of directors, it’s back to focusing on its core mission of ensuring artificial general intelligence “benefits all of humanity.”

This year we also witnessed AI’s inherent bias, born, of course, of the biases of its predominantly Western creators. After an initial wave of racism and sexism churned out by chatbots, Big Tech outsourced the work of correcting such tendencies to the data-labelling firm Sama.

Sama handles much of Big Tech’s dirty work, and the industry’s reliance on underpaid workers was exposed. Meta’s content moderation methods, for example, came under scrutiny after the company was sued by a moderator with PTSD, but that didn’t stop OpenAI from contracting Sama for its own moderation needs.

The traumatic nature of PG-ifying GPT-3 eventually led Sama to cancel all its work for OpenAI in February 2022, eight months earlier than planned.

It seems that tasks deemed ‘below’ a tech exec’s responsibilities are sent to workers in countries with weak labour protections (although it’s heartening to know that in May, 150 workers met in Nairobi to establish the first Africa Content Moderators Union).

There’s really only one question: who is in charge of the tech that’s changing the world?

OpenAI, Sam Altman, and a corporate soap opera

A week after Sam Altman was ousted as OpenAI CEO, he was reinstated. The reasoning behind his initial firing has been kept quiet, but the company said at the time that “the board no longer has confidence in his ability to continue leading.”

After a whole bunch of corporate politics, the shakeup ultimately produced a new board made up of major names in business and technology, including former Treasury Secretary Larry Summers, Quora CEO Adam D’Angelo, and former Salesforce co-CEO Bret Taylor.

OpenAI, Sam Altman and these guys. We love this intro to the board from Hackernoon.

The board’s only women directors stepped down during the Altman saga, leaving three white men in charge. Ah, just like old times…

It’s easy to imagine the men on the OpenAI board as trendy nerds, quarter-zip sweaters undone, suit trousers crumpled over sneakers that cost more than some people’s rent. And the board’s own Larry Summers could explain his theory as to why no women made it into the room: innate differences between the sexes mean fewer women succeed in science and maths.

True, he did make that claim almost two decades ago (back in the allegedly enlightened early years of the 21st century), but the lack of diversity on the board is certainly at odds with the idea of AI benefitting everyone.

Increasingly, inside and outside the tech world, people are questioning OpenAI’s ability to carry out its goal without people from diverse backgrounds on its overseeing body. Even lawmakers in Washington are taking notice. As in politics, people are asking how far these men can be trusted to understand and enact change that benefits people across every spectrum: race, gender, sexuality and more. How far can they dial down their own privilege and serve a broader global population of tech users?

“We strongly encourage OpenAI to move expeditiously in diversifying its board,” Reps. Emanuel Cleaver (D-Mo.) and Barbara Lee (D-Calif.) wrote to Sam Altman and the board earlier this week in a letter that was obtained by CNN.

“The AI industry’s lack of diversity and representation is deeply intertwined with the problems of bias and discrimination in AI systems,” the lawmakers added.

Margaret Mitchell, the AI researcher who founded Google’s Ethical AI team (before being fired amid controversy at the company), said she doesn’t have faith that OpenAI, “as [she] currently understand[s] it, is well placed to create technology that ‘benefits all of humanity.’”

“If we’re trying to achieve technology that reflects the viewpoints of predominantly rich, white men in Silicon Valley, then we’re doing a great job at that,” Mitchell said. “But I would argue that we could do better.”

Taylor, the chair of the board, told CNN through a representative that, “Of course, Larry, Adam and I strongly believe diversity is essential as we move forward in building the OpenAI board.”

In the blog post announcing his return as CEO, Altman lays out three “immediate priorities,” one of which is “building out a board of diverse perspectives.” That, he writes, will be handled by Bret, Larry and Adam.

As at many companies in Silicon Valley, there’s a tried-and-true response to any whisper of bias: OpenAI touts an ongoing “investment in diversity, equity and inclusion.”

Has DEI already died?

Diversity, equity and inclusion is, at least in theory, what ensures equal access to the workplace. Besides the whole “creating a better, fairer world” thing, DEI makes for more productive, more profitable workplaces, and a substantial body of research backs that up.

Mr. Musk feels he hasn’t been given a fair chance…

From 2015 until 2020, this message was heard loud and clear by businesses. During that period, LinkedIn data shows the number of roles labelled “Head of Diversity” more than doubled.

Then, in the last couple of years, a shift came: as the tech industry felt the end of lockdown-fuelled surges, layoffs across (and including!) the board meant companies cut anything that wasn’t business-critical.

All too often that meant the DEI team, which has historically struggled to integrate into the business-critical fabric of a company.

That didn’t necessarily mean businesses were anti-DEI, simply that the hatches were being battened down and a bare-bones operation begun. Despite the profitability boost that DEI programs regularly bring to companies’ bottom lines, businesses could argue that killing such programs was a necessary sail-trimming exercise and that the programs would be reinstated once economic conditions improved.

Until this week, anyway.

This week, Elon Musk, never in recent years one to shy away from saying the quiet part out loud, announced that he wanted to cancel DEI, claiming it’s another type of “discrimination.” The arch-capitalist dogma of that statement makes it clear that, at least in Musk’s case, this isn’t about economics; it’s about having zero interest in equity.

On December 15th, he tweeted that “DEI must DIE,” following up with the declaration that “The point was to end discrimination, not replace it with different discrimination.”

The Arch Tech Bro speaks. Because apparently, no one can stop him.

The goal of DEI is actually to overcome systemic discrimination by making some opportunities (including training, mentorship and hiring programs) more accessible for historically marginalized groups.

That does mean excluding some white men born into extreme wealth – or, as Musk would put it, “reverse discrimination.”

Musk recently told Joe Rogan that he bought Twitter (since rebranded as X) in 2022 to stop what he calls the “mind virus” of progressivism. After he fired content moderators, reinstated the accounts of controversial public figures (most recently the bankrupt conspiracy theorist and energy drink shill Alex Jones) and posted a slew of his own opinions, X lost nearly 60% of its US advertising.

Major US companies including Apple, Disney and IBM suspended their ads on X, and the boycott could cost the company as much as $75 million in advertising revenue by the end of the year.

Although it’s a bad economic choice, Musk’s transparency about his views and what he’ll allow on his site lets companies withdraw and, perhaps more importantly, means users of the site know what it stands for.

That clarity means, at the very least, that users are prepared for the hate speech they might encounter on X and have the option to disengage from the platform entirely.

On December 6th, Sam Altman was named TIME’s CEO of the Year. The accompanying interview, which subtly references his diet and his fiancé (DEI points for being vegetarian? For his engagement to Oliver Mulherin?), lays out the “surreal” experience of being fired and rehired by OpenAI.

From what little is known about the circumstances of his firing, Sam Altman’s greatest crime appears to be putting profit before safety, which is arguably a big enough crime for the figure steering a world-altering technology.

While no one is saying that AI as it currently exists presents an existential threat to humanity, there’s a rapidly forming school of thought that believes the technology is being rushed forward for the sake of staying ahead in the economic AI race.

Apparently, Altman is affable, brilliant, uncommonly driven, and gifted at rallying investors and researchers alike around his vision of creating artificial general intelligence (AGI) for the benefit of society as a whole.

Yet, he’s also been accused of manipulating people, being dishonest and “seeking power in a really extreme way.”

Ultimately, the systems that will shape our future are owned and controlled by a small group wielding near-total power (witness the 720 OpenAI employees who threatened to follow Sam Altman to Microsoft) and, somehow, celebrity status.

We’d be inclined to lean away from discussion that centers AI as a world-dominating force with malignant potential, but as a technology that’s already entered almost every kind of business, it’s important to scrutinize those who own it. While by no means all white tech bros are as carelessly crass as Musk about their attitude to DEI, it’s worth keeping a keen eye on how generative AI evolves from here – and whether that evolution includes diversity, equity and inclusion to any degree that moves the dial.