VMware Explore: private AI is the next frontier for enterprises.

- Private AI grew out of VMware’s own needs, which the company realized were shared by other enterprises.

- With a private AI architecture, businesses can run their preferred AI models, proprietary or open-source, near their data.

- Private AI is expected to see wide adoption across the industry.

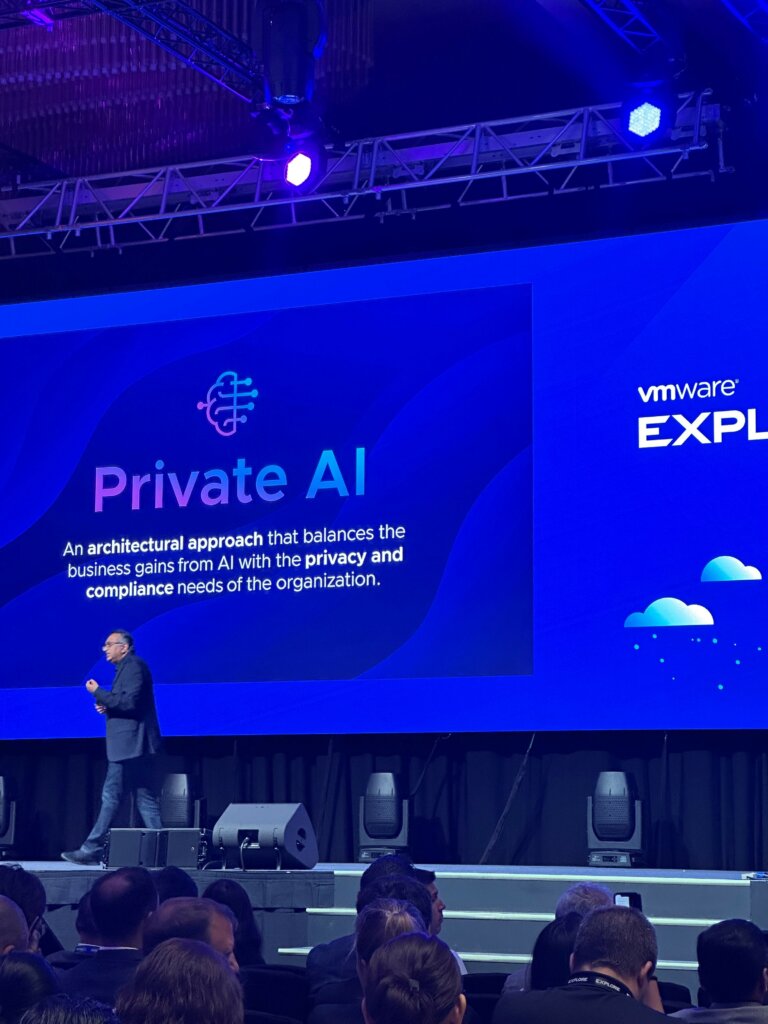

VMware Explore 2023 in Singapore concluded last week, and, as anticipated, the conversations centered mainly on AI, multi-cloud, and the edge. But while generative AI was heavily discussed, VMware was more eager to talk about private AI: an architectural approach to AI that fits within an organization’s practical privacy and compliance needs.

Simply put, VMware has found a way to bring compute capacity and AI models to where enterprise data is created, processed, and consumed, whether in a public cloud, enterprise data center, or at the edge. “When we started looking into generative AI, our lawyers had to stop us from using ChatGPT,” CEO Raghu Raghuram said in his keynote address.

“These are hard problems to solve. Because if you think about what goes into designing an AI model, each stage has significant privacy implications. So we took our team of AI researchers and created VMware AI Labs and put them to work on solving this problem,” Raghuram said, adding that most enterprises face the same issues.

VMware CEO Raghu Raghuram during his keynote at VMware Explore Singapore 2023.

“Many of the CEOs I speak with are actively asking their legal teams to dig in and collaborate with IT to define a new set of privacy standards built for the complex nuances of generative AI. It’s a complicated undertaking, to say the least. Unless you solve these privacy problems, you won’t be able to enjoy the advantages of generative AI,” he said.

In the meantime, VMware AI Labs collaborated closely with the company’s General Counsel, Amy Fliegelman Olli, and her legal team. Together, the engineers and lawyers worked through the intricacies of choosing an AI model, training it on domain-specific data, and managing the inferencing phase, in which employees interact with the model.

“That is when the company came up with an answer – private AI. It is an architectural approach, meaning it’s an approach that can be done by others as well, addressing the privacy issues while delivering exciting business cases,” Raghuram highlighted.

So, what is private AI?

Throughout the two-day Explore event in Singapore, there was a shared understanding of the problem VMware was addressing: generative AI is making the privacy challenge both more consequential and more complex. “Data is the indispensable ‘fuel’ that powers AI innovation, and the job of keeping proprietary data private and protected has intensified,” Raghuram told reporters following his keynote.

VMware’s goal from the outset was to design a new approach that balanced the tremendous business value of AI with privacy safeguards it could trust. Raghuram explained that enterprises are being asked to address three key privacy issues: “First, how do you minimize the risk of intellectual property ‘leakage’ when employees interact with AI models? Second, how do you ensure that sensitive corporate data will not be shared externally?”

According to VMware’s CEO, the third issue is how enterprises can maintain complete control over access to their AI models. Unlike public AI models, which can expose businesses to various risks, private AI is an architecture built from the ground up to give businesses greater control over how they select, train, and manage their AI models. “That level of control and transparency is precisely what every legal team is now demanding,” Raghuram emphasized.

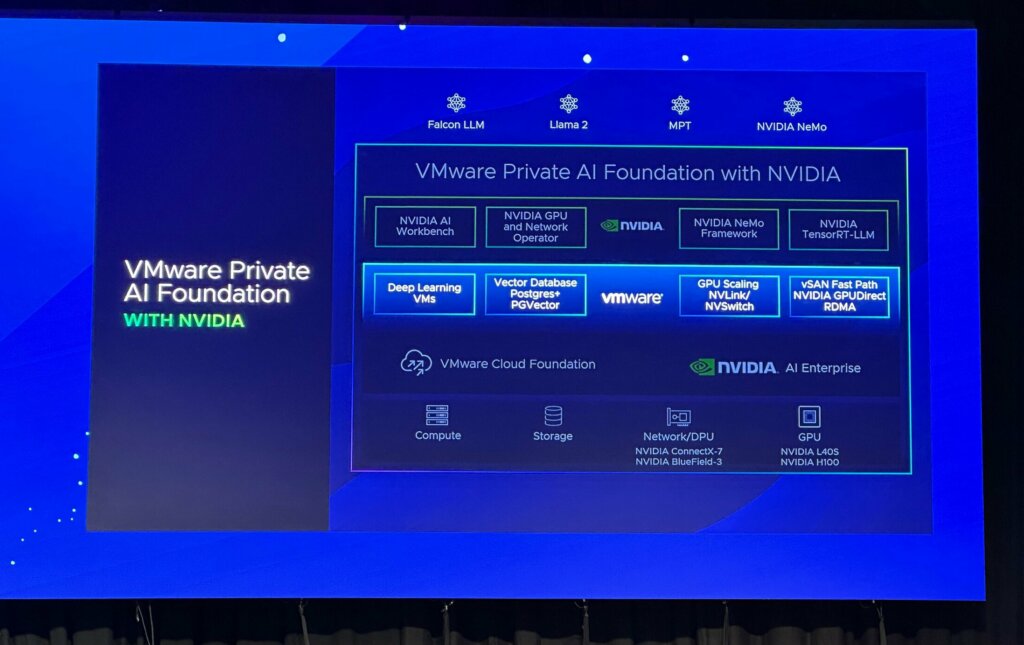

VMware Private AI Foundation with Nvidia

During the first Explore event, held in Las Vegas in August, VMware unveiled private AI, which allows businesses to build generative AI in their own data centers rather than relying on the cloud. Private AI brings AI models to the locations where data is generated, processed, and used. At the same time, VMware announced a partnership with Nvidia, the leader in AI chips.

The VMware Private AI Foundation with Nvidia is intended for release in “early 2024.” It consists of a set of integrated AI tools that will allow enterprises to run proven models trained on their private data in a cost-efficient manner. These models will be deployable in data centers, on leading public clouds, and at the edge.

The partnership will give enterprises access to proven libraries vetted by both VMware and Nvidia, help them make better use of the relatively small number of available GPUs, and protect corporate data and intellectual property when trained models are released into production.

VMware Private AI Foundation with NVIDIA extends the companies’ strategic partnership to prepare enterprises that run VMware’s cloud infrastructure for the next era of generative AI.

What does the VMware-Nvidia collaboration mean?

Beyond opening a fresh path to diversification for Nvidia, the collaboration could be highly advantageous for VMware. The partnership seeks to ensure compatibility with systems from major vendors such as Dell, Lenovo, and HPE, making it straightforward for customers to implement the solution to suit their specific needs. This could lead to widespread adoption across various industries.

Nvidia is also equipping VMware with advanced computing capabilities. During his keynote appearance at VMware Explore Las Vegas, Nvidia CEO Jensen Huang unveiled an advancement that enables VMware to get the best performance from the hardware while preserving security, manageability, and the ability to migrate resources seamlessly across GPUs and nodes.

Nvidia’s keynote at VMware Explore Las Vegas. Source: X

“GPUs are in every cloud and on-premise server everywhere. And VMware is everywhere. So, for the first time, enterprises worldwide will be able to do private AI. Private AI at scale deployed into your company is fully secure and multi-platform,” Huang said. That should, in turn, drive greater and more efficient use of Nvidia’s GPUs.

The approach could also reduce data center costs and help ease the GPU shortage during the ongoing AI gold rush. By making full use of computing resources across GPUs, DPUs, and CPUs through virtual machines, the Private AI Foundation aims to maximize resource utilization, which should translate into lower overall costs for enterprises.

Another critical advantage of the partnership is flexibility. “For any meaningful enterprise, their data lives in all types of locations. Distributed computing and multi-cloud will be at the foundation of AI; there’s no way to separate these two,” Raghuram explained.

The collaboration between VMware and Nvidia signals the emergence of on-premises generative AI, and it stands to benefit both companies as well as the broader ecosystem.