Dynatrace Perform: here’s what you missed

• Observability solutions are key to making sound tech choices in 2024.

• In particular, without observability solutions, it can be hard to gauge the success of your GenAI investments.

• In Las Vegas, Dynatrace announced its latest solutions to the problems enterprises are facing.

Last week the world went to Las Vegas – where Dynatrace held its annual conference. If you missed it, don’t worry too much: we can’t quite recreate the atmosphere of the main stage, but there were a ton of exciting announcements to share.

Dynatrace started out as just a product, but over the years it has grown into the giant it is today, making waves as an all-purpose business analysis and business intelligence platform. After a year of huge digital transformation and tectonic industry-wide shifts, it was only logical to make this year’s conference theme “Make waves.”

It’s easy to broadly gesture at the changes the past year saw, but Rick McConnell, Dynatrace CEO, summarized some megatrends that the whole industry bought into.

Rick McConnell explains why Dynatrace is different.

Cloud modernization. We’ve all adopted cloud services – kind of. Right now, the tech industry is 20% cloud-based, and the rest of the world is only 10% of the way there. Even so, cloud services at their current scale have already reduced costs and improved customer satisfaction and user experience.

Hyperscaler growth. The hyperscalers have grown by 50%, so it’s no wonder this area has hit $200bn in annual revenue.

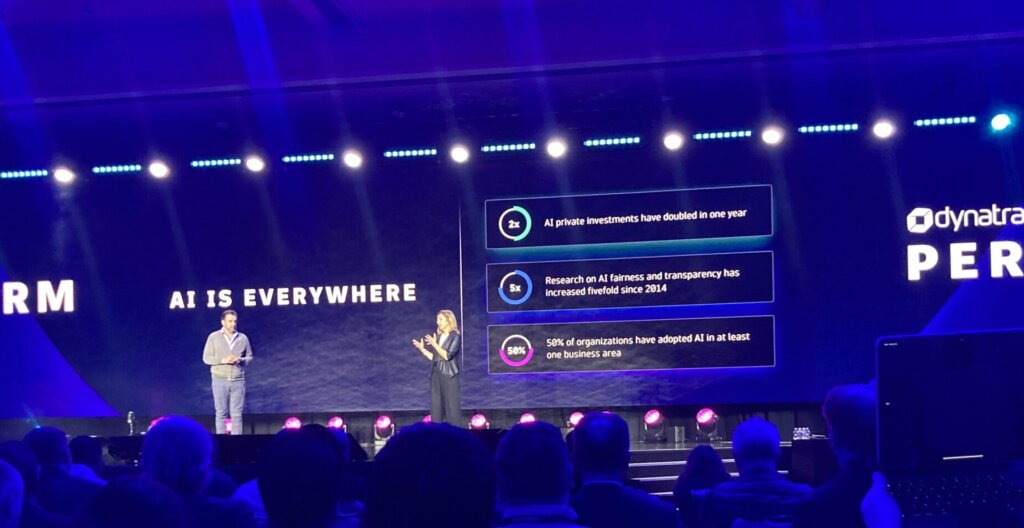

Artificial Intelligence. Yup, no surprise there. Dynatrace’s take on it is still worth hearing, though: AI became as ubiquitous as digital transformation in 2023, growing into an essential pillar of business.

Threat protection. AI is crucial to driving this, too. Threat protection is paramount in a climate born of exploding workloads and ways of accessing them: many professionals don’t realize just how vast the threat landscape their business operates on really is.

It shouldn’t need explaining, then, why cloud management is so important. Cloud should not only be closely monitored but optimized, too. And optimizing cloud cost isn’t only an economics game: the climate emergency makes environmental cost key to the bigger picture.

Bernd Greifeneder, Dynatrace CTO, admitted that environmental impact is often the concern of younger engineers. That doesn’t mean they should be left to do the green coding alone!

With stats like this, shouldn’t older engineers be worried too?

Optimized sustainability is enabled by visibility; you can’t change what you can’t measure. Observability solutions solve the visibility issue, but what else can be done?

That’s where the first big announcement comes in: Dynatrace is teaming up with Lloyds Banking Group to reduce IT carbon emissions. Using insights and feedback from Lloyds Banking Group, Dynatrace will further develop Dynatrace® Carbon Impact.

The app translates usage metrics, such as CPU, memory, disk, and network I/O, into CO2 equivalents (CO2e). That measurement is unique to Dynatrace and sets it apart from other offerings, which rely on vague calculations to produce a single number for CO2 emissions.

With Dynatrace, energy use and CO2 emissions are broken down per source, in-app filters let users narrow their focus to high-impact areas, and actionable guidance helps reduce the overall IT carbon footprint.
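The article doesn’t detail how Carbon Impact performs its conversion, so what follows is only a minimal sketch of the general idea: estimate energy from resource usage, multiply by a grid carbon-intensity factor to get CO2e, then rank sources so the high-impact ones surface first. Every coefficient here (watts per core, watts per GB of memory, grams of CO2e per kWh) is a hypothetical placeholder rather than a Dynatrace figure, and disk and network I/O are left out for brevity.

```python
from dataclasses import dataclass

# Hypothetical coefficients for illustration only; these are NOT Dynatrace's model.
WATTS_PER_CPU_CORE = 10.0         # assumed average draw per fully busy core
WATTS_PER_GB_MEMORY = 0.4         # assumed draw per GB of memory in use
GRID_INTENSITY_G_PER_KWH = 400.0  # assumed grid carbon intensity (g CO2e per kWh)


@dataclass
class HostUsage:
    name: str
    cpu_cores_used: float  # average number of busy cores over the window
    memory_gb: float       # average GB of memory in use over the window
    hours: float           # length of the measurement window in hours


def energy_kwh(u: HostUsage) -> float:
    """Estimate energy use from CPU and memory usage (watts x hours / 1000)."""
    watts = u.cpu_cores_used * WATTS_PER_CPU_CORE + u.memory_gb * WATTS_PER_GB_MEMORY
    return watts * u.hours / 1000.0


def co2e_grams(u: HostUsage) -> float:
    """Convert estimated energy into grams of CO2e using the assumed grid factor."""
    return energy_kwh(u) * GRID_INTENSITY_G_PER_KWH


# Hypothetical hosts measured over one day.
hosts = [
    HostUsage("web-1", cpu_cores_used=4, memory_gb=16, hours=24),
    HostUsage("batch-1", cpu_cores_used=16, memory_gb=64, hours=24),
]

# Rank sources by estimated emissions, highest first, to find high-impact areas.
for h in sorted(hosts, key=co2e_grams, reverse=True):
    print(f"{h.name}: {energy_kwh(h):.2f} kWh, {co2e_grams(h) / 1000:.2f} kg CO2e")
```

With these assumed numbers, batch-1 comes out on top (about 1.78 kg CO2e for the day versus roughly 0.45 kg for web-1), which is the kind of high-impact source a per-source breakdown is meant to highlight.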

Our interview with Bernd details this more closely.

Observability solutions for artificial intelligence

We all expected AI announcements to some extent. What Dynatrace revealed, though, is a market-first: observability for AI.

So far, Dynatrace has stood out from the pack with its own use of AI: the Davis Hypermodal AI model, built on a core of predictive and causal AI, has earned user trust, something the wider industry has seen eroded by the hallucinations of generative AI.

The new Davis copilot, which uses GenAI, is offered alongside the existing core, letting customers ask questions in natural language and get deep custom analysis in under 60 seconds.

Not all companies have applied AI so thoughtfully. In fact, in the rush not to be left behind, businesses have adopted AI, for lack of a better phrase, willy-nilly.

So the leader in unified observability and security has stepped in with an extension of its analytics and automation platform that provides observability and security for LLMs and GenAI-powered applications.

There’s money in AI, but businesses have no way of measuring the return on their AI applications: how can you tell whether GenAI is providing what your company needs?

Dynatrace AI Observability uses Davis AI to give organizations the overview they need to identify performance bottlenecks and root causes automatically, while giving their own customers the improved user experience that AI offers.

Given the not-so-small issue of privacy and security in AI, Dynatrace also ensures users’ compliance with regulations and governance by letting them trace the origins of their apps’ output. Finally, by monitoring token consumption, Dynatrace AI Observability lets costs be forecast and controlled.
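At its simplest, forecasting cost from token consumption is arithmetic. The sketch below is not Dynatrace’s API or method; it is a minimal, hypothetical illustration that prices observed daily token counts and extrapolates a naive 30-day figure against a budget. The per-1,000-token prices, the budget, the sample token counts, and the function names are all assumptions; real prices depend on the provider and model.

```python
# Hypothetical prices in USD per 1,000 tokens; real prices vary by provider and model.
PRICE_PER_1K_INPUT = 0.0005
PRICE_PER_1K_OUTPUT = 0.0015
MONTHLY_BUDGET = 50.0  # hypothetical budget in USD


def daily_cost(input_tokens: int, output_tokens: int) -> float:
    """Price one day of LLM usage from its input and output token counts."""
    return (input_tokens / 1000) * PRICE_PER_1K_INPUT + (output_tokens / 1000) * PRICE_PER_1K_OUTPUT


def forecast_cost(daily_costs: list[float], days: int = 30) -> float:
    """Naive forecast: average observed daily cost, extrapolated over `days`."""
    if not daily_costs:
        raise ValueError("need at least one day of observations")
    return sum(daily_costs) / len(daily_costs) * days


# Hypothetical observations: (input tokens, output tokens) per day.
observed = [(2_000_000, 500_000), (2_400_000, 600_000), (2_800_000, 700_000)]
costs = [daily_cost(i, o) for i, o in observed]

forecast = forecast_cost(costs)
print(f"forecast: ${forecast:.2f} over 30 days")
if forecast > MONTHLY_BUDGET:
    print("warning: forecast exceeds budget")
```

Averaging hides growth: in this made-up data, usage climbs every day, so a trend-based forecast (a least-squares line, say) would come out higher than the flat average. The simple version is only meant to show where token counts feed into cost control.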

Organizations can’t afford to ignore the potential of generative AI. Without comprehensive AI observability solutions, though, how can their generative AI investments succeed? How else would they avoid the risk of unpredictable behaviors, AI “hallucinations,” and bad user experiences?

If you’re skeptical about accepting this truth from the company selling the product, take it from Eve Psalti from Microsoft AI, who gave her own advice on adopting the new technology: start small! Applications need to be iterated on and optimized, and LLMs are, by definition, large. It’s literally the first L of the acronym. Observability, then, is the answer.

Achieving observability with Dynatrace

Complete observability means any question should be answerable near instantly, and scale should be adoptable in an easy, frictionless way. In the business of trust, Dynatrace provides low-touch, automated responses.

Today’s complex environments and the struggles of data ingest, such as maintaining consistency and security while keeping costs down, have a solution: Dynatrace OpenPipeline.

The third announcement, saved for day two of proceedings, aims to be a data pump for up to 1,000 terabytes (a petabyte) of data a day. The new core technology gives customers a single pipeline (hence the name, funnily enough) to manage petabyte-scale data ingestion into the Dynatrace platform.
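To put that ceiling in perspective, here is a quick back-of-the-envelope conversion. It assumes decimal units (1 TB = 10^12 bytes) and a perfectly even flow across the 86,400 seconds in a day; real ingest is far burstier, so peak rates would be higher.

$$
\frac{1000\ \text{TB/day}}{86\,400\ \text{s/day}} = \frac{10^{15}\ \text{B}}{8.64 \times 10^{4}\ \text{s}} \approx 1.16 \times 10^{10}\ \text{B/s} \approx 11.6\ \text{GB/s} \approx 92.6\ \text{Gbit/s}
$$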

Hey, you, get onto my cloud… Things the Rolling Stones never knew.

Gartner studies show that modern workloads are generating increasing volumes of telemetry, from a variety of sources. “The cost and complexity associated with managing this data can be more than $10 million per year in large enterprises.”

The list of capabilities is extensive: petabyte-scale data analytics; unified data ingest; real-time data analytics on ingest; full data context; controls for privacy and security; and cost-effective data management.

One particularly exciting offering, though, is the ease of data deletion. Few appreciate how difficult complete data deletion is, but with Dynatrace they may never have to learn. Data disappears at a click.

All external data that goes onto the Dynatrace platform is also vetted for quality, so observability comes with the assurance that the underlying data can be trusted.

Customers can vouch for Dynatrace solutions

Don’t just take it from us, though. Throughout the event – peppered, by the way, with Vegas vibez – we heard from Dynatrace customers to get an idea of how companies can benefit from the various packages on offer.

One such client is Village Roadshow Entertainment – the name might ring a bell for movie lovers. The Australia-based company had been using disparate tools and was “plagued” by background issues. Unable to identify what was causing system implosions, it relied on a ‘switch it off and on again’ approach, which meant almost weekly IT catastrophes.

Luckily, by the time the Barbenheimer flashpoint hit cinemas globally, Village Roadshow had Dynatrace’s help. The platform helped truncate unnecessary data, speeding up processes and allowing things to run smoothly – for cinema staff and customers – on the biggest day for the industry in years.

Just as importantly, onboarding with Dynatrace was smooth and didn’t require too much upskilling – a side of IT that is critical to businesses, but not acknowledged by many departments.

The Grail unified storage solution at Dynatrace’s core provides not just a way to visualize data but a way to make data exploration accessible to everyone, regardless of skill level and work style.

With templates enabling users to build an observability dashboard that makes sense to them, Dynatrace’s new interactive user interface coupled with Davis AI means deep analysis is available even to novices.

Segmentation allows data to be split into manageable chunks and organized contextually. Decision-making is thus accelerated, and the enhancements offered by Dynatrace speed up onboarding.

We were also lucky enough to speak to Alex Hibbitt of albelli Photobox, another Dynatrace client – he was awarded Advocate of the Year on the final day of the conference.

Five years ago, when he joined Photobox, the company was using a cloud platform that was primarily lift-and-shift. Now, Alex says, the company is on the road to building a truly cloud-native ecommerce platform to power what Photobox does.

“As an organization who sells people’s memories… the customer journey, the customer experience is really, really important to us, [and] drives fundamentals of how we make money.”

Before Dynatrace, huge amounts of legacy technology, combined with efforts to go cloud native, had created a behemoth that only a few engineers, Alex among them, had the context to understand.

For the sake of his sanity, something had to change: Alex “couldn’t be on call 24/7 all the time – it was just exhausting.”

What Photobox needed was a technology partner that could cover the old and the new but, beyond the traditional monitoring paradigm, provide something truly democratized and take some of the strain off engineers.

We’ve already covered Dynatrace’s observability solutions, both newly announced and established, so it should be clear why they were the answer to Photobox’s issues and why Alex advocates for Dynatrace!

More coverage of Dynatrace Perform is on its way: come back for interviews with Bernd Greifeneder and Stefan Greifeneder.