Could optical neural networks be the future of inference?


Light has revolutionized global telecommunications, and its fast-propagating signals could benefit AI operations too. Progress in areas such as silicon photonics, which makes it possible to perform mathematical operations using light, could help lower the energy consumed when querying large language models (LLMs). In fact, given their capacity to perform matrix-vector multiplication (applying model weights to inputs) more efficiently than conventional GPUs, optical neural networks could turn out to be the future of inference.
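As a concrete illustration, here is a minimal pure-Python sketch of matrix-vector multiplication, y = Wx, in which each output is a weighted sum of the inputs. The weight and input values are arbitrary, and `matvec` is a hypothetical helper for this example, not part of any vendor's API.

```python
# Minimal sketch of the operation optical neural networks aim to accelerate:
# a layer's weight matrix W applied to an input vector x, giving y = W x.
# The numbers are arbitrary illustrative values, not real model weights.

def matvec(weights, x):
    """Return the matrix-vector product W x as a list of floats."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in weights]

W = [
    [0.5, -1.0, 2.0],   # weights feeding output 0
    [1.5, 0.0, -0.5],   # weights feeding output 1
]
x = [1.0, 2.0, 3.0]     # input vector

y = matvec(W, x)
print(y)  # [4.5, 0.0]
```

Each output needs one multiply and one add per weight, so this 2 × 3 matrix costs six multiply-accumulates. That count grows with the size of every layer, which is where an optical implementation aims to save energy.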

AI inference at light speed

Nicholas Harris, CEO of Lightmatter (a firm taking optical computing to market), claimed in an interview with tech YouTuber John Koetsier that the company's light-based general-purpose AI accelerator can deliver around eight times the throughput of an NVIDIA server blade while using a fifth of the power. Taken at face value, that works out to roughly 40 times the throughput per watt.

Lightmatter’s design runs on a single color of light, but Harris points out that – in principle – it’s possible to use multiple colors and feed the chips with multiple inputs at the same time, each one encoded with a different portion of the spectrum. In his view, the upper limit could be as high as 64 colors, which would dramatically increase the throughput and energy efficiency achievable using optical neural networks.
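Feeding several colors through the same chip can be pictured, in an idealized way, as reusing one weight matrix for several input vectors at once. The sketch below assumes perfect isolation between colors (no crosstalk, loss or noise); the helper names and color labels are hypothetical, and this is not a description of Lightmatter's hardware.

```python
# Idealized sketch of wavelength multiplexing in an optical matrix unit.
# Assumption: every color passes through the same programmed weights with no
# crosstalk, loss or noise, so one pass computes W x_k for each color k.
# Helper names and color labels are hypothetical.

def matvec(weights, x):
    """Matrix-vector product W x."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

def multiplexed_pass(weights, inputs_by_color):
    """Apply the same weights to one input vector per color in a single pass."""
    return {color: matvec(weights, x) for color, x in inputs_by_color.items()}

W = [[1.0, 2.0], [0.0, -1.0]]
inputs = {
    "color_0": [1.0, 0.0],
    "color_1": [0.0, 1.0],
    "color_2": [2.0, 3.0],
}

outputs = multiplexed_pass(W, inputs)
for color, y in outputs.items():
    print(color, y)
```

In a digital simulation the colors are just a loop, but on an ideal multi-color chip they would share one pass, so the number of matrix-vector products per pass would scale with the number of colors, up to the 64 that Harris mentions.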

Lightmatter is pitching its range of products (the first of which could be based on adding high-speed, low-power optical interconnects to silicon chip designs) at suppliers of data center infrastructure. However, optical neural networks capable of low-energy inference could benefit portable devices too, where low power consumption means longer battery life.

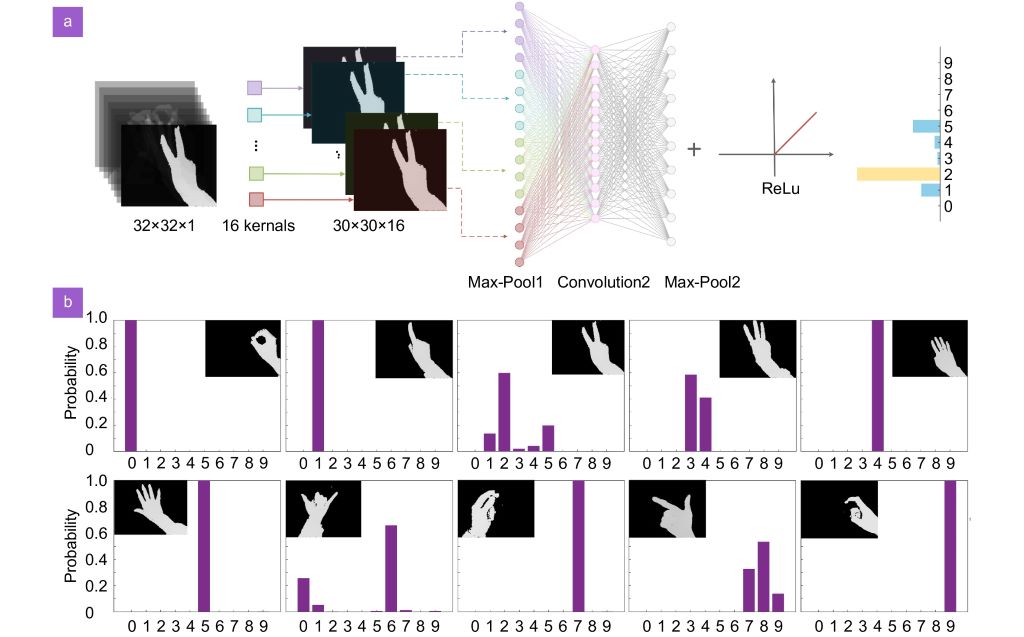

Researchers in China have built an integrated photonic convolution acceleration core for wearable devices, publishing their work in the journal Opto-Electronic Science, to highlight the feasibility of using light rather than electrons to drive AI algorithms. Their prototype chip measures just 2.6 x 2.0 mm and is capable of recognizing 10 different types of hand gestures, which could be used to control wearable electronics remotely.

Output from a prototype photonic chip created by Baiheng Zhao, Junwei Cheng, Bo Wu, Dingshan Gao, Hailong Zhou, and Jianji Dong. Image: Opto-Electronic Science.

Because optical neural networks are fast, they can generate predictions quickly, for example to recognize road signs or other roadside features. Performing AI model inference with light could therefore benefit advanced driver assistance systems (ADAS), which have to react quickly to avoid hazards. A faster reaction time shortens the distance a vehicle covers before it starts braking, leaving more of the available distance for stopping.
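The link between reaction time and braking is simple arithmetic: during any delay, the vehicle keeps moving at its current speed. This sketch assumes a constant 108 km/h (30 m/s) and treats the listed latencies as hypothetical total delays; in a real ADAS, sensing and actuation add to the delay, not just inference.

```python
# Back-of-the-envelope sketch: distance covered before braking starts.
# Assumptions (illustrative, not from the article): constant speed, and the
# latency values stand in for the whole perception-to-braking delay.

def reaction_distance_m(speed_kmh, latency_s):
    """Distance travelled (metres) at constant speed during a delay."""
    speed_ms = speed_kmh / 3.6  # convert km/h to m/s
    return speed_ms * latency_s

for latency_ms in (100, 50, 10):
    d = reaction_distance_m(108, latency_ms / 1000)
    print(f"{latency_ms:>3} ms delay at 108 km/h -> {d:.1f} m before braking")
```

At 30 m/s, every 10 ms shaved off the delay saves about 0.3 m of travel before the brakes engage.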

Things become more interesting still when you picture how optical neural networks work and how they integrate model weights, for example, weights capable of differentiating between a dog and a cat.


Optical neural networks have been considered for some time, and the energy demands of LLMs could provide the impetus needed to bring designs to market.

To train a deep neural network, you feed the input layer with training data and propagate those values forward through the so-called hidden layers to the output layer. Initially, the model weights are random, so the chance that the output layer's prediction matches the training label is slim.

Backpropagation then works backward from the error, measured by a loss function, to work out how each weight should change. After many cycles of running the model forward and backward, predictions and labels start to match more frequently.
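The forward-then-backward cycle can be sketched in a few lines. This is a minimal illustration assuming a deliberately tiny network (one hidden unit, no activation function) so the chain rule stays visible; the task of learning y = 3x, the learning rate and the epoch count are illustrative choices, not anything from the article.

```python
import random

# Tiny "network": input x -> hidden h = w1 * x -> output y_hat = w2 * h.
# Loss per example: L = 0.5 * (y_hat - y) ** 2, averaged over the data.

random.seed(0)
w1 = random.uniform(0.1, 1.0)   # weights start out random
w2 = random.uniform(0.1, 1.0)
data = [(x, 3.0 * x) for x in (-1.0, -0.5, 0.5, 1.0)]  # (input, label) pairs
lr = 0.05                       # learning rate

def mean_squared_error(w1, w2):
    """Average squared gap between predictions and labels (for reporting)."""
    return sum((w2 * w1 * x - y) ** 2 for x, y in data) / len(data)

print("loss before:", round(mean_squared_error(w1, w2), 4))

for _ in range(500):
    grad_w1 = grad_w2 = 0.0
    for x, y in data:
        h = w1 * x                # forward pass through the hidden layer
        y_hat = w2 * h            # forward pass to the output layer
        err = y_hat - y           # dL/dy_hat
        grad_w2 += err * h        # backward pass: dL/dw2 = err * h
        grad_w1 += err * w2 * x   # chain rule: dL/dw1 = err * w2 * x
    w1 -= lr * grad_w1 / len(data)  # step each weight against its gradient
    w2 -= lr * grad_w2 / len(data)

print("loss after:", mean_squared_error(w1, w2))
print("learned w1 * w2 =", round(w1 * w2, 4))  # should approach 3.0
```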

This kind of iterative adjustment is difficult to perform on an optical circuit, so developers get around it by simulating their networks digitally on conventional silicon processors. Once the weights have been established and no longer need to change, they can be written into the optical chip.
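The "train digitally, then freeze" workflow can be sketched under a loudly hypothetical assumption: that the chip can hold each weight only at one of 16 evenly spaced settings, so the trained floating-point weights are rounded once and then left fixed. The article doesn't say how weights are encoded; the level count, range, weight values and helper names are made up for illustration.

```python
# Hypothetical device model: each weight is stored as one of 2**bits evenly
# spaced settings in [-w_max, w_max]. Not taken from the article.

def quantize(w, bits=4, w_max=2.0):
    """Round w to the nearest of 2**bits levels spanning [-w_max, w_max]."""
    levels = 2 ** bits - 1
    step = 2 * w_max / levels
    w = max(-w_max, min(w_max, w))             # clip to the device range
    return -w_max + round((w + w_max) / step) * step

def matvec(weights, x):
    """Matrix-vector product W x."""
    return [sum(w * v for w, v in zip(row, x)) for row in weights]

trained = [[0.73, -1.21], [1.94, 0.08]]       # illustrative "trained" weights
frozen = [[quantize(w) for w in row] for row in trained]  # written once, then fixed

x = [1.0, 0.5]
print("digital model:", matvec(trained, x))
print("frozen on chip:", matvec(frozen, x))
```

Comparing the two outputs gives a rough sense of how much accuracy a fixed, limited-precision encoding might cost for a given network.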

Speaking at a TEDx event in a talk titled "Instagram Filters for Robots & Optical Neural Networks", Julie Chang (then a PhD student at Stanford's Computational Imaging Lab, now an Imaging and Computer Vision Research Scientist at Apple) pointed out that each layer within a deep neural network can be likened to a filter.

“Information travels through these connected layers and filters are applied on top of each other until finally the network reaches a decision at a high level,” she told the audience gathered in Boston, US.

During the training process described above, the many-layered neural network is trying to work out, by readjusting its weights, the optimum filters for identifying all of the features of interest correctly. When it succeeds, irrelevant information is rejected, while useful signals are amplified relative to the noise.
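A trained network learns its own filters; the sketch below hand-picks one instead, purely for illustration, to show what rejecting irrelevant information while amplifying a useful feature looks like in one dimension. `filter_1d` and the pixel values are hypothetical. Like most convolutional-network libraries, it slides the kernel without flipping it (cross-correlation).

```python
# A hand-picked edge filter: zero response on flat regions (rejected),
# strong response where brightness changes (the feature is kept).

def filter_1d(signal, kernel):
    """Slide the kernel along the signal ('valid' cross-correlation)."""
    k = len(kernel)
    return [
        sum(kernel[j] * signal[i + j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

row_of_pixels = [0, 0, 0, 0, 9, 9, 9, 9]   # a dark-to-bright edge
edge_kernel = [-1, 0, 1]                    # responds to rising brightness

print(filter_1d(row_of_pixels, edge_kernel))  # [0, 0, 9, 9, 0, 0]
```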

Conventionally, running inference (using a trained model to generate a prediction from an input) involves performing a series of matrix multiplications. And because some models have billions of parameters, the energy required to do this can be significant.
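To give a sense of scale, the sketch below counts multiply-accumulate operations (MACs). It relies on a common rough rule of thumb, about one MAC per parameter per generated token for a dense model, which ignores the extra work of attention over long contexts; the 7-billion-parameter model and 1,000-token response are hypothetical.

```python
# Rough operation counts for inference. Rule of thumb (approximate): a dense
# model performs about one multiply-accumulate per parameter per token.

def dense_layer_macs(n_inputs, n_outputs):
    """One MAC per entry of the layer's weight matrix."""
    return n_inputs * n_outputs

print(dense_layer_macs(4096, 4096))   # 16777216 MACs for one 4096 x 4096 layer

params = 7e9      # hypothetical 7-billion-parameter model
tokens = 1_000    # hypothetical response length
print(f"{params * tokens:.1e} MACs")  # 7.0e+12 MACs
```

Multiplying a count like this by the energy each MAC costs on a given chip is what turns parameter counts into an energy bill, which is the quantity optical hardware hopes to shrink.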

Now imagine being able to do this with light, as if you were placing a filter over a camera lens. You point the camera at the scene and the filter, which has been optically encoded with the model weights, recognizes objects within its view. It's a simplification, but the analogy shows how fast and efficient things can be when you swap electrons for photons.
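The camera-filter analogy has a literal counterpart in Fourier optics, offered here as background since the article doesn't name a specific mechanism: a lens can form the Fourier transform of a coherent light field at its focal plane, and a mask placed there multiplies that spectrum. By the convolution theorem, multiplying spectra is equivalent to convolving the image with a filter, the core operation of a convolutional layer. The sketch checks that equivalence numerically with a plain-Python DFT; the signal and blur kernel are illustrative, and it models no real optical hardware.

```python
import cmath

# Convolution theorem check: filtering by multiplying spectra (what a mask in
# a lens's Fourier plane does) equals circular convolution in the "image".

def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(X):
    n = len(X)
    return [sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n
            for t in range(n)]

def circular_convolve(x, h):
    n = len(x)
    return [sum(x[m] * h[(t - m) % n] for m in range(n)) for t in range(n)]

scene = [0.0, 1.0, 3.0, 1.0, 0.0, 0.0, 2.0, 0.0]     # illustrative 1D "image"
kernel = [0.5, 0.25, 0.0, 0.0, 0.0, 0.0, 0.0, 0.25]  # illustrative blur filter

direct = circular_convolve(scene, kernel)
spectrum_product = [a * b for a, b in zip(dft(scene), dft(kernel))]
via_fourier = [v.real for v in idft(spectrum_product)]

print([round(v, 6) for v in direct])
print("max difference:", max(abs(a - b) for a, b in zip(direct, via_fourier)))
```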

Could optical neural networks be the future of inference? This author thinks so.