Nvidia unveils H200, and three more chips tailored for China

- Nvidia just unveiled the H200 Tensor Core GPU, a successor to its H100 that is set for release in the second quarter of 2024.

- The Nvidia H200 is the first GPU to offer HBM3e, a faster, larger memory that fuels the acceleration of generative AI and LLMs.

- Nvidia is also expected to launch AI chips tailored for China, responding swiftly to recent US restrictions on high-end chip sales to the Chinese market.

Around a year ago, OpenAI’s launch of ChatGPT reshaped the AI landscape and captured the attention of mainstream technology giants. Before this chatbot, built on an advanced language model, was unveiled, AI was a field many tech companies had explored but not prioritized. ChatGPT changed that entirely. It showed investors and major tech players that large language model (LLM) chatbots had progressed further than they had anticipated, and its novelty and sophistication caught industry giants such as Alphabet’s Google off guard.

Nvidia Corp plays a central role in this story. Its high-performance graphics processing units (GPUs) were already powering the AI data centers of major tech players such as Google, Microsoft, and Meta Platforms well before ChatGPT arrived. Today, some estimates put Nvidia’s share of the AI chip market as high as 80%.

This week, Nvidia unveiled the H200, its newest high-end chip for training AI models. The GPU succeeds the H100, which was unveiled in March 2022, and arrives as the AI chip giant strives to defend its dominant position in the industry. Intel, AMD, a slew of chip startups, and cloud service providers such as Amazon Web Services are all trying to capture market share amid a boom in demand for chips driven by generative AI workloads.

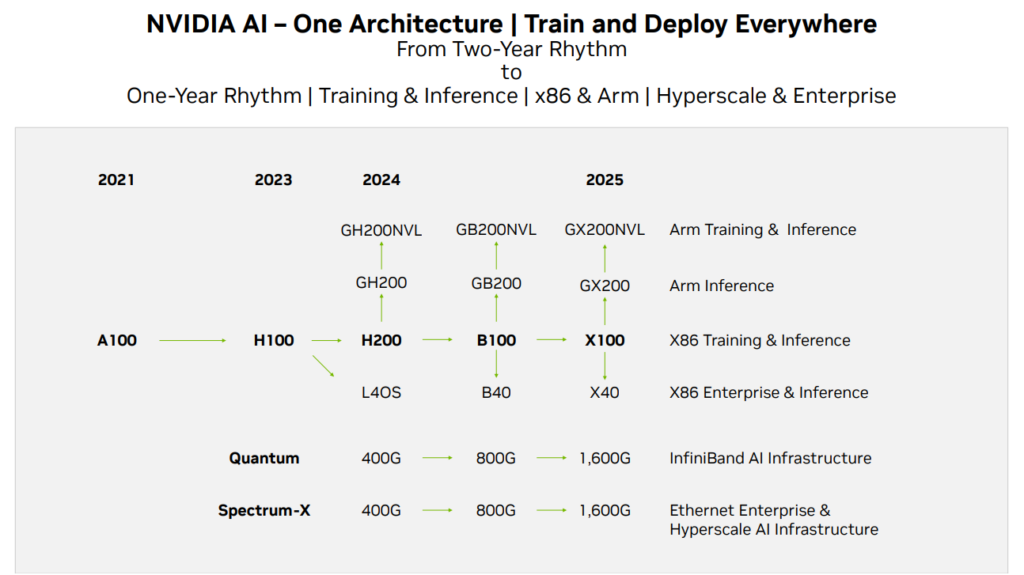

To maintain its lead in AI and high-performance computing (HPC) hardware, Nvidia told investors last month that it aims to accelerate the development of new GPU architectures, returning to an annual product introduction cycle. The H200, based on the Hopper architecture, marks the beginning of this strategy. As the successor to the H100, it is now Nvidia’s most powerful AI chip.

Nvidia plans to speed up development of new GPU architectures and essentially get back to its one-year cycle for product introductions, according to its roadmap published for investors. Source: Nvidia

Everything we know about the Nvidia H200

While the H200 looks similar to the H100, its memory upgrade is a significant enhancement. It is the first GPU to use HBM3e, a newer and faster memory specification, which raises memory bandwidth to 4.8 terabytes per second, roughly 43% more than the H100’s 3.35 terabytes per second. Total memory capacity also grows to 141GB, about 76% more than its predecessor’s 80GB.

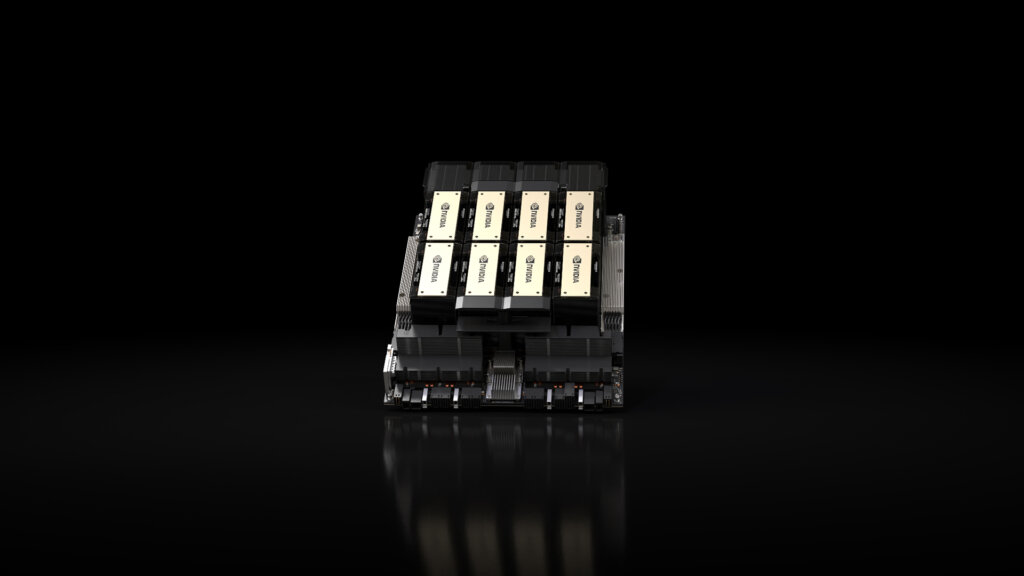

H200-powered systems from the world’s leading server manufacturers and cloud service providers are expected to begin shipping in the second quarter of 2024. Source: Nvidia

“The Nvidia H200 is the first GPU to offer HBM3e — faster, larger memory to fuel the acceleration of generative AI and large language models (LLMs) while advancing scientific computing for HPC workloads. With HBM3e, the NVIDIA H200 delivers 141GB of memory at 4.8 terabytes per second, nearly double the capacity and 2.4x more bandwidth compared with its predecessor, the NVIDIA A100,” the chip giant said.

Ian Buck, vice president of hyperscale and HPC at Nvidia, said, “With Nvidia H200, the industry’s leading end-to-end AI supercomputing platform just got faster to solve some of the world’s most important challenges.” For context, GPUs excel at AI workloads because they can run, in parallel, the enormous numbers of matrix multiplications that neural networks rely on. That makes them vital in both training and inference, where vast amounts of data must be processed efficiently.
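To make the matrix-multiplication point concrete, here is a toy sketch of a single dense neural-network layer in plain Python. The inputs and weights are made-up numbers, and this is not how Nvidia’s software implements it. It only illustrates why the work parallelizes so well: every entry of the output is an independent dot product, so a GPU can compute thousands of them at once.

```python
# Toy dense (fully connected) layer: outputs = relu(inputs x weights).
# Pure Python for illustration; real frameworks hand this work to GPU kernels.

def matmul(a, b):
    """Multiply an (m x k) matrix by a (k x n) matrix, both given as lists of rows."""
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    # Entry (i, j) depends only on row i of `a` and column j of `b`,
    # so all m * n entries could be computed simultaneously.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
            for i in range(m)]

def relu(x):
    """Element-wise activation applied after the matrix multiplication."""
    return [[max(0.0, v) for v in row] for row in x]

# Hypothetical batch of 2 inputs with 3 features each, mapped to 2 outputs.
inputs = [[1.0, 2.0, 3.0],
          [0.5, -1.0, 2.0]]
weights = [[0.1, -0.2],
           [0.0, 0.3],
           [0.2, 0.1]]

print(relu(matmul(inputs, weights)))
```

Training repeats this kind of operation, with far larger matrices, billions of times, which is where GPU parallelism pays off.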

Nvidia says the H200 will bring further performance leaps, including nearly doubling inference speed on the 70 billion-parameter version of Llama 2, a large language model, compared with the H100. The company also expects future software updates to deliver additional performance improvements for the H200.
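Why would more memory bandwidth speed up inference? When a large model generates text one token at a time, each step typically has to stream the model’s weights out of memory, so bandwidth caps throughput. The sketch below is a simplified back-of-envelope model built on our own assumptions: batch size 1, 16-bit weights (2 bytes per parameter), and no accounting for KV-cache traffic, compute limits, or multi-GPU overheads. It is not Nvidia’s benchmark methodology.

```python
# Back-of-envelope model: token generation treated as memory-bandwidth-bound.
# Assumptions (ours, not Nvidia's): batch size 1, FP16 weights, every generated
# token reads all weights from HBM once, other memory traffic and compute ignored.

BYTES_PER_PARAM_FP16 = 2

def weight_bytes(num_params: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Total size of the model weights in bytes."""
    return num_params * bytes_per_param

def max_tokens_per_second(bandwidth_tb_s: float, num_params: float) -> float:
    """Upper bound on tokens/s when each token must stream every weight once."""
    bandwidth_bytes_s = bandwidth_tb_s * 1e12
    return bandwidth_bytes_s / weight_bytes(num_params)

LLAMA2_70B_PARAMS = 70e9
specs_tb_s = {"H100": 3.35, "H200": 4.8}  # bandwidth figures quoted in the article

bounds = {gpu: max_tokens_per_second(bw, LLAMA2_70B_PARAMS) for gpu, bw in specs_tb_s.items()}
for gpu, tps in bounds.items():
    print(f"{gpu}: at most ~{tps:.0f} tokens/s per sequence")
print(f"Bandwidth-only speedup: {bounds['H200'] / bounds['H100']:.2f}x")
```

Under these assumptions the bound works out to roughly 24 tokens/s for the H100 and 34 for the H200, about 1.43x (4.8 / 3.35). That is less than the near-2x Nvidia reports, so bandwidth alone does not explain the full claimed gain, and this sketch does not model the other factors behind Nvidia’s figure. It also glosses over capacity: roughly 140GB of 16-bit weights would not fit in a single H100’s 80GB, so in practice the model is split across several GPUs.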

Nvidia also said it will make the H200 available in several form factors. They include Nvidia HGX H200 server boards in four- and eight-way configurations, compatible with both the hardware and software of HGX H100 systems. It will also be available in the Nvidia GH200 Grace Hopper superchip, which combines a CPU and GPU into one package.

“With these options, the H200 can be deployed in every type of data center, including on-premises, cloud, hybrid-cloud, and edge. Nvidia’s global ecosystem of partner server-makers — including ASRock Rack, Asus, Dell Technologies, Eviden, Gigabyte, Hewlett Packard Enterprise, Ingrasys, Lenovo, QCT, Supermicro, Wistron, and Wiwynn — can update their existing systems with an H200,” Nvidia noted.

According to the US-based chip giant, Amazon Web Services (AWS), Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure will be among the first cloud service providers to deploy H200-based instances starting next year, in addition to CoreWeave, Lambda, and Vultr. At present, Nvidia is at the forefront of the AI GPU market.

But major players such as AWS, Google, and Microsoft, along with established AI and HPC chipmakers such as AMD, are actively preparing next-generation processors for both training and inference. In response to this competition, Nvidia has brought forward the timelines for its B100- and X100-based products.

Three new chips for China to circumvent updated US restrictions

When the US Commerce Department first restricted companies from supplying advanced chips and chipmaking equipment to China in October 2022, the rules covered Nvidia’s A100 and H100 chips. It didn’t take long for Nvidia to unveil the A800, a pared-down version of the A100, as a workaround for the export restrictions. Then, in March 2023, Nvidia introduced the H800 as a substitute for the banned H100, with performance tuned to meet the Commerce Department’s criteria.

For the US, the goal is simple: to curb China’s ability to produce cutting-edge chips for weapons and other defense technology. So, in a widely expected move, the US issued fresh regulations in October this year that restricted exports of both the A800 and the H800, the chips Nvidia had tailored for China. The restrictions on Nvidia’s chips then took effect immediately rather than after the planned 30-day period, which rendered obsolete Nvidia’s initial strategy of using that window to fulfill more orders.

Nvidia, however, remains determined to keep supplying China. China accounts for around 20-25% of Nvidia’s data center revenue, a business that grew 171% year-over-year last quarter to more than US$10 billion. Nobody lets that go easily; that’s just capitalism.

Demand for Nvidia’s chips in China is too large to ignore. This past June, an Nvidia AI chip was selling for US$20,000 on the country’s underground market, double its retail price.

In August, China’s four most prominent internet players alone (Alibaba, Baidu, ByteDance, and Tencent) were reported to have ordered around 100,000 of Nvidia’s A800 processors, worth a staggering US$1 billion. With demand like that, Nvidia was not going to walk away from China easily, so it set to work on three new chips designed to get around the latest US export ban.
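Dividing the reported order value by the unit count gives a quick sanity check on these figures. It works out to roughly US$10,000 per A800, which lines up with the retail price implied by the June underground-market figure. The snippet assumes both reports refer to comparable chips and treats “around 100,000” as exactly 100,000. It is an illustration, not a pricing analysis.

```python
# Rough consistency check using figures reported in this article.
order_value_usd = 1_000_000_000   # reported combined A800 order value
units_ordered = 100_000           # "around 100,000" A800 processors, treated as exact

implied_avg_price = order_value_usd / units_ordered
print(f"Implied average price: about US${implied_avg_price:,.0f} per A800")

# June underground-market price for "an Nvidia AI chip", reported as double retail.
underground_price_usd = 20_000
implied_retail_price = underground_price_usd / 2
print(f"Implied retail price: about US${implied_retail_price:,.0f}")
```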

Nvidia’s H200 chips are just the start of a policy to out-evolve US restrictions. Source: X

“When the US implemented updated AI restrictions, we thought the US locked down every single loophole conceivable. To our surprise, Nvidia still found a way to ship high-performance GPUs into China with their upcoming H20, L20, and L2 GPUs,” the chip industry newsletter SemiAnalysis wrote when it broke the news. SemiAnalysis also reported that Nvidia already has product samples of these GPUs, which are expected to enter mass production within the next month.

“That yet again shows the company’s supply chain mastery,” Dylan Patel, chief analyst at SemiAnalysis, wrote. Still, Nvidia suffered its steepest stock decline in months when the Biden administration stepped up its efforts to slow China’s technological development in October. The company also cautioned that the recent restrictions could affect its product development and its ability to supply customers.

Under the new US export control regulations, Nvidia cannot ship its flagship consumer gaming graphics card, the RTX 4090, to China. Although Nvidia has said it doesn’t anticipate an immediate financial impact from the restrictions, reports indicate that Chinese tech firms could cancel orders worth billions of dollars.

Possible implications

According to Patel, one of the China-specific GPUs is over 20% faster than the H100 in LLM inference and is more similar to the new GPU that Nvidia is launching early next year than it is to the H100. Spec details on Nvidia’s new GPUs show that the AI chip giant is “perfectly straddling the line on peak performance and performance density with these new chips to get them through the new US regulations,” he added.

According to a Reuters report citing a note from Wells Fargo analyst Aaron Rakers, all three of Nvidia’s reported chips appear to fall below the rules’ absolute caps on computing power, although one seems to sit in a gray zone that will require a license.
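The “peak performance” and “performance density” limits that Patel and Rakers refer to correspond, as we read the October 2023 rule, to two metrics under Export Control Classification Number (ECCN) 3A090. These are total processing performance (TPP, roughly dense throughput multiplied by the bit length of the operation) and TPP divided by die area. The sketch below encodes our reading of the thresholds and should be checked against the official Federal Register text. It ignores the rule’s notes and exceptions, and the three chips are hypothetical, with made-up numbers that do not describe any real Nvidia product.

```python
# Hedged sketch of the ECCN 3A090 screening metrics from the October 2023 US rule,
# as we read them. Thresholds should be verified against the official text;
# the rule's notes and exceptions are not modeled here.

def total_processing_performance(dense_tops_by_bits: dict[int, float]) -> float:
    """TPP = 2 x MacTOPS x bit length, taking the highest value across precisions.

    One multiply-accumulate counts as two operations, so 2 x MacTOPS equals the
    dense (non-sparse) TOPS figure vendors usually quote for that bit length.
    """
    return max(tops * bits for bits, tops in dense_tops_by_bits.items())

def performance_density(tpp: float, die_area_mm2: float) -> float:
    """TPP divided by the chip's applicable die area in square millimetres."""
    return tpp / die_area_mm2

def classify(tpp: float, density: float) -> str:
    """Return which 3A090 band (if any) a chip falls into, per our reading."""
    if tpp >= 4800 or (tpp >= 1600 and density >= 5.92):
        return "3A090.a"
    if (2400 <= tpp < 4800 and 1.6 <= density < 5.92) or (
        tpp >= 1600 and 3.2 <= density < 5.92
    ):
        return "3A090.b"
    return "below both thresholds"

# Hypothetical chips: name -> ({bit length: dense TOPS}, die area in mm^2)
chips = {
    "Chip A": ({8: 1000.0, 16: 500.0}, 800.0),
    "Chip B": ({16: 150.0}, 800.0),
    "Chip C": ({16: 90.0}, 800.0),
}

for name, (tops, area) in chips.items():
    tpp = total_processing_performance(tops)
    density = performance_density(tpp, area)
    print(f"{name}: TPP={tpp:.0f}, density={density:.2f} -> {classify(tpp, density)}")
```

Because the bands use inclusive lower bounds, a chip sitting exactly on a threshold (like hypothetical Chip B’s TPP of 2400) counts as inside the band rather than below it.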

Rakers said that while the introduction of these three new GPUs is positive, “we would expect investors to question whether [Nvidia] is being a bit too aggressive in its efforts to circumvent US restrictions and could ultimately just result in further [US government] moves going forward,” noting that Nvidia gets around a quarter of its data center chip revenue from China.