How is Nvidia dominating the AI boom with its chips?

Long before the emergence of ChatGPT brought the AI revolution into view, a quiet titan was already driving tech disruption without building any AI applications of its own. Nvidia Corp, the company behind most of the world's AI chips today, anticipated where the market was heading and prepared itself for the AI boom.

That's why Nvidia is now the company best positioned for AI growth, as businesses rely on its high-end GPUs to power chatbots like ChatGPT and Bard. Nvidia had the technology ready for the AI revolution because its co-founder and CEO saw the future coming more than a decade ago.

Experts call Nvidia a one-stop shop for what companies need to drive their AI ambitions. Essentially, the company controls the entire ecosystem, on both the hardware and software sides. That position helped the company surge past US$1 trillion in market capitalization. In March this year, Nvidia became the world's most valuable chipmaker, a sign of booming demand for AI-capable GPUs.

In other words, Nvidia has been the biggest beneficiary of the rise of ChatGPT and other generative AI apps, virtually all of which are powered by its graphics processors. Before that, Nvidia's chips already powered more conventional AI systems, and demand for them also rose during the cryptocurrency boom, since that industry's systems relied on Nvidia's processing power too.

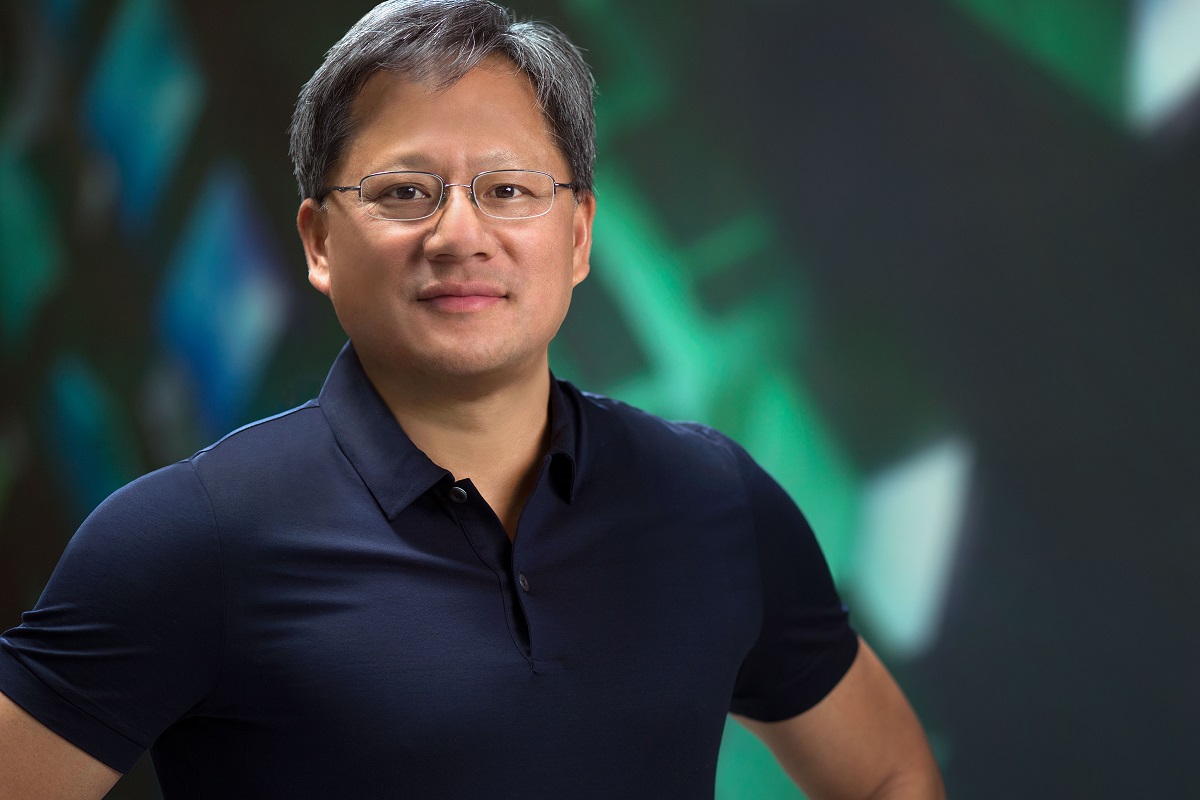

Nvidia has cornered the market for AI chips, and it didn't do that by jumping on the post-ChatGPT bandwagon. Nvidia's co-founder and CEO, Jensen Huang, has long been so confident about AI that, to those who couldn't yet see what the future was going to look like, his conviction mostly sounded like lofty rhetoric or a marketing gimmick.

How did Nvidia get to the inflection point of AI before anyone else?

Nvidia, led by Huang, has always been at the cutting edge of development: first gaming, then machine learning, followed by cryptocurrency mining, data centers, and AI. Over the last decade, the chip giant developed a unique portfolio of hardware and software offerings to democratize AI, putting the company in pole position to benefit as businesses adopted AI workloads.

But the real turning point was 2017, when Nvidia started tweaking GPUs to handle specific AI calculations. That same year, Nvidia, which typically sold chips or circuit boards for other companies’ systems, also began selling complete computers to carry out AI tasks more efficiently.

Some of its systems are now the size of supercomputers, which it assembles and operates using proprietary networking technology and thousands of GPUs. Such hardware may run for weeks to train the latest AI models. For some rivals, it was tough to compete with a company that sold computers, software, cloud services, trained AI models, and processors.

Tech giants like Google, Microsoft, Facebook, and Amazon were already buying more Nvidia chips for their data centers by 2017. Institutions like Massachusetts General Hospital use Nvidia chips to spot anomalies in medical images like CT scans.

By then, Tesla had announced it would install Nvidia GPUs in all its cars to enable autonomous driving. Nvidia chips also provided the horsepower behind virtual reality headsets like those brought to market by Facebook and HTC. Nvidia was gaining a reputation for consistently delivering faster chips every couple of years.

Looking back, it’s safe to say that over more than ten years, Nvidia has built a nearly impregnable lead in producing chips that can perform complex AI tasks like image, facial, and speech recognition, as well as generating text for chatbots like ChatGPT.

The secret to its success? Nvidia achieved its dominance by recognizing the AI trend early, tailoring its chips to those tasks, and then developing critical pieces of software that aid AI development. As The New York Times (NYT) puts it, Nvidia has gradually become, for all intents and purposes, a one-stop shop for AI development.

According to the research firm Omdia, while Google, Amazon, Meta, IBM, and others have also produced AI chips, Nvidia today accounts for more than 70% of AI chip sales. It holds an even more prominent position in training generative AI models. Microsoft alone spent hundreds of millions of dollars on tens of thousands of Nvidia A100 chips to help build ChatGPT.

“By May this year, the company’s status as the most visible winner of the AI revolution became clear when it projected a 64% leap in quarterly revenue, far more than Wall Street had expected,” NYT’s Don Clark said in his article.

The “iPhone moment” of generative AI: Nvidia’s H100 AI chips

"We are at the iPhone moment for AI," Huang said during his keynote at Nvidia's GTC conference in March this year. He pointed out Nvidia's role at the start of this AI wave: he delivered a DGX AI supercomputer to OpenAI in 2016, hardware that was ultimately used to build ChatGPT.

At GTC, Nvidia showcased its H100, the successor to its A100 GPUs, which have been foundational to modern large language model development. Nvidia touted the H100 as up to nine times faster than the A100 for AI training and up to 30 times faster for inference.

While Nvidia doesn't discuss prices or chip allocation policies, industry executives and analysts said each H100 costs between US$15,000 and more than US$40,000, depending on the packaging and other factors, roughly two to three times more than its predecessor, the A100.

Yet the H100 continues to face a massive supply crunch amid skyrocketing demand, even as shortages have eased across most other chip categories. Reuters reported that analysts believe Nvidia can meet only half the demand, and that the H100 is selling for double its original price of US$20,000. That trend, according to Reuters, could continue for several quarters.


A significant share of the demand surge is coming from China, where companies are stockpiling chips ahead of US chip export curbs. A report by the Financial Times indicated that China's leading internet companies had placed orders for US$5 billion worth of chips from Nvidia. The gap between supply and demand is likely to push some buyers toward Nvidia's rival AMD, which is looking to challenge Nvidia's most powerful AI offering with its MI300X chip.

What’s next?

Today, the world; tomorrow, who knows?

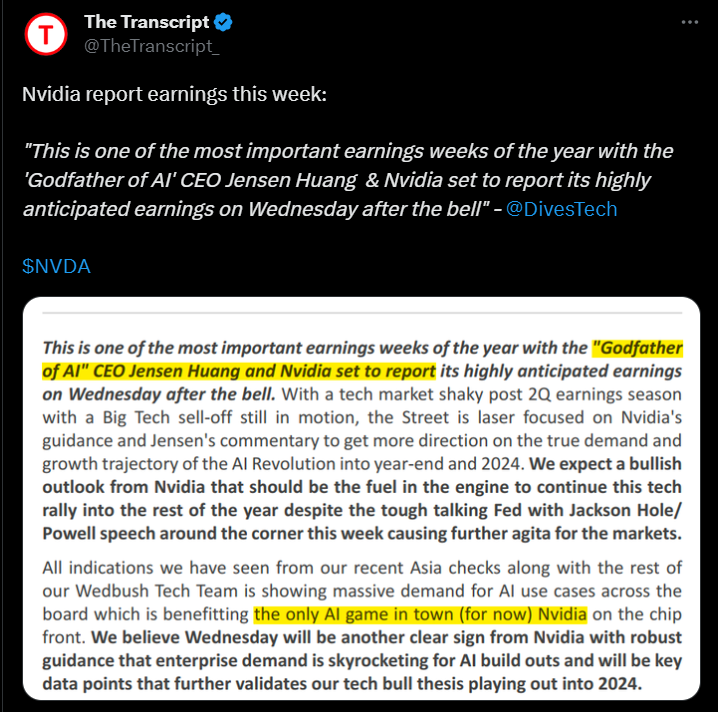

With Nvidia shares having tripled in value this year, adding more than US$700 billion to the company's market valuation and making it the first trillion-dollar chip firm, investors expect the chip designer to forecast quarterly revenue above estimates when it reports results on August 23.

Nvidia’s second-quarter earnings will be the AI hype cycle’s biggest test. “What Nvidia reports in its upcoming earnings release is going to be a barometer for the whole AI hype,” Forrester analyst Glenn O’Donnell told Yahoo. “I anticipate that the results will look outstanding because demand is so high, meaning Nvidia can command even higher margins than otherwise.”

Wall Street expects the chip company to forecast third-quarter revenue of about US$12.50 billion, a rise of roughly 110% from a year earlier, according to Refinitiv.


Nvidia won't be the only provider in town. What sets it above its competitors, however, is its comfortable lead and patent-protected technology, developed at such an early stage. Some market experts believe that, for now, AMD is the "only viable alternative" to Nvidia's AI chips.