Microsoft completes AI puzzle with own chips

- Microsoft unveils two new chips to boost its AI infrastructure.

- Microsoft also extends partnerships with AMD and Nvidia.

- Can it navigate its latest personnel issues to push its AI agenda?

It’s been a busy week for Microsoft. Not only has the tech giant finally unveiled its own chips to power its AI infrastructure, but it has also had to deal with the drama unfolding at OpenAI.

OpenAI’s board sacked CEO Sam Altman, and he has not been reinstated. The news has since overshadowed all the big announcements from Microsoft’s Ignite event. It’s a big blow not just to OpenAI but to Microsoft itself, especially given the success OpenAI has had under Altman’s leadership.

In his keynote address at Ignite, Microsoft CEO Satya Nadella said that as OpenAI innovates, Microsoft will deliver all of that innovation as part of Azure AI by adding more to its catalog. This includes the new models as a service (MaaS) offering in Azure.

MaaS offers pay-as-you-go inference APIs and hosted fine-tuning for Llama 2 in the Azure AI model catalog. Microsoft is expanding its partnership with Meta to offer Llama 2 as the first family of large language models (LLMs) available through MaaS in Azure AI Studio. By offering access to Llama 2 as an API, MaaS makes it easier for generative AI developers to build LLM apps.

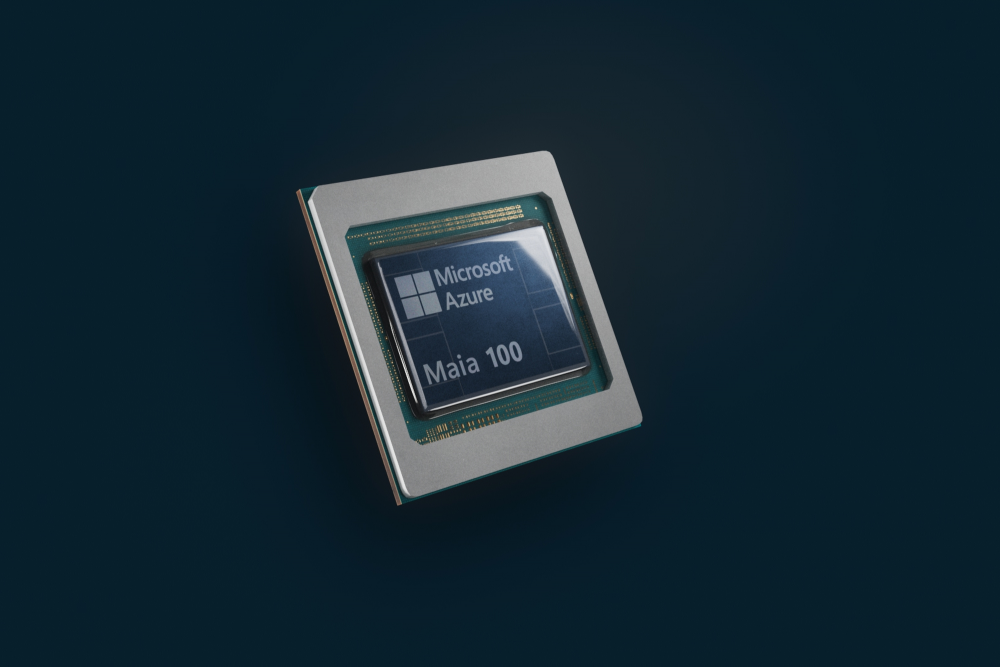

The Microsoft Azure Maia AI Accelerator is the first chip designed by Microsoft for large language model training and inferencing in the Microsoft Cloud. (Image by Microsoft).

Sam Altman joins Microsoft

While Altman was not present at the Ignite event, a Bloomberg report said that Microsoft executives were taken by surprise by the announcement of his firing. Nadella in particular was reportedly left blindsided and furious by the news.

As Microsoft is OpenAI’s biggest investor, Nadella played a central role in negotiations with the board over plans to bring Altman back. But it seems that ship may have sailed.

It may still be early days, but the decision to remove Altman could affect not only the future of OpenAI but also how Microsoft moves forward with its big AI plans.

The Information reported that Microsoft was considering taking a role on OpenAI’s board if Altman returned to the ChatGPT developer, according to two people familiar with the talks. Microsoft could either take a seat on OpenAI’s board of directors or join as a board observer without voting power, one of the sources said.

However, in the latest development, Nadella tweeted that Altman will now join Microsoft instead.

“We remain committed to our partnership with OpenAI and have confidence in our product roadmap, our ability to continue to innovate with everything we announced at Microsoft Ignite, and in continuing to support our customers and partners,” Nadella tweeted.

Nadella’s tweet confirming that Altman will join Microsoft.

New Microsoft chips complete AI puzzle

Going back to Microsoft Ignite, the company announced two new chips to help boost its AI infrastructure. As demand for AI workloads grows, the data centers processing these requests need to keep up. That means having chips that can power the data center and meet customer needs.

While Microsoft already partners with AMD and Nvidia for chips, the two new chips it announced may be the final piece Microsoft needs to take full control of its AI infrastructure supply chain.

The two new chips are:

- The Microsoft Azure Maia AI Accelerator – optimized for AI tasks and generative AI. Because the chip is designed specifically for the Azure hardware stack, aligning its design with the larger AI infrastructure, itself built with Microsoft’s workloads in mind, can yield huge gains in performance and efficiency.

- The Microsoft Azure Cobalt CPU – an Arm-based processor tailored to run general-purpose compute workloads on the Microsoft Cloud. It is optimized to deliver greater efficiency and performance in cloud-native offerings. The chip also aims to optimize Microsoft’s “performance per watt” throughout its data centers, which essentially means getting more computing power for each unit of energy consumed.

The chips represent the last puzzle piece for Microsoft when it comes to delivering infrastructure systems – which include everything from silicon choices, software and servers to racks and cooling systems – that have been designed from top to bottom and can be optimized with internal and customer workloads in mind.

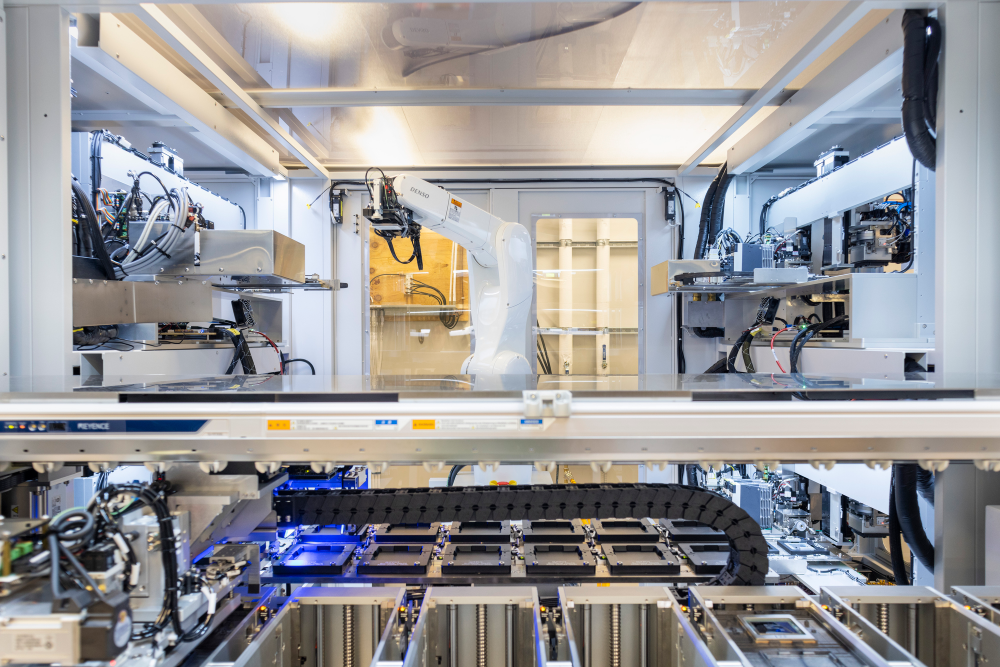

A system-level tester at a Microsoft lab in Redmond, Washington, mimics conditions that a chip will experience inside a Microsoft data center. (Image by Microsoft).

According to Omar Khan, general manager for Azure product marketing at Microsoft, Maia 100 is the first generation in the series. With 105 billion transistors, it is one of the largest chips built on 5nm process technology. The innovations for Maia 100 span the silicon, software, network, racks and cooling capabilities, equipping the Azure AI infrastructure with end-to-end system optimization tailored to the needs of groundbreaking AI such as GPT.

Meanwhile, Cobalt 100, also the first generation in its series, is a 64-bit, 128-core chip that delivers up to a 40% performance improvement over the current generation of Azure Arm chips and already powers services such as Microsoft Teams and Azure SQL.

“Networking innovation runs across our first-generation Maia 100 and Cobalt 100 chips. From hollow core fiber technology to the general availability of Azure Boost, we’re enabling faster networking and storage solutions in the cloud. You can now achieve up to 12.5 GBps throughput and 650K input/output operations per second (IOPS) in remote storage performance to run data-intensive workloads, and up to 200 Gbps in networking bandwidth for network-intensive workloads,” said Khan.

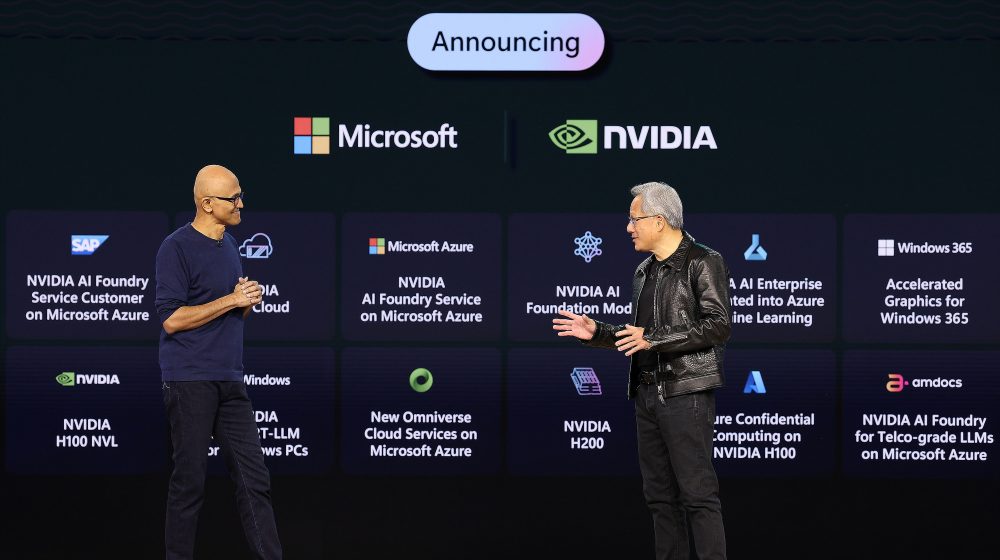

Microsoft chairman and CEO Satya Nadella and Nvidia founder, president and CEO Jensen Huang at Microsoft Ignite 2023. (Source: Microsoft)

Chips for all

Microsoft joins a growing list of companies that have decided to build their own chips, driven by rising chip costs and long wait times for supply. In fact, many of the largest tech companies have announced plans to build their own chips to support growing demand and the use cases being developed.

For example, OpenAI had reportedly been exploring its own chips, despite relying heavily on Nvidia. Bloomberg also reported that Altman was actively looking for investors to get the effort going. He planned to spin up an AI-focused chip company that could produce semiconductors to compete with those from Nvidia Corp., which currently dominates the market for artificial intelligence tasks.

Other companies designing and producing their own chips include Apple, Amazon, Meta, Tesla and Ford, each tailoring its chips to its own use cases.

Despite unveiling two new chips for AI, Microsoft also announced extensions to its partnerships with Nvidia and AMD. Azure works closely with Nvidia to provide virtual machines (VMs) based on the Nvidia H100 Tensor Core graphics processing unit (GPU) for mid- to large-scale AI workloads, including Azure Confidential VMs.

On top of that, Khan said Microsoft will add the latest Nvidia H200 Tensor Core GPU to its fleet next year to support larger model inferencing with no increase in latency. As for AMD, Khan said Microsoft customers will be able to access AI-optimized VMs powered by AMD’s new MI300 accelerator early next year.

“These investments have allowed Azure to pioneer performance for AI supercomputing in the cloud and have consistently ranked us as the number one cloud in the top 500 of the world’s supercomputers. With these additions to the Azure infrastructure hardware portfolio, our platform enables us to deliver the best performance and efficiency across all workloads,” Khan stated.