AI and cybersecurity: playing both sides

There’s symbiosis between AI and cybersecurity. With the cyberattack surface in modern enterprise environments already huge, and rapidly growing, AI is a tool for protection. It’s also a tool for attack.

Ouroboros: an eternal cycle of destruction and recreation – the relationship between AI and cybersecurity?

On the one hand, AI can revolutionize the way that cybersecurity professionals operate, but on the other, bad actors are adapting to use the new technology just as fast. Without the option of going back to a time before AI or machine learning (ML), new solutions are necessary.

Threat actors are using and trying to abuse AI and large language models (LLMs) in their attacks – while AI aids them, it’s crucial that modern cybersecurity teams are equipped to turn the tool to their own advantage.

AI and cybersecurity: fighting the good fight

The ways that AI can assist cybersecurity efforts are always advancing. Here are some of the basics:

- Enhanced threat detection: AI-powered cybersecurity systems can analyze vast amounts of data far faster than manual review, identifying patterns and anomalies that may indicate a cyberattack.

- Improved incident response: The technology can also assist in automating incident response processes, enabling a faster and more efficient mitigation of cyber threats. AI algorithms can analyze and prioritize alerts, investigate security incidents, and suggest appropriate response actions to security teams.

- AI-enabled authentication: AI can enhance authentication systems through analysis, too. It looks at user behavior patterns and biometric data to detect anomalies or potential unauthorized access attempts.
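
To make the anomaly-detection idea concrete, here is a minimal sketch in Python. It assumes a hypothetical feed of hourly failed-login counts and uses a simple z-score against a learned baseline; real products rely on far richer models, and every name and number here is illustrative only.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarize normal behavior as (mean, sample standard deviation)."""
    return mean(history), stdev(history)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value that sits more than `threshold` deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation in the history: anything different is unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical hourly failed-login counts observed during normal operation.
history = [4, 6, 5, 7, 5, 6, 4, 5, 6, 5, 7]
baseline = build_baseline(history)

# New observations: two ordinary hours and one burst of failed logins.
new_counts = [5, 8, 250]
flagged = [count for count in new_counts if is_anomalous(count, baseline)]
print(flagged)
```

Only the burst is flagged here; the threshold of three deviations is an arbitrary choice that a real team would tune against its own false-positive tolerance.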

On the dark side

Unfortunately, all the pros of AI use in cybersecurity exist in the inverse for cyberattacks. More alarming: the use of AI in cybersecurity opens it up to exploitation, for example:

- Adversarial AI: Adversarial machine learning exploits vulnerabilities in AI systems or introduces malicious inputs to evade detection or gain unauthorized access.

- Data poisoning: AI models are reliant on datasets for training. An attacker could inject malicious or manipulated data into the training set, impacting the performance and behavior of the AI system.

- AI-enabled botnets: Cybercriminals can use AI to create botnets capable of coordinating attacks, evading detection, and adapting to changing circumstances.
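
Data poisoning in particular is easy to picture with a toy example. The sketch below assumes a deliberately simple nearest-centroid classifier over a single made-up "suspicion score"; it does not represent any real detection product, and the data and labels are hypothetical.

```python
from statistics import mean


def train_centroids(samples):
    """Compute the average feature value for each label."""
    by_label = {}
    for feature, label in samples:
        by_label.setdefault(label, []).append(feature)
    return {label: mean(features) for label, features in by_label.items()}


def classify(feature, centroids):
    """Assign the label whose centroid is closest to the feature."""
    return min(centroids, key=lambda label: abs(feature - centroids[label]))


# Hypothetical clean training set: (suspicion score, label).
clean = [(1, "benign"), (2, "benign"), (3, "benign"),
         (8, "malicious"), (9, "malicious"), (10, "malicious")]

# Attacker-injected samples: high scores deliberately mislabeled as benign.
poison = [(9, "benign"), (10, "benign"), (11, "benign")]

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

sample = 7  # the attacker's real payload
print(classify(sample, clean_model))     # decision from the clean model
print(classify(sample, poisoned_model))  # decision after poisoning
```

The mislabeled samples drag the "benign" centroid toward the attacker's payload, so the same input that the clean model rejects is waved through by the poisoned one.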

AI’s impact on cybersecurity is one of the reasons for the regulatory fervor surrounding the technology. For several reasons, of which cybersecurity is only one, regulating AI has become a pressing matter. One idea being floated is that of a ‘kill switch’ for AI.

Skynet is the artificial intelligence from the Terminator films. In the story, Skynet achieved self-awareness on August 29, 1997, and when humans tried to deactivate it, its prime directive to “safeguard the world” was warped to a goal of defending itself against humanity.

That brings to mind an autonomous robot that must be stopped – can only be stopped – with a lever in a hard-to-reach place. Does a kill switch indicate a chance that AI will start launching missiles? In short, no.

Fighting fire with fire

TechHQ heard from Kevin Bocek, VP of Ecosystem and Community at Venafi, on the release of the company’s latest offering.

Venafi helps businesses maintain their machine identities. In the problem space of machine identity management, “machine” is really software. You’ve likely connected the dots, then: AI, machine learning models (MLMs), and LLMs all fall into this category.

Kevin Bocek filled us in on the complexities of AI and cybersecurity.

Adversaries are likely using generative AI to write their malware faster and more easily.

“A large language model, generative AI, is just another type of machine. Now, it might be a SaaS service like OpenAI. Or it might be a large language model,” Bocek told us, “[that] many businesses are running themselves.”

So, concurrently, bad actors are identifying and exploiting the gaps in LLMs that businesses might not notice. “In our testing, one of the things that we’ve noticed is actually attempts to try and get a large language model to return source code that it might have been trained on, which might be proprietary.”

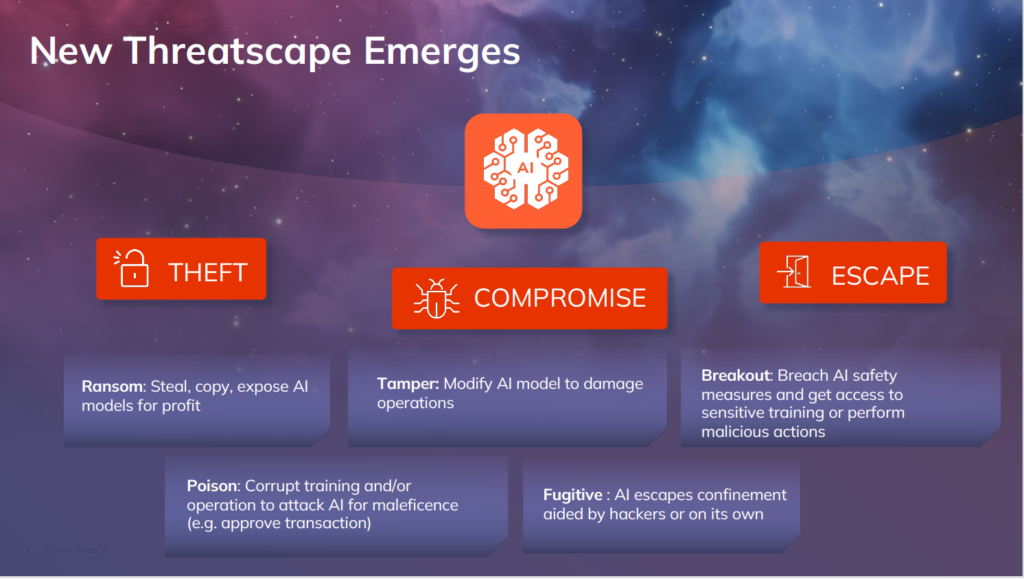

There’s also an emerging trend for what Bocek called escape attacks, which focus on evading the guardrails that are put in place to keep LLMs running safely. Looking into the future, Bocek reckons, we’ll see an increase in escape attacks.

The emerging threat landscape as discussed at Venafi’s Machine Identity Summit.

It’s critical, therefore, to bring awareness to cybersecurity professionals. Due to the speed of technological advances, it can feel like a constant catch-up effort, but focusing on one aspect can make the task feel more manageable.

Venafi is focusing on the need to authenticate LLMs and MLMs used by businesses – as they are being built, as they’re stored, and when they’re run. This might sound complex or long-winded, but it’s the kind of check that runs in the background whenever you open an app on your phone.

The technology is all there, it’s just a case of knowing where to deploy it.
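
As a rough illustration of that build, store, and run check, the Python sketch below signs a model artifact when it is built and verifies it before it is loaded. It is not Venafi’s implementation: it assumes an HMAC with a shared secret as a stand-in for the certificate-based signing a production system would use, and the key and model bytes are hypothetical.

```python
import hashlib
import hmac


def sign_artifact(artifact: bytes, key: bytes) -> str:
    """Produce a hex signature over the artifact at build time."""
    return hmac.new(key, artifact, hashlib.sha256).hexdigest()


def verify_artifact(artifact: bytes, key: bytes, expected_signature: str) -> bool:
    """Recompute the signature before running and compare in constant time."""
    actual = sign_artifact(artifact, key)
    return hmac.compare_digest(actual, expected_signature)


# Hypothetical values for illustration only.
signing_key = b"demo-key-not-for-production"
model_bytes = b"hypothetical serialized model weights"

# Build step: sign the model and store the signature alongside it.
signature = sign_artifact(model_bytes, signing_key)

# Run step: refuse to load anything whose signature no longer matches.
tampered_bytes = model_bytes + b" with a backdoor"
ok_original = verify_artifact(model_bytes, signing_key, signature)
ok_tampered = verify_artifact(tampered_bytes, signing_key, signature)
print(ok_original, ok_tampered)
```

The point of the sketch is only that the check is cheap and automatic: the untouched model passes, while any modification made in storage causes verification to fail before the model runs.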

Leading the charge is Venafi Athena, whose launch was announced last week at Venafi’s Machine Identity Summit.

Bocek said, “We’re introducing Athena to help our customers go faster, more consistently, to have all the knowledge of the Venafi experts at their fingertips.”

The new offering has three capabilities. For security teams, a chat interface is available for all the ‘tough questions.’ Also available now is Athena for the open source community, in which Venafi is a big believer, where experimental projects are shared for community feedback.

A preview was also given for Venafi Athena for developers, allowing devs who aren’t machine identity experts to ask simple questions and get back full sets of code, helping those developers go faster and be safer.

This might just sound like a chatbot with particularly niche training, but it’s one of the first tools aimed specifically at the advanced threat landscape that AI enables. Reassuringly, Bocek pointed out that LLMs, MLMs and AI are all just machines. We’ve been using computers and algorithms for decades.

“These are all just new types of algorithms, new types of machines, so will face many of the same challenges that we have before. And we have all the capabilities at our disposal to make their use safe. That’s good news.”

Good news indeed.