Generative AI – the pace of change

• Generative AI has uses in business.

• Discovering the best use cases for it could be challenging.

• It will take the drudgery out of work within the next eighteen months.

In Part 1 of this article, we spoke to Dennis Winter, CTO at Solaris, an embedded finance firm familiar with the data issues inherent in adding generative AI chatbots like ChatGPT into fundamental company systems, to explore where generative AI might have a future in the financial sector.

Dennis explained that generative AI could work well to smooth operations by one company across international borders and international regulatory environments, freeing the companies themselves to focus on their core competencies.

While we had him in the chair, though, we wanted to delve a little deeper into an issue he’d raised in Part 1 – the seeming “heaviness” of most commercially available large language models, which made them unwieldy for many businesses, and the subsequent adoption of those models by open-source coders.

Those coders have developed significantly improved models, allowing functionality that the multimillion-dollar versions from the likes of Google and the Microsoft-financed OpenAI cannot yet even dream of, but doing it on smaller, more focused scales across the board.

Generative AI can help smooth out international business practices.

That means generative AI that could run on just a couple of Threadripper laptops and work on very specific elements of a business, rather than, to use Dennis’ phrase, models that “everyone was asking about how to bake their favorite cakes.”

The way of the future.

Was this, we asked, the way in which generative AI would be most functionally useful to businesses in the future?

DW:

Interesting idea. In recent weeks, we’ve shifted from those big heavy models that at the moment are too big for us to deploy, to this situation where we’re thinking maybe we can have ten in a single business, but in different areas of the organization or different use cases, trained with specific data, right where it is.

THQ:

That’s the issue, isn’t it? Just months ago, generative AI by OpenAI was the thing that was going to change the world. Now the business world seems to have shifted entirely on its axis.

Again.

The open-source community getting its hands on these models seems to have taken an offering from, as you say, being this huge thing that’s unwieldy and interesting, but which it’s actually quite difficult to get practical use out of, down to these practical microsystems, out of which companies can quite clearly see how to get the value in their business.

Who knows where we’ll be six months from now?

DW:

Exactly – it’s actually quite crazy. I had a conversation with one of our engineers – we had an AWS summit two weeks ago. And AWS also announced generative AI tooling.

It’s actually like Docker containers, you just deploy a service somewhere that is trained with a specific preset of data, you add your stuff on top of it, and there it goes. You have your AI, you have your API, and you’re integrating with it.

So from that perspective, as you said, what happens in the next six months will be very interesting in this regard, just in terms of what proper use cases can be derived from it.

THQ:

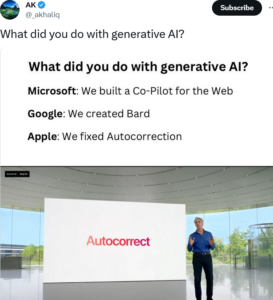

That shifts the whole perspective, doesn’t it, by massively reducing the amount of learning that organizations need to do to really get the best out of something like ChatGPT, or GPT-4, or Bard.

I said Generative AI – good God, y’all – what is it good for? Absolutely lots of things.

That’s really been miniaturized by the idea that if you just use a relevant few subsets of the whole available training data, and you add your project-specific data in, it will just do the things you want it to do. It might not tell you how to make the perfect strudel, but that’s what Google is for in any case.

Think about generative AI from a strictly business perspective – find out where it really can help.

So the whole thing has been sped up, shrunk down and made much more user-friendly in a matter of weeks since the open-source community got its ever-busy hands on it.

Showing our working.

DW:

Yeah. And in particular, what will be interesting to see, because we are heavily regulated, will be how we answer the question of showing our working.

I think, at the end of 2021, we had eight audits happening in parallel. And we are always looking at having two or three audits ongoing at the same time.

One of the things those audits are usually looking into is how we can explain how we reached a certain conclusion or a certain calculation. How did we end up with this result?

With generative AI, it’s going to be a little bit more complicated in this regard. Because we have this terabyte of data, and we gave the generative AI this question, and it answered that question based on the data, because it’s a pattern that it found.

So that’s going to be an interesting development in the whole regulatory field. What will the regulators say? There’s no way they can prevent that from happening.

And there’s always the arms race argument – if we don’t do these things, someone else will do it. It will show up somewhere, it’s out there.

So they will have to come up with some idea, and wrap their heads around how they can make sure that what is happening in there is actually okay, according to scale.

That will be an interesting conversation, as soon as we really use generative AI in a big way – and that will depend on the specific use case – whether we will have deeper discussions with auditors or even regulators at some point about how to assess the outputs of systems like generative AI.

Because it’s different. If you use machine learning, you can probably correlate the start point and the end point, because they relate in some way, there’s a pattern. But a result derived from generative AI is something entirely new. So proving your work becomes increasingly challenging.
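One modest way to approach "showing our working" is to record, for every generated answer, what went in and what came out. The sketch below is illustrative only: the `AuditRecord` schema, the `record_answer` helper and all field names are assumptions, not anything Solaris or a regulator has described. A log like this documents inputs and outputs; it does not explain why the model answered as it did.

```python
import hashlib
import json
from dataclasses import dataclass, asdict, field
from datetime import datetime, timezone
from typing import List, Sequence, Tuple


@dataclass(frozen=True)
class AuditRecord:
    """One generated answer plus the context an auditor might ask for.

    Hypothetical schema, for illustration only.
    """
    question: str
    answer: str
    model_version: str            # which model produced the answer
    source_ids: Tuple[str, ...]   # documents supplied to the model as context
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def digest(self) -> str:
        """SHA-256 over the record's sorted-key JSON, so later edits are detectable."""
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()


def record_answer(
    log: List[dict],
    question: str,
    answer: str,
    model_version: str,
    source_ids: Sequence[str],
) -> AuditRecord:
    """Append the record and its digest to an in-memory log and return the record."""
    record = AuditRecord(question, answer, model_version, tuple(source_ids))
    log.append({"record": asdict(record), "sha256": record.digest()})
    return record


if __name__ == "__main__":
    audit_log: List[dict] = []
    record_answer(
        audit_log,
        question="What is the fee for this transfer?",
        answer="(model output would go here)",
        model_version="example-model-v1",   # placeholder name
        source_ids=["fee-schedule-2023"],    # placeholder document ID
    )
    print(json.dumps(audit_log, indent=2))
```

In practice the log would go to append-only storage rather than a Python list, but the idea is the same: an auditor can at least see which question, which model version and which source documents sat behind a given answer.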

The abracadabra factor.

THQ:

Because you’ve basically employed a system to take that work out of your hands, and surprise you.

DW:

Exactly.

THQ:

With machine learning, as you say, there’s a pattern, a formula almost. It’s potentially enormously complicated, which is why we use ML to figure it out, but it’s not beyond us to see the steps. With generative AI, it really is as “magical” in its process as “We gave it this, it did that, and abracadabra, here’s the result.”

How do regulators get beyond that and ask “Well, yes, but is that result the right result?”

DW:

It really will depend on the specific use case, because usually, in software engineering, what you’re supposed to do is test the system. So you write a piece of software, and then you want to check whether it’s doing what you expect it to do.

Now, if you put such a service in place, you explicitly want it to do something that you expect it to do, because otherwise there is no use to it.

So the question becomes which use cases will actually be improved by the solution you have. There are a lot of products out there, where it 100% makes sense to use generative AI – customer support agents, for instance, where you have a chatbot that answers 90% of the questions that most people ask, and just the last 10% remains for humans to address.

But in organizations in a more traditional industry, it’s more about efficiencies – how to make things bigger and better and more efficient. And there the question will be what in particular will be the use case for switching to use generative AI?

That’s going to be quite interesting, just to discover how the organization itself functions, and where technology has maybe missed out on really helping people understand how to make things better.
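The customer support example above, where a chatbot handles the common questions and humans take the rest, can be sketched as a simple routing rule. This is a minimal illustration under stated assumptions: `route`, `toy_bot`, the `Reply` type and the 0.8 confidence threshold are all hypothetical, and a real bot would need a better way of estimating confidence than a lookup table.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Reply:
    text: str
    handled_by: str  # "bot" or "human"


def route(
    question: str,
    bot: Callable[[str], Tuple[str, float]],
    threshold: float = 0.8,  # assumed cut-off; would be tuned in practice
) -> Reply:
    """Let the bot answer when it is confident, otherwise hand off to a person."""
    answer, confidence = bot(question)
    if confidence >= threshold:
        return Reply(answer, "bot")
    return Reply("A colleague will pick this up shortly.", "human")


def toy_bot(question: str) -> Tuple[str, float]:
    """Stand-in for a generative model: a tiny FAQ lookup with a made-up confidence."""
    faq = {
        "how do i reset my password": "Use the 'Forgot password' link on the login page.",
    }
    key = question.lower().strip(" ?")
    if key in faq:
        return faq[key], 0.95
    return "", 0.0


if __name__ == "__main__":
    for q in ["How do I reset my password?", "Why was my transfer flagged?"]:
        reply = route(q, toy_bot)
        print(f"{q} -> {reply.handled_by}: {reply.text}")
```

The split between bot and human then depends on where the threshold sits, which is the kind of thing an organization would have to test against its own questions.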

A misty vision of the future.

THQ:

That’s the thing, isn’t it? In terms of how we go forward, things are perversely a lot less clear now than they used to be.

DW:

Yeah. And this is basically what these technologies do. You have a very complex world out there. More complex than it’s ever been. These systems break down insane amounts of data for you, and give you the gist of it. As long as it’s right.

Unfortunately, we’ve played around quite a lot with ChatGPT, and sometimes it’s pure BS coming out of it, right?

THQ:

We always use a quote from someone who was making chatbots before it was cool to call them chatbots, who said that generative AI has no objective sense of truth, and so it can be “persuasively wrong.” And unless you know in which ways it’s wrong, you’d never necessarily spot it being wrong.

That’s always been the question at the heart of the matter. How do you get truth models for it?

What’s interesting is that the new, open-source versions you can get will be a lot less cumbersome to have to check through, because it’s focused data, dedicated data.

Getting the mixture right.

How do you see the mixture going forward? Between the big versions, ChatGPT, Bard and the like, and the smaller, more dedicated, more user-friendly versions?

DW:

I see two distinct branches, for at least the next two or three years.

Google, Microsoft and the multimillion-dollar club need to figure out how to monetize this if it’s ever going to repay their investment in it. Which depends on discovering the ways people are actually digesting the data, and the ways to make the interface simpler so it is adopted widely – like Spotify, maybe.

Those agents and assistants are already out there, and generative AI will be even more integrated into more than one interface, where you tell it “Do this, do that,” but it gives you more input options and more output for your effort.

And the other thing is that everything that has to do with optimizing an organization or optimizing the processes or integrating certain processes with each other, I would expect to be the first big step of this technology through the business world – technology that replaces the mundane work.

I think there will be movement in the job market that you can actually see, with some tasks becoming way more efficient than they have been so far. That’s how I would expect the next year and a half to go in the generative AI world.

THQ:

We’ll come back to you in eighteen months, and see how things actually went.

Dennis Winter of Solaris.