Airbnb for GPUs – decentralized IaaS for generative AI

Graphics processing units (GPUs) may have their origins in enriching the display of arcade game visuals, but today their parallel processing capabilities are in demand across a wide range of business applications. The popularity of OpenAI’s ChatGPT is driving large language model (LLM) integrations that could radically transform sectors such as the provision of legal services (Goldman Sachs estimates that more than two-fifths of legal tasks could be carried out by AI). And this race to capitalize on generative AI, which requires significant computing resources, has got developers thinking about various IaaS models, including so-called Airbnb for GPUs.

What is Airbnb for GPUs?

Cloud computing providers such as AWS, Microsoft Azure, Google Cloud Platform, Alibaba Cloud, IBM Cloud, and other major IaaS vendors make GPUs available for users to train, fine-tune, and run generative AI models. But the charges can soon add up for customers. OpenAI’s GPT-4, its most advanced commercially available LLM, can handle more than 25,000 words of text, generating time-saving summaries of long business documents. But these tasks are computationally expensive, given the complexity of the latest AI models.

Putting idle silicon to use: an image generated by a text-to-image model running on distributed computing resources.

However, just because services provided by major cloud computing vendors have become the common way for users to access large-scale GPU infrastructure doesn’t mean that other options don’t exist. And one of the most interesting alternative IaaS models is a decentralized computing approach dubbed Airbnb for GPUs.

In the same way that booking a property through an online rental platform gives tourists an alternative to hotels, Airbnb for GPUs uses distributed computing to make idle hardware available to paying users, often at a fraction of the cost of services offered by major cloud computing providers.

To picture the scene, it’s useful to think of successful citizen science initiatives such as Folding@home. Citizens volunteer computing time on their personal machines to run simulations of protein dynamics that help researchers to understand the behavior of diseases and develop treatments. Back in 2007, Sony updated its PlayStation software to enable console owners to participate. And over the next five years, more than 15 million PlayStation 3 users donated over 100 million computation hours.

“The PS3 system was a game changer for Folding@home, as it opened the door for new methods and new processors, eventually also leading to the use of GPUs,” commented Vijay Pande, Folding@home research lead at Stanford University, on Sony’s PlayStation blog. Folding@home and other distributed computing projects succeeded by pooling hardware that would otherwise have sat idle while its owners were at school, at work, or asleep. That success has inspired a variety of cloud alternatives based on an Airbnb for GPUs type model.

One of those is Q Blocks, which was founded in 2020 by Gaurav Vij and his brother Saurabh. The firm uses peer-to-peer technology to give customers access to crowdsourced supercomputers. And the company enables owners of gaming PCs, bitcoin mining rigs, and other networked GPU hardware to make money from idle processors by registering as hosts.

Host systems need to be running Ubuntu 18.04 and have a minimum of 16 GB of system RAM and 250 GB of free storage per GPU. There are various other prerequisites too, including that GPUs should be maintained 24/7. By sharing these resources, hosts help machine learning developers to build applications at a much lower cost.
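For prospective hosts, the stated requirements can be expressed as a simple pre-flight check. The sketch below is hypothetical and is not a Q Blocks tool: `HostSpec` and `check_host` are illustrative names, and because the requirement wording is ambiguous, it assumes the 16 GB RAM figure is a total minimum while the 250 GB storage figure scales with the number of GPUs. Requirements that can't be measured this way, such as keeping GPUs maintained 24/7, are left out.

```python
from dataclasses import dataclass

# Thresholds taken from the host requirements described in the article.
# Assumption: 16 GB RAM is a total minimum; 250 GB storage is per GPU.
REQUIRED_OS = "18.04"
MIN_RAM_GB = 16
MIN_FREE_STORAGE_GB_PER_GPU = 250


@dataclass
class HostSpec:
    """Hypothetical description of a machine offered as a GPU host."""
    ubuntu_version: str
    ram_gb: float
    free_storage_gb: float
    gpu_count: int


def check_host(spec: HostSpec) -> list[str]:
    """Return a list of unmet requirements (empty if the host qualifies)."""
    problems = []
    if spec.gpu_count < 1:
        problems.append("no GPUs detected")
    if spec.ubuntu_version != REQUIRED_OS:
        problems.append(f"Ubuntu {REQUIRED_OS} required, found {spec.ubuntu_version}")
    if spec.ram_gb < MIN_RAM_GB:
        problems.append(f"need at least {MIN_RAM_GB} GB RAM, found {spec.ram_gb} GB")
    # Storage requirement grows with the number of GPUs being shared.
    needed_storage = MIN_FREE_STORAGE_GB_PER_GPU * max(spec.gpu_count, 1)
    if spec.free_storage_gb < needed_storage:
        problems.append(
            f"need {needed_storage} GB free storage for {spec.gpu_count} GPU(s), "
            f"found {spec.free_storage_gb} GB"
        )
    return problems


if __name__ == "__main__":
    rig = HostSpec(ubuntu_version="18.04", ram_gb=32, free_storage_gb=400, gpu_count=2)
    print(check_host(rig))
```

For the two-GPU rig in the example, the check flags a single problem: under the per-GPU assumption it needs 500 GB of free storage but has only 400 GB.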

Gaurav Vij had witnessed firsthand how quickly AWS bills can add up, having bootstrapped a computer vision startup. And his brother, who had worked as a particle physicist at CERN – another beneficiary of volunteer computing through its LHC@home program – recommended that they team up to build an Airbnb for GPUs. By using Q Blocks’ distributed computing services, Vij’s startup cut its development costs by 90%.

More recently, the Q Blocks team has added a generative AI platform, dubbed Monster API, to its crowdsourced GPU offering, giving users affordable access to a wide range of generative AI models. Signing up to Monster API allows users to explore Stable Diffusion’s text-to-image and image-to-image capabilities. Other generative AI services include API access to Falcon 7B, an open-source alternative to ChatGPT.

Generative AI text-to-speech: Bark examples

Monster API also lets users carry out text-based image editing via InstructPix2Pix, transcribe speech using Whisper, and convert text to an audio file using the Suno AI Bark model. Bark alone, which has been described as ‘the ultimate audio generation model’, opens up loads of business use cases to explore. For example, generative AI text-to-speech makes it easy to produce multilingual content, music, background noises, sound effects, and non-verbal communications.

Suno.ai, which has a waitlist for its foundation models for generative audio AI, has published examples produced using Bark text-to-speech. And they are very impressive, so much so that this writer will be using Monster API to put the algorithm to the test.

How to use Monster API

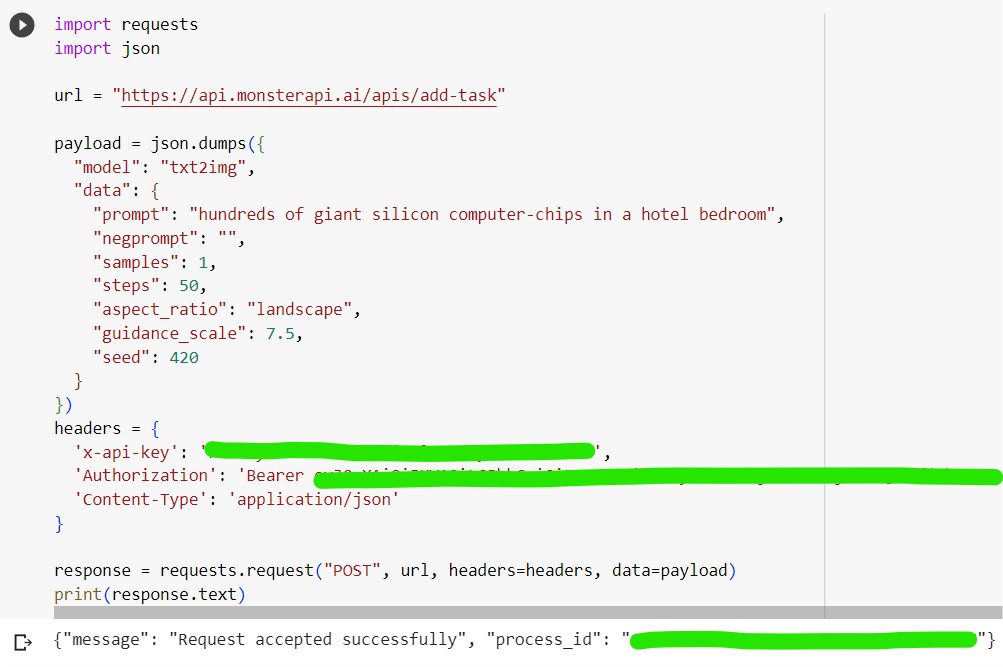

Signing up to Monster API gets you 2,500 free credits, and TechHQ used the distributed computing service to generate the images seen in this article (in our tests, each picture created in landscape mode used 7 credits). To access the services, you first need to generate an API key and bearer token and then copy and paste the code examples for the model that you want to use. In our case, we fired up Stable Diffusion’s text-to-image generation.
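As a rough guide to how far the free allowance goes, and assuming every image is generated in landscape mode at the 7 credits per image we observed (other settings or models may cost a different amount), the arithmetic works out as follows:

```latex
\left\lfloor \frac{2500\ \text{credits}}{7\ \text{credits/image}} \right\rfloor
  = \lfloor 357.14\ldots \rfloor = 357\ \text{images}
```

That leaves 1 credit unused, since $357 \times 7 = 2499$.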

Send request: using a Google Colab notebook to run a Python snippet that passes model parameters to a text-to-image generative AI model over Monster API.

Google Colab notebooks are a convenient way to run Python in a browser. Pasting the API key and bearer token details into the code, together with the text-to-image prompt and other model parameters, successfully sent a POST request to the distributed cloud compute service, which returned a process id.
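The request step can be sketched using only Python's standard library. This is a minimal illustration, not Monster API's documented interface: the endpoint URL is a placeholder, and the `x-api-key` header, the payload fields (`prompt`, `aspect_ratio`, `samples`) and the `process_id` response field are all assumptions. The real values should be copied from the code examples Monster API provides once you have generated your key and token.

```python
import json
import urllib.request

# Hypothetical placeholder endpoint: replace with the URL from the
# provider's own code examples.
GENERATE_URL = "https://api.example.com/v1/generate/txt2img"


def build_generation_request(api_key: str, bearer_token: str, prompt: str,
                             aspect_ratio: str = "landscape",
                             samples: int = 1) -> urllib.request.Request:
    """Build (but do not send) a POST request for text-to-image generation.

    Header names and payload fields are assumptions modelled on a typical
    REST API, not a documented schema.
    """
    payload = {
        "prompt": prompt,
        "aspect_ratio": aspect_ratio,  # assumed parameter name
        "samples": samples,            # assumed parameter name
    }
    headers = {
        "x-api-key": api_key,                       # assumed header name
        "Authorization": f"Bearer {bearer_token}",  # standard bearer-token header
        "Content-Type": "application/json",
        "Accept": "application/json",
    }
    return urllib.request.Request(
        GENERATE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def submit(request: urllib.request.Request) -> str:
    """Send the request and return the process id from the JSON response.

    Assumes the response body contains a "process_id" field.
    """
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["process_id"]
```

Building the request separately from sending it makes it easy to inspect the headers and payload before spending any credits. In a Colab cell, you would call `submit(build_generation_request(api_key, bearer_token, prompt))` and keep the returned id for the fetch step.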

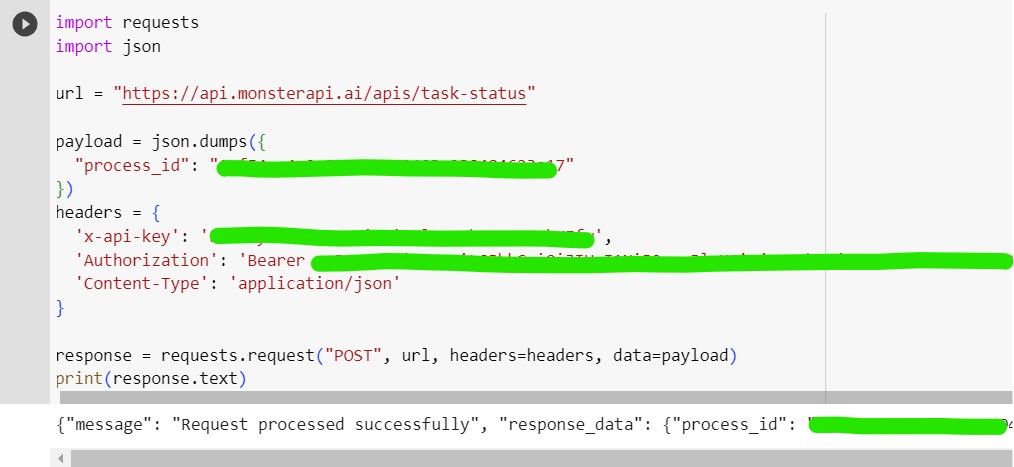

Collecting the image results: once you have the process id, you can issue a fetch request and download the generative AI model output.

The next step, retrieving the generative AI image, is to issue a fetch request, again using a snippet of Python code, that includes the process id as well as the API key and bearer token details. Running the Colab notebook cell returns a message saying that the fetch request was processed successfully, with the status shown as completed. The output includes a web link that lets the user access and download the text-to-image generative AI model result.
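Because a distributed job may still be running when you first check on it, it can help to wrap the fetch request in a polling loop. The sketch below is hypothetical: `wait_for_result` is an illustrative name, and the `status` and `output` field names, the `COMPLETED`/`FAILED` values, and the assumption that `output` is a list of result URLs are guesses to be checked against the real fetch response. The HTTP call itself is passed in as `fetch_status`, for example a small wrapper that sends a GET request with the process id, API key and bearer token.

```python
import time
from typing import Callable

# Assumed status values; check them against the real fetch response.
COMPLETED = "COMPLETED"
FAILED = "FAILED"


def wait_for_result(fetch_status: Callable[[str], dict], process_id: str,
                    poll_interval: float = 2.0, timeout: float = 120.0) -> str:
    """Poll until the job completes, then return the first output URL.

    `fetch_status` takes a process id and returns the decoded JSON status.
    The "status" and "output" field names are hypothetical.
    """
    deadline = time.monotonic() + timeout
    while True:
        status = fetch_status(process_id)
        state = str(status.get("status", "")).upper()
        if state == COMPLETED:
            return status["output"][0]  # assumed: list of result URLs
        if state == FAILED:
            raise RuntimeError(f"process {process_id} failed: {status}")
        if time.monotonic() >= deadline:
            raise TimeoutError(f"process {process_id} still {state or 'pending'}")
        time.sleep(poll_interval)  # back off before asking again
```

Keeping the HTTP call outside the loop means the polling logic can be tested without network access. Once a URL comes back, `urllib.request.urlretrieve(url, "result.png")` is one way to save the image locally.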

So, before committing to a mainstream cloud computing provider, you might, if the idea of Airbnb for GPUs appeals, consider a distributed, crowdsourced alternative. And the payback could be attractive if the business model works as promised.