ChatGPT screening: OpenAI text classifier versus GPTZero app

What used to take hours of painstaking research and crafting can now be generated in seconds. AI writing tools have put an end to writer’s block – no more staring at a blank screen, wondering what to type. In sales, marketing, and other business communications, AI-generated text and writing suggestions offer a productivity boost. And the uplift in email open rates, read time, and other metrics sells the benefits.

King of the hill, currently, is OpenAI’s wildly successful chatbot, ChatGPT. OpenAI’s text-completion API is also attracting a wave of product developers, allowing a variety of apps to easily harness the powerful capabilities of large language models such as GPT-3, which has a mind-blowing 175 billion parameters.

But as machines speed ahead, humankind has some catching up to do, and some experts worry that AI-generated text makes it too easy to spread misinformation. The OpenAI text classifier, released just a few days ago, provides an online tool for checking whether articles have been written by humans or generated using AI. However, it may provide little comfort – at least based on the testing carried out by TechHQ.

Putting the OpenAI text classifier to the test

To gauge how well AI content detectors can differentiate between human-written prose and machine-generated text, we fed a series of five different samples into the OpenAI text classifier. OpenAI cautions that its text classifier isn’t fully reliable in detecting AI content, particularly on shorter documents with fewer than 1,000 characters (200 – 250 words). All of the text samples tested against OpenAI’s screening tool were substantially longer than this lower limit.

Details on each of the test samples fed into the OpenAI text classifier are given below, together with the output results.

- Sample 1: news article from a publisher experimenting with using AI-writing tools as part of its content generation process.

‘The classifier considers the text to be possibly AI-generated.’

- Sample 2: news article from a publisher experimenting with using AI-writing tools as part of its content generation process.

‘The classifier considers the text to be unlikely AI-generated.’

- Sample 3: tech story generated by ChatGPT based on the prompt – ‘write an 800-word news item about telecommunications in the style of a human’.

‘The classifier considers the text to be possibly AI-generated.’

- Sample 4: 100% guaranteed human-written tech story published on TechHQ.

‘The classifier considers the text to be very unlikely AI-generated.’

- Sample 5: machine-generated email produced using an AI-powered sales enablement tool.

‘The classifier considers the text to be unclear if it is AI-generated.’

Analysing the results of AI content detection

It’s a small sample of results, but there are already trends that jump out. The OpenAI text classifier appears to lean towards cheerleading human-written content rather than calling out articles generated using a chatbot. OpenAI’s development team notes that the tool is configured to minimize the number of false positives – in other words, the number of times that human-written text is misclassified as being generated by AI. And these settings appear to make the OpenAI text classifier more cautious and less willing to point the finger, even for documents that are fully machine-generated, as was the case for sample 3.
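The trade-off behind that caution can be illustrated with a minimal Python sketch. It assumes a detector that outputs a probability that a text is AI-generated and maps it to one of the classifier’s five verdicts. The cut-off values, the example scores, and the function names `label_for` and `flag_rate` are hypothetical and chosen only for illustration; they are not OpenAI’s actual settings or code.

```python
from bisect import bisect_right
from typing import Sequence

# Hypothetical cut-offs on the detector's estimated probability that a text
# is AI-generated. Illustrative assumptions only, not OpenAI's settings.
CUTOFFS = [0.10, 0.40, 0.80, 0.95]
LABELS = [
    "very unlikely AI-generated",
    "unlikely AI-generated",
    "unclear if it is AI-generated",
    "possibly AI-generated",
    "likely AI-generated",
]


def label_for(probability: float) -> str:
    """Map a probability in [0, 1] to a verdict label."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    return LABELS[bisect_right(CUTOFFS, probability)]


def flag_rate(scores: Sequence[float], threshold: float) -> float:
    """Fraction of texts whose score meets or exceeds the threshold."""
    return sum(score >= threshold for score in scores) / len(scores)


# Made-up detector scores, for illustration only.
human_scores = [0.05, 0.20, 0.40, 0.60, 0.92]
ai_scores = [0.50, 0.70, 0.91, 0.95, 0.99]

for threshold in (0.50, 0.95):
    false_positive_rate = flag_rate(human_scores, threshold)
    detection_rate = flag_rate(ai_scores, threshold)
    print(
        f"threshold={threshold:.2f} "
        f"false positives={false_positive_rate:.0%} "
        f"AI texts caught={detection_rate:.0%}"
    )
```

With these made-up numbers, raising the threshold removes the false positives but also lets most of the machine-generated texts slip through, which is the pattern seen in the test results above.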

Our results using the OpenAI text classifier aren’t isolated. The Poynter Institute, a US-based not-for-profit promoting responsible journalism, put itself in the shoes of potential bad actors and created a fake news website in minutes using available AI tools. When examples of the machine-made content were fed into OpenAI’s classifier tool, the digital judge remained on the fence, only considering the text to be ‘possibly’ AI-generated.

“I’m always skeptical about tech freak-outs,” commented Alex Mahadevan, Director of Poynter’s MediaWise program. “But, in just a few hours, anyone with minimal coding ability and an axe to grind could launch networks of false local news sites — with plausible-but-fake news items, staff and editorial policies — using ChatGPT.”

GPTZero to the rescue?

At this point in the story, we could do with some good news. And it may arrive in the shape of GPTZero, an AI content detector developed by Edward Tian – a computer scientist studying at Princeton University, US. GPTZero – which debuted on the data app sharing platform, Streamlit, and can now be found at gptzero.me – produced some strikingly honest results when tested using our sample data. The AI content detector highlights passages of text considered to be more likely to have been generated by a machine than written by a human. And the screening tool provides two accompanying scores dubbed ‘perplexity’ and ‘burstiness’. According to Tian’s descriptions, perplexity is a measurement of the randomness of the input text, and burstiness indicates the variation in perplexity.
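To make the two scores more concrete, here is a minimal Python sketch based only on the descriptions above. It is not GPTZero’s method, which has not been published. As a stand-in for a large language model it uses a toy word-frequency (unigram) model with add-one smoothing, and it treats burstiness as the standard deviation of per-sentence perplexity; both choices, and all of the names in the code, are assumptions made for illustration.

```python
import math
import re
from collections import Counter
from statistics import pstdev


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def split_sentences(text):
    """Naive sentence splitter on '.', '!' and '?' (enough for a sketch)."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


class UnigramModel:
    """Toy stand-in for a language model: word counts with add-one smoothing."""

    def __init__(self, reference_text):
        self.counts = Counter(tokenize(reference_text))
        self.total = sum(self.counts.values())
        self.vocab_size = len(self.counts) + 1  # one extra slot for unseen words

    def probability(self, word):
        return (self.counts[word] + 1) / (self.total + self.vocab_size)


def perplexity(model, sentence):
    """Exponential of the average negative log-probability per token."""
    tokens = tokenize(sentence)
    if not tokens:
        raise ValueError("sentence has no word tokens")
    log_prob = sum(math.log(model.probability(t)) for t in tokens)
    return math.exp(-log_prob / len(tokens))


def burstiness(model, text):
    """Assumed definition: spread of perplexity across the text's sentences."""
    scores = [perplexity(model, s) for s in split_sentences(text) if tokenize(s)]
    return pstdev(scores)


# Usage: the model is "trained" on a tiny reference text, so sentences built
# from familiar words are predictable (low perplexity) and unfamiliar ones are not.
model = UnigramModel("the cat sat on the mat. the dog sat on the rug.")
uniform = "The cat sat on the mat. The dog sat on the rug."
varied = "The cat sat on the mat. Quantum zebras juggle marmalade."

print("uniform burstiness:", burstiness(model, uniform))
print("varied burstiness:", burstiness(model, varied))
```

In this toy setting, the text whose sentences are all equally predictable has no variation in perplexity, while the text that mixes a predictable sentence with a surprising one scores higher on burstiness.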

GPTZero isn’t perfect at differentiating AI-generated text from human prose – it can struggle if sentences are very short – but, in this writer’s opinion, it did a much better job than the OpenAI text classifier. The AI content detector doesn’t hold back in calling out text that it believes (based on perplexity and burstiness scores) to have been generated by a machine. Recall that OpenAI’s text classifier was ‘unclear’ on whether the sales enablement email (sample 5) had been generated by a human or an AI chatbot. In contrast, GPTZero cautioned that the sample text ‘may include parts written by AI’ and flagged suspect sentences in yellow.

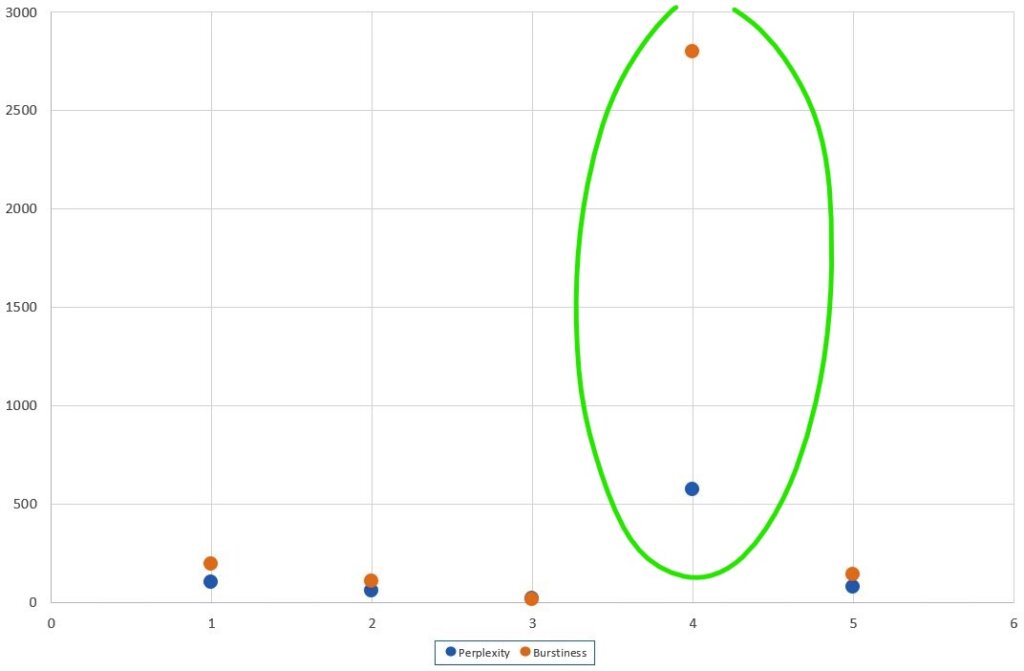

The internal workings of GPTZero are a mystery, but when our test scores are plotted on a graph (see below), it’s clear that human-written text is an outlier when comparing perplexity and burstiness scores for human versus machine. The observation could point to more predictable, more consistent behavior exhibited by AI-writing tools. After all, machine learning has a statistical basis and GPT-3’s autocompleting skills are guided by the statistically most likely candidates.

Human behaviour is reassuringly easy to spot, if you know what to look out for. Data: TechHQ.

Other AI content detectors – Writer.com and Copyleaks

Making predictions can be risky, but forecasting growth in AI content detection feels like a safe bet. In addition to the OpenAI text classifier and GPTZero, readers wanting a third or fourth opinion on whether web pages, or other documents, have been machine-generated can use Writer.com’s AI Content Detector, as well as the AI content detector offered by Copyleaks, which is currently available in beta.

When we ran our test samples through both the Writer.com and Copyleaks AI content detection tools, the screening software did an excellent job of correctly identifying that sample 3 had been created using AI (ChatGPT). However, both tools still attributed a portion of the text to a human writer, when in fact there had been a 0% human contribution, aside from the initial text prompt fed into the advanced AI chatbot.

The 100% guaranteed human-written tech story passed with flying colors and was classified as ‘human text’. But the danger with screening tools, as OpenAI warns, is that AI-written text can be edited to evade detection. Also, even GPTZero could struggle if chatbots learn how to mimic the ‘perplexity’ and ‘burstiness’ of human writers, which can’t be ruled out with GPT-3’s successor likely whirring away somewhere in the background.