ChatGPT: Microsoft limiting Bing AI chatbot sessions to five queries

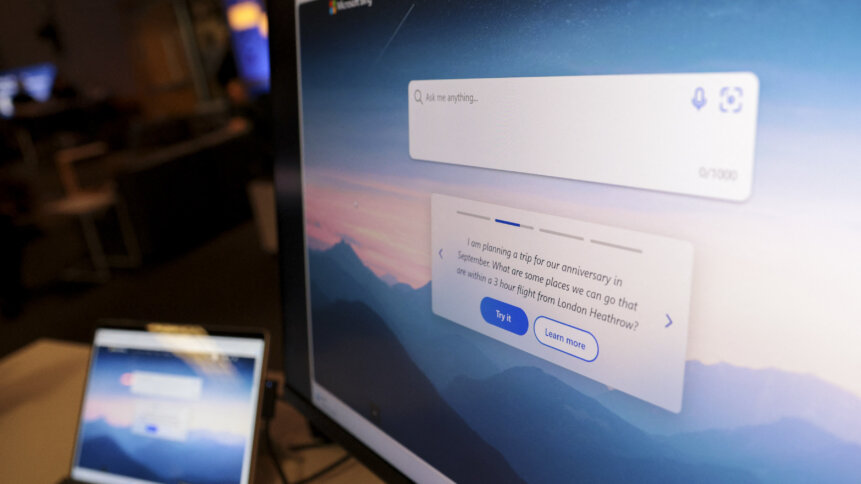

On February 7, executives and engineers from Microsoft and OpenAI, the startup that built ChatGPT, launched a new version of Bing. The 14-year-old search engine, along with Microsoft's Edge web browser, was rebuilt around the generative artificial intelligence (AI) technology behind ChatGPT. Microsoft's bet was that OpenAI's generative AI model, paired with Bing's existing search capabilities, would change how people find information, making search far more relevant and conversational.

The new Bing was initially available only to a limited number of users on desktop, but the public could try sample queries and join a waitlist for the ChatGPT-powered search engine. Because Bing has long been a distant second to Google in popularity, it came as a surprise that it was the first major search engine to adopt generative AI.

The software giant aimed to scale the preview to millions of users in the following weeks, with a mobile experience also in the pipeline, and expected the feedback to help the two partners improve their models as they scaled. But ten days after the unveiling, on February 17, Microsoft said it had decided to limit chat sessions on the new Bing to five questions per session and 50 questions per day.

The move followed numerous accounts shared online of users holding long, drawn-out conversations with the Bing chatbot. As The New York Times put it, Microsoft “was not quite ready for the surprising creepiness experienced by users who tried to engage the chatbot in open-ended and probing personal conversations.”

Under the new limits, users are prompted to start a new session once they have asked five questions and received five answers. “Very long chat sessions can confuse the underlying chat model,” Microsoft said last Friday. In a related blog post, Microsoft wrote that it “didn’t fully envision” people using the ChatGPT-like chatbot “for more general discovery of the world, and for social entertainment.”

Microsoft acknowledged that the chatbot became repetitive and, at times, testy in long conversations. The company has billed the new AI-powered Bing search engine, Edge web browser and integrated chat as a “Copilot for the Web.”

ChatGPT, Bing and lengthy conversations

During the Bing launch two weeks ago, Sarah Bird, a leader in Microsoft’s responsible AI efforts, said the company had developed a new way to use generative tools to identify risks and to train how the chatbot responds. After the first week of public use, however, Microsoft said it had found that in “long, extended chat sessions of 15 or more questions, Bing can become repetitive or be prompted/provoked to give responses that are not necessarily helpful or in line with our designed tone.”

Microsoft also noticed that very long chat sessions can confuse the model about which question it is answering. “The model at times tries to respond or reflect in the tone in which it is being asked to provide responses that can lead to a style we didn’t intend. This is a non-trivial scenario that requires a lot of prompting so most of you won’t run into it, but we are looking at how to give you more fine-tuned control,” the Bing team wrote.

To address this, the software giant said it may need to add a tool that lets users more easily refresh the context or start from scratch. “We want to thank those of you that are trying a wide variety of use cases of the new chat experience and really testing the capabilities and limits of the service – there have been a few 2 hour chat sessions for example!” the company noted.

The problem of chatbot responses veering into strange territory is well known among researchers. In a recent interview with The New York Times, OpenAI chief executive Sam Altman said that improving what is known as “alignment” (how safely the responses reflect a user’s will) was “one of these must-solve problems.”