Penetration testing – can ChatGPT help improve cybersecurity?

|

Getting your Trinity Audio player ready...

|

ChatGPT – the autocompleting wonder bot created by OpenAI, based on the GPT-3.5 large language model, and optimized for dialogue – has taken the world by storm. Every day, users are finding new applications for the AI-powered text generator. Microsoft, which has reportedly already invested $1 billion in OpenAI, is – according to Bloomberg – preparing to pump a further $10 billion into the company. And the computing giant is said to have plans to integrate ChatGPT into its Bing browser. But some have concerns that ChatGPT could be a threat to cybersecurity. However, those fears do come with an upside when it comes to penetration testing.

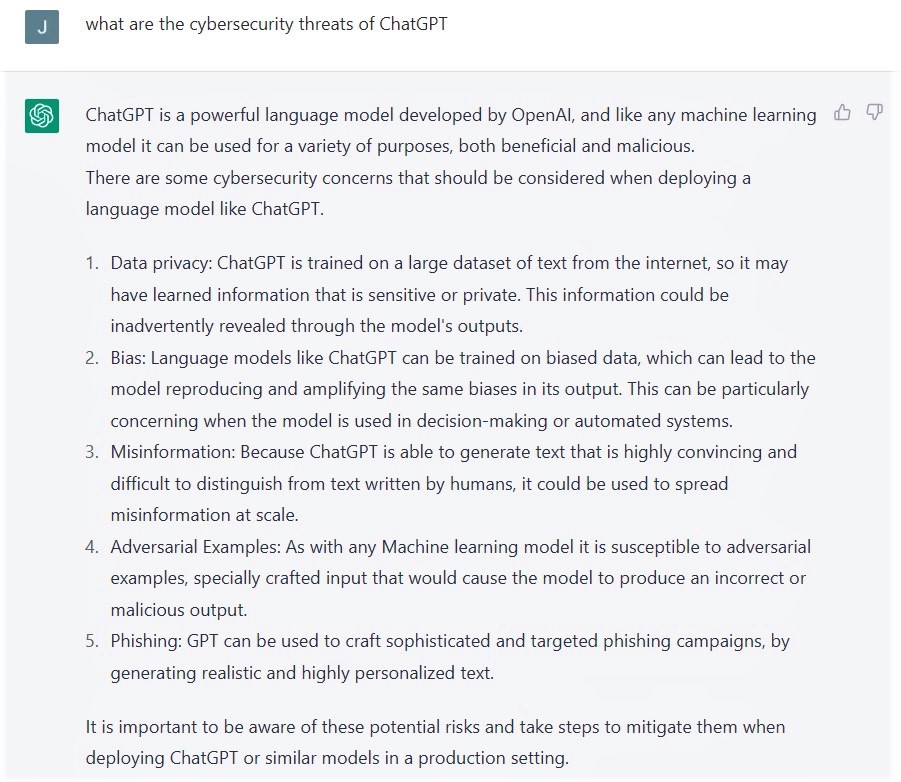

Cybersecurity threats of ChatGPT

ChatGPT makes clear the cybersecurity risks that it may pose, or at least some of them, when asked. And the advanced chatbot breaks the dangers down into five sections:

- Data privacy

- Bias

- Misinformation

- Adversarial examples

- Phishing

Given the vast size of its training data, it’s possible that ChatGPT has learned sensitive or private details that could, in theory, be extracted using the right prompts. Fears of bias and misinformation apply to browsing the web in general, and – like the internet – ChatGPT contains information on how to do not just good things, but also bad. But unlike using a search engine, where bad actors may have to dig around, and dive a few links deep to extract that knowledge, OpenAI will jump straight to the answer, outputting the text in its answer box.

Open to questions: ChatGPT is happy to admit its potential cybersecurity risks, when asked.

Much of the cybersecurity concerns surrounding ChatGPT have focused on its potential to write malicious code. But this may have been overblown. Users with no coding experience will likely struggle to identify what they’ve been given. And then they’d still need to be able to navigate their way around a computer to deploy it. On the target side, behavioural signals may catch the malware, even if end-point signatures missed the variant.

What is more concerning to cybersecurity experts is ChatGPT’s potential to craft phishing emails and other malicious correspondence. “A lot of the flags that are used right now, such as poor grammar – those red flags disappear [with ChatGPT],” Karl Sigler, Threat Intelligence Manager at Trustwave Spiderlabs, told TechHQ. “We’ll see a lot of bizarre things happening once ChatGPT starts to improve itself.”

There’s still much that the security community has to discover about the underlying logic of ChatGPT. OpenAI provides an API that allows commercial users to integrate ChatGPT with their products. Typically, if a developer builds something, they have a pretty good grasp of how the system works. But AI is a black box. You can match inputs to outputs, but how the algorithm goes about processing that data is often a mystery. Potentially, ChatGPT could be tricked with a malicious prompt in such a way that puts a company’s assets at risk.

ChatGPT as a penetration testing tool

Penetration testing is all about probing systems, with permission, to identify security vulnerabilities. And pen testers have a sometimes weird and wonderful array of methods that they like to use to expose security faults in clients’ IT systems and other devices. This raises the question, can ChatGPT be used as a penetration testing tool? Here, the novelty of the Chatbot works in favour of cybersecurity.

Potential penetration testing uses of ChatGPT, include generating a list of plausible passwords for automated tooling to carry out brute force testing of login pages, and related user interfaces. OpenAI’s advanced chatbot has the potential to be a giant security manual, cutting down the time that pen testers have to spend searching for details.

As mentioned, the ability of ChatGPT to write compelling phishing emails can be flipped around and used to raise cybersecurity awareness. IT security trainers now have a rich source of examples to educate their clients. And pen testers can potentially make use of them too. For example, the capacity of advanced chatbots to assist in social engineering can be a positive, in white hat campaigns.

Improved security posture

Fixing weaknesses in operating procedures before adversaries discover them will raise the security posture of an organization. The results will guide managers to which parts of a company need to be more alert to bad actors on the hunt for passwords and other sensitive account details.

Used proactively, ChatGPT – depending on its availability (the current version is accessible as a free research preview) – could help to level the security playing field. It gives anyone with access, the ability to ask about mitigating cyberattacks. And find out more about good cyber hygiene and deploying cybersecurity defences to IT systems.

“Being able to ask questions is beneficial for all,” concludes Sigler.