Is the ‘single source of truth’ for data impossible?

Companies are on a mission to manage their data. Of course they are… this is the age of the data-driven business, the era of the cloud-native application and the generation of workers who expect real-time, immediate, ubiquitous access to information. This core truism has led many firms to pursue an information management mission designed to deliver what the IT industry likes to call a ‘single source of truth’.

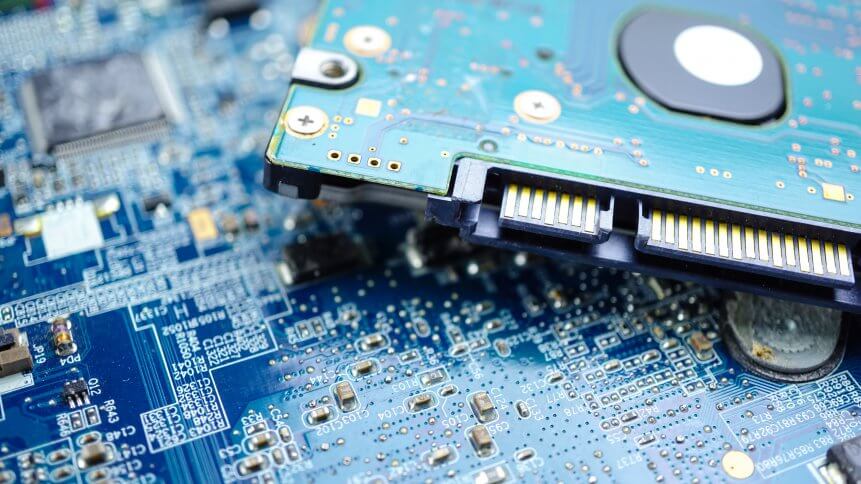

The centralized data model

The single source of truth seeks to create a centralized data model, one where information is held on-premises using ‘terrestrial’ servers running private clouds, in a public cloud datacenter, or in some hybrid combination of the two.

Highly trained data experts and information management specialists look after the single source of truth, making sure the organization’s core data repository is up to date, valid, deduplicated, parsed into appropriate structures and sometimes amplified with additional value based on the algorithmic logic that underpins the company’s business model.

The idea here is one that centers around control. We know where all our information is, we know who needs it, we know when they need it… and we know what level of sensitivity it has, so we can apply the appropriate levels of security provisioning and access policy controls.

Looking into the cooking pot

Centralized data management also allows us to know what ‘shape’ our data estate is, because we can pretty much open the cooking pot and see how many ingredients we have bubbling around at any given point in time.

Even if we have channels of unstructured data filling up our ‘data lake’, we know how big a storage tier we need in order to manage that flow.

Detailed knowledge of the data estate also means we can scale more accurately when we need to.

We usually scale upwards, but we sometimes scale downwards depending on various divestment actions and other corporate strategies. You can’t build an extension on your house until you know how big your south-facing wall is; the analogy is that straightforward.

The centralized data model that strives to create a single source of truth for the business has obvious benefits: you know where stuff is, how much stuff you have and how that stuff is behaving. The ‘stuff’ in this case is information in various forms of data, obviously.

The ‘we know where our stuff is’ factor is great for regulatory compliance, governance and all-round robust reporting. This is a happy place for the data manager who wants to keep his/her house in order.

Decentralization realization

But as happy-ever-after as data centralization sounds, it’s not always fully feasible in a world where mobility reigns, compartmentalization is spiraling (of technology, and of people) and the Internet of Things (IoT) has pushed some of our data out to the ‘edge’, i.e. onto the device itself.

Let’s take the IoT first. The first wave of ‘smart’ devices for homes and factories wasn’t that smart. These devices often needed to perform a connection ‘handshake’ back to the datacenter before they could perform anything resembling a meaningful task.

That trend has been bucked. We now require the IoT to perform more of its data analytics ‘on-device’ out at the so-called computing edge.

This is great for immediacy and it allows devices to perform much faster real-time functions so that they can interact with the people and other machines around them. This is not so great for the centralized data model, because some computing is happening outside of the mothership.

These devices will ultimately share their calculations back to the datacenter, but they may not do so on the timescale that the data engineers would like. The single source of truth is still there, but it may not be wholly accurate until those updates have arrived.
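To make that lag concrete, here is a minimal sketch of a central store that records when each edge device last reported in and flags the ones whose data has gone stale. The `CentralStore` class, the device names and the 60-second threshold are all hypothetical, chosen purely for illustration; a real deployment would have its own sync mechanism and tolerances.

```python
from dataclasses import dataclass, field


@dataclass
class CentralStore:
    """Hypothetical central repository that notes when each device last synced."""
    max_age_s: float  # assumed tolerance before a reading counts as stale
    readings: dict = field(default_factory=dict)  # device_id -> (value, synced_at)

    def sync(self, device_id: str, value: float, now: float) -> None:
        # An edge device pushes its locally computed result back to the core.
        self.readings[device_id] = (value, now)

    def stale_devices(self, now: float) -> list:
        # Devices whose last update is older than the tolerance.
        return sorted(
            device for device, (_, synced_at) in self.readings.items()
            if now - synced_at > self.max_age_s
        )


store = CentralStore(max_age_s=60.0)
store.sync("thermostat-1", 21.5, now=0.0)
store.sync("pump-7", 3.2, now=50.0)

# At t=90s the thermostat reading is 90s old, the pump reading only 40s old.
print(store.stale_devices(now=90.0))  # ['thermostat-1']
```

The central copy still exists in this picture; it simply carries a timestamp that tells the data team how far behind the edge it might be.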

Compartmentalization situations

A similar level of detachment is happening as a result of compartmentalization. Cloud technology is benefiting from compartmentalization in the form of containers and microservices, but we’re not talking about that directly in this instance.

Compartmentalization may also occur on a departmental or team-based level where individual business units take a snapshot of a dataset from the core data repository and work on it as an experimental project.

Again, the single source of truth is still there, but now it has an R&D adjunct that may or may not wish to be integrated back to the core, depending upon the success of the project or team in question.
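As a rough illustration of what that adjunct looks like in practice, the sketch below compares a team’s working snapshot against the core repository to show how far it has drifted. The `diff_snapshot` function and the customer records are hypothetical, and both datasets are assumed to be simple key-to-value mappings.

```python
def diff_snapshot(core: dict, snapshot: dict) -> dict:
    """Compare a team's working copy against the core repository, key by key."""
    return {
        "added": sorted(k for k in snapshot if k not in core),
        "removed": sorted(k for k in core if k not in snapshot),
        "changed": sorted(
            k for k in snapshot if k in core and snapshot[k] != core[k]
        ),
    }


# Hypothetical core records and an experimental copy taken by one team.
core = {"cust-1": "London", "cust-2": "Paris", "cust-3": "Berlin"}
snapshot = dict(core)
snapshot["cust-2"] = "Lyon"    # edited in the experiment
snapshot["cust-4"] = "Madrid"  # created in the experiment
del snapshot["cust-3"]         # dropped in the experiment

report = diff_snapshot(core, snapshot)
print(report)
# {'added': ['cust-4'], 'removed': ['cust-3'], 'changed': ['cust-2']}
```

A report like this gives the core data team something to review if the project succeeds and its changes are put forward for integration.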

Multiple views, or lose

These trends are quite real and they do bring benefits to organizations working in fast-moving markets that need to experiment and explore new performance structures based on what might be quite esoteric approaches… all of which may be underpinned by unconventional data management behavior.

The best way to cope with the new reality of the single sources (plural) of data that any organization will now have to deal with is to look for ways to manage, report on and further compute with multiple views of data. Data visualization firms are being bought by the industry behemoths and those that remain independent concerns are coming of age.

You can still search for the single source of truth; just remember that it could be a more multi-layered fabric going forward.