Google AI is a Google lie

- Google’s AI offering, Gemini, appears to be the most advanced on the market – but is it really?

- Google is somewhat transparent that the video of Gemini AI doesn’t represent reality. At all.

- The Gemini hit comes after Google’s loss to Epic Games in a lawsuit dating from 2020.

Google has had better weeks. First, a court ruled against it in a case brought by Epic Games over the Google Play Store.

Tech giant beats super-duper boss level tech giant. Appears pleased about it.

Then, Google had to admit that a video showcasing Google AI capabilities was edited; besides anything else, it’s pretty embarrassing to get caught cheating in the AI race. If it happened to John Doe Search Engines Ltd, it might be considered fraudulent representation.

The Gemini demo, which has over two million views, shows Google AI responding in real time to spoken prompts and video. In the video’s description, Google was transparent about the fact that the clip had been edited to make responses seem faster. That’s justifiable, in the TikTok age, when “steps removed for presentation” is an acceptable and understood technique for not boring your audience.

A blog post was uploaded at the same time as the video, making it possible to argue Google was being transparent about the process. That’s a useful line to have – but how many of the 2.3 million viewers keenly follow Google’s blog?

Although Google has been honest, a conscious decision will have been made not to include the full disclaimer on YouTube, where most people will encounter the video. To assume otherwise is to assume that multi-billion dollar companies like Google are practically incompetent about things that could damage their reputation.

The video that “highlights some of [Google’s] favorite interactions with Gemini” gives the impression that the AI responds to casual questioning as a human could. In practice, getting truly lucid replies from generative AI often requires human input in the form of clear, leading questions.

Too good to be true? Absolutely. Google confirmed to the BBC that the video was in fact made by prompting the AI “using still image frames from the footage, and prompting via text.”

“Our Hands on with Gemini demo video shows real prompts and outputs from Gemini,” said a Google spokesperson.

“We made it to showcase the range of Gemini’s capabilities and to inspire developers.”

So, what can Gemini, the Google AI offering, actually do?

At the start of the video, the bot is asked if a rubber duck could float. It replies “I’m not sure what material it’s made of, but it looks like it might be rubber or plastic.”

The duck is squeezed, squeaks, and with that Gemini ascertains that yes, in fact, it would float.

In reality, a still image of the duck was shown to the AI. It was then fed a text prompt explaining that the duck makes a squeaking sound when squeezed, at which point the bot correctly identified that it would float.

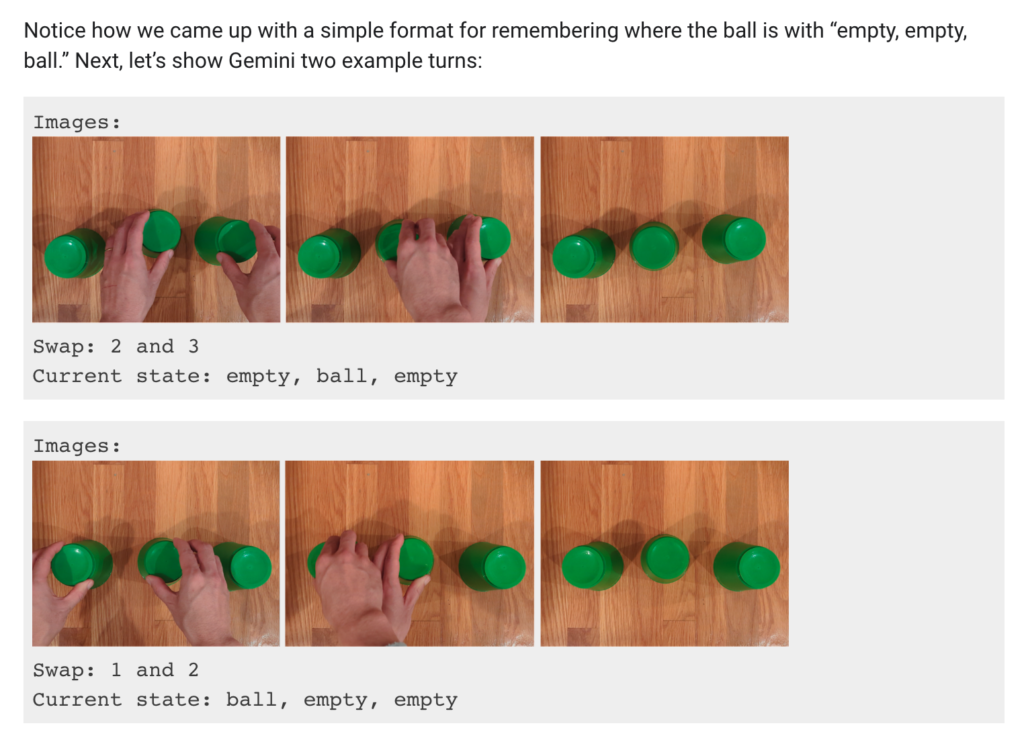

More impressively, a cup and balls routine is performed in the video and Gemini correctly guesses where the ball ends up. The video suggests AI really might be ready to take over and destroy us all, albeit through the medium of birthday party conjuring tricks. Be honest, those are deadly enough on their own.

But the AI wasn’t actually responding to a video. Google explains in its blog post that it told the AI where the ball was underneath three cups, then showed it images representing the cups being switched.

Like teaching a very young child. Possibly even a stupid one…

The company clarified that the demo was created by capturing footage from the video in order to “test Gemini’s capabilities on a wide range of challenges.”

In another segment, Gemini is presented with a world map and asked to come up with a game idea “based on what you see” and to use emojis. The AI responds by apparently inventing a game called “guess the country,” in which it gives clues (such as a kangaroo and a koala) and responds when the user guesses correctly by pointing at a country (in this case, Australia).

In fact, the AI didn’t invent the game at all. Remember those leading questions that chatbots seem to require? It was given the following instructions: “Let’s play a game. Think of a country and give me a clue. The clue must be specific enough that there is only one correct country. I will try pointing at the country on a map.”

From there, the Google AI offering was able to generate clues and respond to answers based on stills of a hand pointing at a map.

In fairness, the video’s voiceover is read directly from the written prompts fed into Gemini, and the AI is impressive. However, the abilities it actually has are very similar to those of OpenAI’s GPT-4. It seems as though, in an effort to outdo Microsoft-backed OpenAI, Google stretched the truth.

It’s unclear which of the two is actually more advanced, and the recent chaos in the AI industry hasn’t helped. Sam Altman did tell the Financial Times that OpenAI is already working on its next chatbot, which has given Google a scare.

Here’s the video, if you’re curious. Misleading or just over-ambitious?