- Facial recognition lookup flawed by poor training

- FBI law enforcement officers rely on biased data

- False arrests and mistaken identities common

Expertise in facial recognition requires a deep understanding of the technology that underpins it: learning models, bias and how to mitigate it, and, perhaps most crucially, the algorithms’ failings and the false positives that result. Yet, according to reports in Wired, only five of the 200 FBI agents with access to the Bureau’s internal facial recognition facilities have received any training in the technology. Furthermore, a report from the GAO (Government Accountability Office) states that the training itself is only a three-day course, barely enough to scratch the surface of this complex and nuanced subject.

The FBI currently uses two facial recognition lookup systems: Thorn, developed by a non-profit to combat sex trafficking and abuse, and Clearview AI. The model behind the latter was trained on images scraped from the public internet. Despite the Bureau’s widespread use of the technology, no laws exist in the US that require officers of any rank to be trained before using facial recognition systems.

The GAO report notes that in 2022, DOJ (Department of Justice) officials considered updating the department’s policies to allow applications for search warrants solely on the basis of a facial recognition ‘hit.’ No such plans have come to light since.

The author of the GAO report, Gretta Goodwin, Director of Justice and Homeland Security, has also said in emails that she has found no evidence of a wrongful arrest by any federal law enforcement body. Yet since the high-profile wrongful arrest of Robert Williams in 2020, state and local forces across the US have had access to facial recognition technology that aids them in daily policing. This has led to several mistakes caused by false positives from the facial recognition systems used by several police departments.

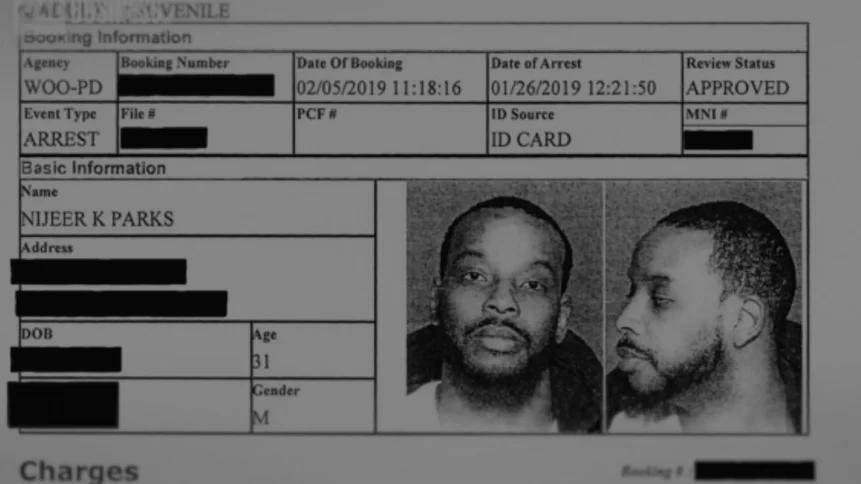

Among the first people whose face was flagged for potential criminal activity was Nijeer Parks, who was arrested in 2019. He spent nine days in jail and a year trying to clear his name before the authorities relented. Parks, Nigel Oliver (similarly misidentified around the same time and area), and Williams are all Black.

Williams has spoken publicly about his ordeal and the detrimental effects the events have had on him, his family and friends. Williams’s daughters were particularly traumatized by the sight of their father being arrested and taken away from their front lawn, Wired reported at the time.

Facial recognition lookup’s race bias

According to a NIST report, misidentification by facial recognition technology particularly affects Black people, Asian Americans, and darker-skinned women. The racial and skin-tone makeup of the images used to train a model affects outcomes once a recognition system is put into production. In short, feed an AI only a few pictures of non-whites, and the resulting system isn’t very good at recognizing non-whites.
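One way to make this kind of disparity visible is to measure the false match rate separately for each demographic group. The sketch below is illustrative only: the function name, the group labels, and the trial data are all made up for the example and do not come from NIST or from any system the FBI uses.

```python
from collections import defaultdict

def false_match_rate_by_group(trials):
    """Return the share of different-person comparisons wrongly called a match.

    trials: iterable of (group, predicted_match, actual_match) tuples.
    The tuple layout is an assumption made for this sketch.
    """
    non_matches = defaultdict(int)    # comparisons where the faces are different people
    false_matches = defaultdict(int)  # of those, comparisons the system called a match
    for group, predicted_match, actual_match in trials:
        if not actual_match:
            non_matches[group] += 1
            if predicted_match:
                false_matches[group] += 1
    return {g: false_matches[g] / n for g, n in non_matches.items()}

# Made-up trials for illustration, not real benchmark data.
trials = [
    ("group_a", False, False), ("group_a", False, False),
    ("group_a", True, False), ("group_a", False, False),
    ("group_b", True, False), ("group_b", True, False),
    ("group_b", False, False), ("group_b", True, True),
]
rates = false_match_rate_by_group(trials)
for group, rate in rates.items():
    print(f"{group}: false match rate {rate:.2f}")
```

A system whose rates differ widely between groups will produce more mistaken "hits" for some people than for others, even when its overall accuracy looks high.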

The bias of facial recognition lookups reflects bias in the American judicial system. Research from the Pew Research Center, published in 2020, states that “The racial and ethnic makeup of US prisons continues to look substantially different from the demographics of the country as a whole. In 2018, black Americans represented 33% of the sentenced prison population, nearly triple their 12% share of the US adult population. Whites accounted for 30% of prisoners, about half their 63% share of the adult population. Hispanics accounted for 23% of inmates, compared with 16% of the adult population.”
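The comparisons in the Pew passage can be checked with simple arithmetic, dividing each group's share of the prison population by its share of the adult population. The snippet below is a minimal sketch that uses only the percentages quoted above; the variable names are arbitrary.

```python
# Percentages quoted from the Pew Research Center passage (2018 figures):
# (share of sentenced prison population, share of US adult population)
shares = {
    "Black": (33, 12),
    "White": (30, 63),
    "Hispanic": (23, 16),
}

# A ratio above 1 means the group is over-represented in prison
# relative to its share of the adult population.
ratios = {group: prison / adult for group, (prison, adult) in shares.items()}

for group, ratio in ratios.items():
    print(f"{group}: {ratio:.2f}x")
```

For Black Americans the ratio is 33 / 12 = 2.75, which is the “nearly triple” figure in the quote.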

Unless either the algorithms or the training corpora of facial recognition systems are corrected, ill-trained or untrained law officers at all levels in the US will reinforce the bias embedded in the training data, and reflect or even exaggerate the existing bias in America’s criminal justice system. “While their rate of imprisonment has decreased the most in recent years, Black Americans remain far more likely than their Hispanic and white counterparts to be in prison,” the Pew research also states.

This situation is one in which correctly executed data science can actively promote racial equality and reduce bias. Untrained law enforcement officers using software that wrongly skews query results is a combination that besmirches the American justice system further and says little positive about the technologists who supply it.
