Here’s the latest: AI income, regulation and infighting

• Latest developments include watermarking AI-generated art.

• A corporate version of ChatGPT is coming.

• Senator Chuck Schumer will chair the latest summit of high-stakes AI players.

With the number of headlines on the subject, we thought we’d round up some of the latest AI changes in the tech world.

This month, OpenAI launched a corporate version of ChatGPT as the company nears $1 billion in annual sales, a milestone so unsurprising it’s almost not newsworthy.

Backed by Microsoft, OpenAI is earning a monthly revenue of around $80 million, according to a person briefed on the matter who asked to speak anonymously.

Since debuting its bot in November 2022, OpenAI has worked with companies from fledgling firms to major corporations to incorporate the technology into their businesses and products. ChatGPT is considered among the first genuine launches of generative AI.

The rollout of ChatGPT Enterprise, which has added features and privacy safeguards, is a step in OpenAI’s plans for monetizing the ubiquitous chatbot. ChatGPT, for all its popularity, is hugely expensive to operate.

As part of the revenue push, there are also options for a premium subscription to ChatGPT, and for paid access to its application programming interface, which developers can use to add the chatbot to other apps.

Having observed the success of ChatGPT, tech companies and startups globally set about working on their own generative AI offerings. The result has been a slew of machine-generated content of varying levels of reliability.

The unreliability of much chatbot output has struggled to gain recognition, because the presumption that robot = hyperintelligent overlord has lent unwarranted credibility to AI-generated answers.

Alongside chatbots, AI-generated images have entered the arena. Initially, the only debate this triggered was about art: would human creativity be left by the wayside given the speed at which a bot could “paint”?

Yes, it’s AI-generated…but is it art?

Arguably an art form in their own right, the images that generative AI initially produced were far from realistic. Yet, as the technology evolves, it’s becoming increasingly difficult to tell the difference between real images and artificially generated ones.

BBC Bitesize has a quiz demonstrating this.

Besides the issue of copyright and ownership, online image search results are increasingly saturated with AI-generated “photos.” False images of celebrities and political figures have also inundated social media, and earlier this year Amnesty International came under fire after using AI-generated images in reports.

Latest Google solution to AI art.

Now, Google is trialling a solution.

Developed by DeepMind, the AI arm of the tech giant, SynthID will identify images generated by machines. To do so, it embeds changes to individual pixels in images – this creates a watermark invisible to the human eye, but detectable by computers.
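To make the idea of a pixel-level watermark concrete, here is a toy sketch. It is not SynthID, whose method is not described here; it assumes a simple least-significant-bit scheme, and the names `embed` and `extract` are hypothetical. Each pixel value changes by at most 1, which is invisible to a viewer but easy for software to read back.

```python
def embed(pixels, bits):
    """Hide each watermark bit in the least significant bit of one pixel."""
    marked = list(pixels)
    for i, bit in enumerate(bits):
        # Clear the lowest bit, then set it to the watermark bit.
        marked[i] = (marked[i] & ~1) | bit
    return marked


def extract(pixels, n):
    """Read back the lowest bit of the first n pixels."""
    return [p & 1 for p in pixels[:n]]


# Illustrative grayscale pixel values (0-255) and an 8-bit mark; both are made up.
image = [200, 201, 57, 58, 120, 121, 33, 34]
mark = [1, 0, 1, 1, 0, 0, 1, 0]

marked = embed(image, mark)
recovered = extract(marked, len(mark))
max_change = max(abs(a - b) for a, b in zip(image, marked))
```

A scheme this simple would not survive resizing or recompression, so it should be read only as an illustration of "invisible to the eye, detectable by computers", not of the robustness DeepMind claims.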

Watermarks are commonplace enough – often a logo or text is added to an image to make it harder to copy and use without permission. The issue with this is that they can be relatively easily cropped or edited out.

There’s also a method called hashing, used by tech companies to create digital fingerprints of certain content. These too can be corrupted.
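A minimal sketch of why a fingerprint can be corrupted, assuming an exact cryptographic hash (SHA-256) over raw pixel bytes. This is an assumption for illustration: production systems often use perceptual hashes that tolerate some edits, which this sketch does not model. The `fingerprint` helper and the pixel values are hypothetical.

```python
import hashlib


def fingerprint(pixels: bytes) -> str:
    """Return a hex digest that acts as the content's digital fingerprint."""
    return hashlib.sha256(pixels).hexdigest()


# Sixteen made-up grayscale pixel values standing in for an image.
original = bytes([10, 20, 30, 40] * 4)

# Nudge a single pixel by one brightness level.
edited = bytearray(original)
edited[0] += 1
edited = bytes(edited)

unchanged_copy_matches = fingerprint(original) == fingerprint(bytes(original))
edited_copy_matches = fingerprint(original) == fingerprint(edited)
```

An identical copy produces the same fingerprint, while the one-pixel edit produces a different one, so a lookup against the original fingerprint would miss the edited image.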

Detecting Google’s watermarks, and so telling whether an image is real or not, will require the company’s own software. DeepMind says it’s not “foolproof against extreme image manipulation.”

Pushmeet Kohli, head of research at DeepMind, told the BBC its system modifies images so subtly “that to you and me, to a human, it does not change.”

He said that, unlike with hashing, the firm’s software can still identify the watermark after the image has been cropped or edited.

When is a cow not really a cow? Ask Google.

“You can change the colour, you can change the contrast, you can even resize it… [and DeepMind] will still be able to see that it is AI-generated,” he said.

But he cautioned this is an “experimental launch” of the system, and the company needs people to use it to learn more about how robust it is.

In July, Google was one of seven leading AI companies to sign an agreement to ensure the safe development and use of AI, which included an assurance that watermarks would be implemented so that people can identify artificial images.

Supposedly, the move by Google is in keeping with this agreement, although some say more needs to be done.

Claire Leibowicz, from campaign group Partnership on AI, said there needs to be more coordination between businesses.

“I think standardization would be helpful for the field,” she said.

“There are different methods being pursued, we need to monitor their impact – how can we get better reporting on which are working and to what end?

“Lots of institutions are exploring different methods, which adds to degrees of complexity, as our information ecosystem relies on different methods for interpreting and disclaiming whether the content is AI-generated,” she said.

Schumer to chair latest attempt to achieve AI consensus

Addressing AI concerns further, on September 13 Senator Chuck Schumer is bringing together several technology industry chiefs to discuss the ramifications of artificial intelligence.

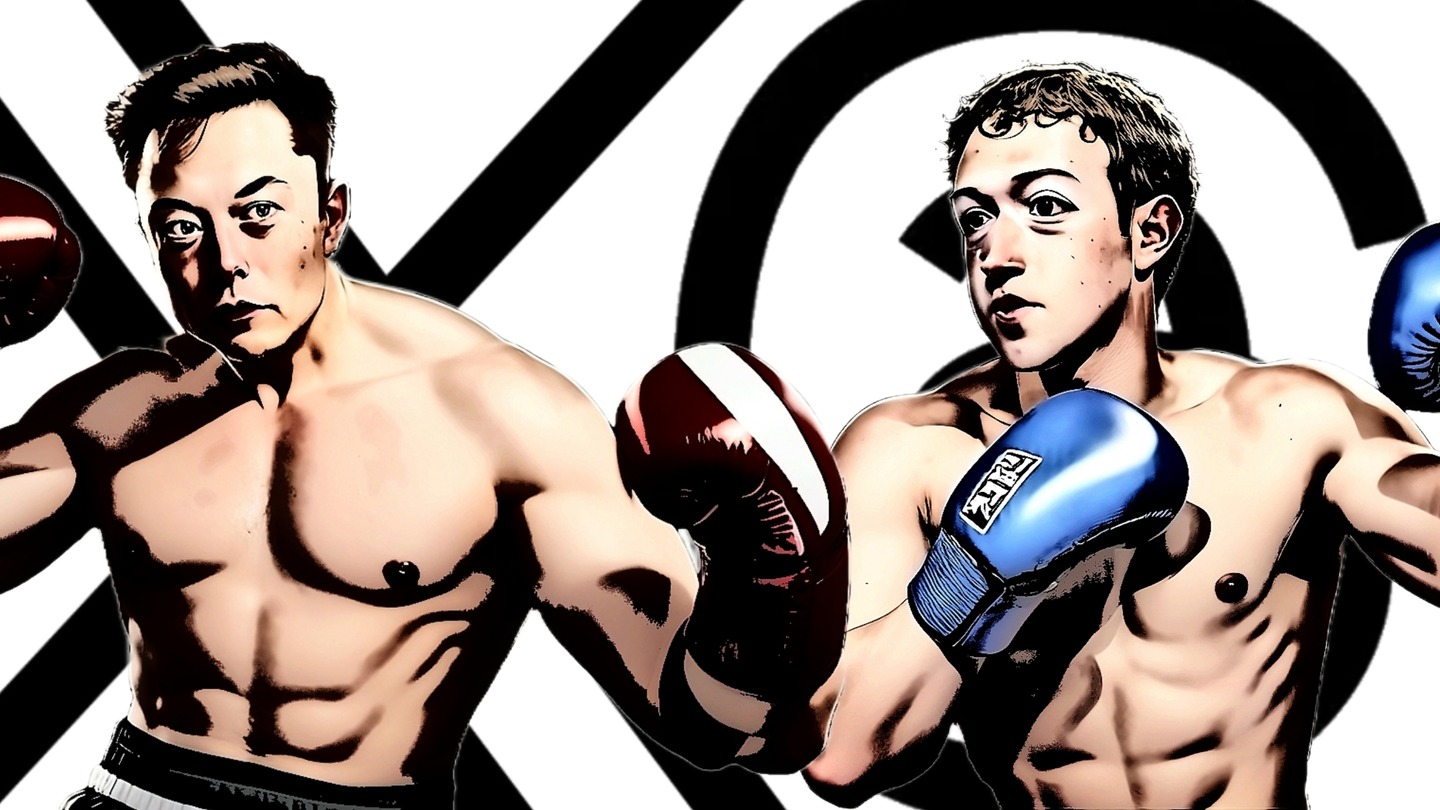

It’s possible that fights will break out, as both Elon Musk and Mark Zuckerberg plan to attend.

I'm telling my kids this was the Musk vs. Zuckerberg fight. pic.twitter.com/MCZJfe3wZU

— Ben Swann (@BenSwann_) August 23, 2023

The rivalry between the two CEOs received renewed attention this summer with reports of a cage fighting match between them.

“No biting, no gouging, no firing your content moderation staff in an election year…”

Others attending the meeting are Sundar Pichai, the chief executive officer of Alphabet Inc.’s Google; Microsoft Corp. CEO Satya Nadella; Nvidia Corp. co-founder Jensen Huang; and former Google CEO Eric Schmidt, according to Schumer’s office.

In June, Schumer rolled out a policy framework to aid lawmakers as they begin regulating AI. In a speech, he said Congress should promote American innovation while protecting consumers from dangers posed by the technology.