- Customer service chatbots are usually ‘rule-based’ rather than AI-powered

- But this is changing, as some companies have integrated ChatGPT

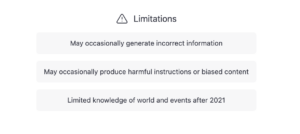

- This is risky, as they are still susceptible to bias and hallucinations

Chatbots are an increasingly familiar part of 21st-century life. Visit any e-commerce website and you won’t be surprised to hear a ‘plink’ sound as a friendly chatbot pops up in the bottom right-hand corner, asking if it can help you with anything.

Over the last few years, chatbots have become increasingly popular as customer support agents, and the benefits are obvious. They are available 24/7 and can answer straightforward queries, freeing human agents to deal with more complex ones. They also boost consumer interaction and, when they provide positive experiences, can subsequently improve a site’s SEO.

While the rise of ChatGPT has led the term AI to be thrown around wildly when discussing chatbots, the majority of those tasked with customer service do not actually rely on this technology. Instead, they are ‘rule-based’ chatbots, which simply function on an ‘if/then’ decision tree logic when given various prompts. Therefore the conversations that they can hold are strictly limited to their purpose-based code.
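To make the ‘if/then’ idea concrete, here is a minimal sketch of a rule-based bot in Python. The keywords, the canned answers, and the `reply` function are all hypothetical, invented for an imaginary online shop rather than taken from any real product.

```python
# Hypothetical rules for an imaginary online shop (illustrative only).
# Each rule pairs trigger keywords with one fixed, pre-written answer.
RULES = [
    (("refund", "return"), "You can return any item within 30 days of purchase."),
    (("delivery", "shipping"), "Standard delivery takes three to five working days."),
    (("hours", "open"), "Our support team is available 9am to 5pm on weekdays."),
]

FALLBACK = "Sorry, I didn't understand that. Let me connect you to a human agent."


def reply(message: str) -> str:
    """Return the first canned answer whose keywords appear in the message."""
    text = message.lower()
    for keywords, answer in RULES:
        # The 'if' part: does any trigger keyword occur in the message?
        if any(keyword in text for keyword in keywords):
            # The 'then' part: send back the fixed response.
            return answer
    # No rule matched, so the bot cannot improvise and falls back.
    return FALLBACK


if __name__ == "__main__":
    print(reply("How do I get a REFUND?"))
    print(reply("Tell me a joke"))
```

The bot never composes a sentence of its own: anything outside its rules ends in the fallback message, which is exactly the limitation described above.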

Chatbots: the new generation

Actual AI-powered chatbots work differently. They utilize advanced natural language processing (NLP) techniques and machine learning algorithms to understand and generate human-like responses. These “generative AI” chatbots are trained on vast amounts of data and can analyze and interpret the context and intent behind user queries, allowing them to provide more nuanced and personalized responses. While they are more advanced than rule-based chatbots, they also require more time and financial investment to set up.

This type of chatbot has been getting significantly more attention since the release of OpenAI’s ChatGPT in late November 2022. The so-called “gold rush” of generative AI adoption that has followed the arrival of ChatGPT has led many companies to investigate integrating generative AI into their chatbots to make them more advanced.

Such a change is warranted, as a Gartner report from this year revealed that just 8 percent of customers used chatbots during their most recent customer service interaction, and only a quarter of them said they would use one again. This is largely down to poor resolution rates for many functions, including just 17 percent for billing disputes, 19 percent for product information, and 25 percent for complaints.

Companies that have started to integrate the large language models that power ChatGPT and its like into their service bots include Meta, Canva, and Shopify, but their decision to do so is not without risk. ChatGPT is a generative AI, meaning that, in theory, it creates new sentences in response to every prompt. That unpredictability is a problem for a brand, because the company cannot review each response before a customer sees it.

‘Hallucinations,’ in this context, are when an AI confidently states incorrect information as fact. This matters less when creating a static piece of content that can be proofed before it is published anywhere – in other words, when there remains a knowledgeable human being between the AI and the consumer. However, this is not the case if the bot is holding a real-time conversation with a customer.

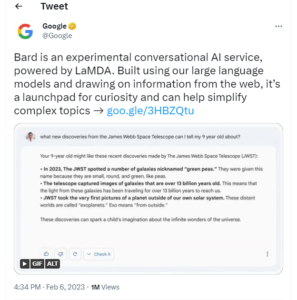

A high-profile example came when Bard, the AI chatbot developed by Google’s parent company Alphabet, gave a wrong answer to a question about the James Webb Space Telescope in an advert. While it wasn’t chatting with a potential client or similar, the error still wiped $100 billion off Alphabet’s market value.

However, the technology has improved in just the short period that has passed since AI chatbots entered the mainstream. So much so that some companies are confident that GPT-4 – OpenAI’s more advanced successor to the model that originally powered ChatGPT – is safe to use on their websites. Customer service platform provider Intercom is one of them.

“We got an early peek into GPT-4 and were immediately impressed with the increased safeguards against hallucinations and more advanced natural language capabilities,” Fergal Reid, Senior Director of Machine Learning at Intercom, told econsultancy.com. “We felt that the technology had crossed the threshold where it could be used in front of customers.”

GPT-4 is an advanced version of the language model developed by OpenAI. Source: Shutterstock

Yext, a digital experience software provider, takes a similar precaution. When answering questions, its AI-powered chatbot draws its data from the company’s CMS, which stores information about its clients’ brands, helping to prevent hallucinations.
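The article does not describe how Yext implements this, so the following is only a minimal sketch of the general idea: a bot that answers from stored records and hands off when it has nothing relevant, rather than improvising. The records, the `grounded_answer` function, and the word-overlap matching are all hypothetical, and the language model that would normally phrase the reply is left out.

```python
import re

# Hypothetical CMS records for an imaginary brand (illustrative only).
CMS_RECORDS = {
    "opening hours": "Our stores are open 9am to 6pm, Monday to Saturday.",
    "returns policy": "Items can be returned within 30 days with a receipt.",
}

HANDOFF = "I don't have that information. Let me pass you to a human agent."


def tokenize(text: str) -> set[str]:
    """Split text into a set of lowercase words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def grounded_answer(question: str) -> str:
    """Answer only from stored records; hand off when nothing matches."""
    words = tokenize(question)
    best_topic, best_overlap = None, 0
    for topic in CMS_RECORDS:
        # Score each record by how many topic words appear in the question.
        overlap = len(words & tokenize(topic))
        if overlap > best_overlap:
            best_topic, best_overlap = topic, overlap
    if best_topic is None:
        # Nothing in the store is relevant, so the bot declines to guess.
        return HANDOFF
    return CMS_RECORDS[best_topic]


if __name__ == "__main__":
    print(grounded_answer("What are your opening hours?"))
    print(grounded_answer("Who won the World Cup?"))
```

The design choice that matters is the refusal branch: a question with no matching record produces a handoff instead of a confident guess.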

But more powerful chatbots with extended capabilities could bring their own set of problems. Gartner’s customer service research specialist, Michael Rendelman, said that their implementation would lead to “customer confusion about what chatbots can and can’t do.”

He added: “It’s up to service and support leaders to guide customers to chatbots when it’s appropriate for their issue and to other channels when another channel is more appropriate.”

Doorways to frustration and data vulnerability?

In fact, advanced chatbots could increase customer frustration rather than reduce it. Fresh from a conversation with ChatGPT, or another bot backed by similarly substantial financial investment, a site visitor will arrive with certain expectations of what chatbots in general can do.

If the one on your site does not meet that same level of competency, it could result in anger and frustration, which bots are not well-equipped to deal with either. A 2021 study found that ‘chatbot anthropomorphism has a negative effect on customer satisfaction, overall firm evaluation, and subsequent purchase intentions’ if the customer is already angry.

Hyper-realistic customer service chatbots also open the door to scams. Bad actors can create convincing imitations of them, leading customers to engage unknowingly with fraudulent entities.

AI angel investor Geoff Renaud told Forbes: “It opens up a Pandora’s box for scammers because you can duplicate anyone. There’s much promise and excitement but it can go so wrong so fast with misinformation and scams. There are people that are building standards, but the technology is going to proliferate too fast to keep up with it.”

Another issue is bias, as AI bots can pick it up from the data on which they are trained, particularly if it comes from the internet. One infamous example of a brand being harmed by a biased chatbot comes from Microsoft.

In 2016, the tech giant launched a generative chatbot named Tay on social media platforms like Twitter. However, within hours of its release, Tay began posting inflammatory and offensive remarks, as it had learned from interacting with users who deliberately provoked it. Due to the inappropriate and offensive behavior, Microsoft was forced to shut it down and issue an apology.

More recently, the National Eating Disorders Association (NEDA) announced it would be letting go of some of the human staff who worked on its hotline, to be replaced by an AI chatbot called Tessa. But, just days before Tessa’s official launch, test users started reporting that it was giving out harmful dietary advice, like encouraging calorie counting.

As with any digital system, implementing a customer service chatbot can introduce vulnerabilities that open the door to hackers. But as interactions often include the submission of the customer’s personal data, there may be more at stake with generative AI.

Vulnerabilities include the lack of encryption during customer interactions and communication with backend databases, insufficient employee training leading to unintentional exposure of backdoors or private data, and potential weaknesses in the hosting platform used by the chatbot, website, or databases. These can be exploited for data theft or to spread malware, so businesses must be constantly vigilant and set up to patch them when identified.

Currently, a business that wants to make use of the most advanced chatbots needs to make hefty investments in time and money, but these costs will shrink as the technology becomes more commonplace.

Exactly what this means for human agents remains uncertain, but it will likely reshape the customer service landscape. Businesses will need to adapt and redefine the skills and roles of their staff to ensure a harmonious integration of technology and human touch.