Deepfakes ranked most serious AI crime threat by experts

- A study led by UCL asked 31 experts to rank the biggest AI crime threats

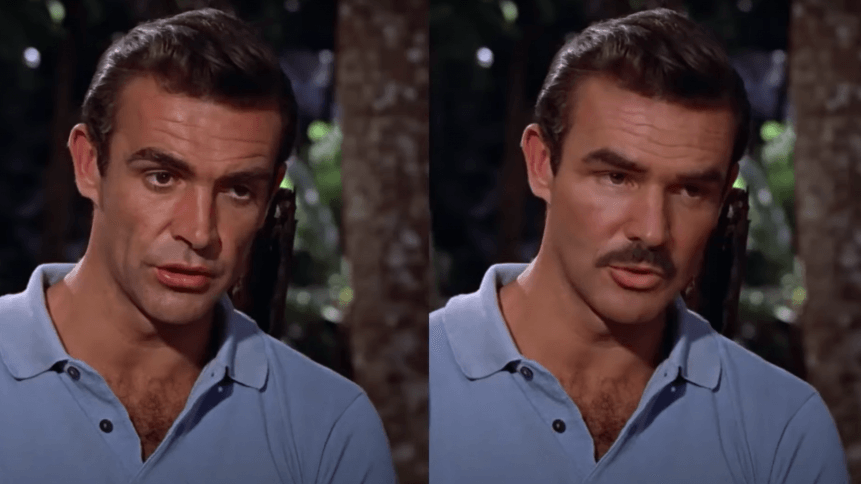

- AI-manipulated videos, or deepfakes, were ranked number one

Deepfakes represent the biggest current threat posed by artificial intelligence (AI) technology in terms of applications for crime or terrorism, according to experts cited in a report published by University College London (UCL).

Funded by UCL’s Dawes Centre for Future Crime, the study identified 20 ways AI could be used for nefarious ends over the next 15 years, asking 31 AI experts to rank them based on their potential for harm, including the money they could make, how easy they were to use, and how hard they were to stop.

The experts – drawn from academia, the private sector, police, government and state security – agreed that deepfakes represent the largest threat.

As deepfake technology continues to advance, specialists said that fake content would become more difficult to identify and stop, and could assist bad actors in a variety of aims, from discrediting a public figure to extracting funds by impersonating a couple’s son or daughter in a video call.

Such uses could undermine trust in audio and visual evidence, the authors said, which could cause great societal harm. Worryingly, both of those scenarios, or versions similar to them, have already played out.

Last year, the potential power of AI fakery drew mainstream attention when a deepfake video emerged on Facebook of the platform’s founder Mark Zuckerberg discussing the power of holding “billions of people’s stolen data.”

The same year also saw scammers leveraging AI and voice recording to impersonate a business executive, adding his “slight German accent and other qualities” to successfully request the transfer of hundreds of thousands of dollars of company money to a fraudulent account.

Javvad Malik, Security Awareness Advocate at KnowBe4, told TechHQ: “The use of technology to impersonate a chief executive has some scary implications, especially given the fact that it is not inconceivable that coupled with video, the same attack could be played out as a video-call.”

The rise of deepfakes has been rapid over the last couple of years. A 2019 report by Deeptrace found that the number of deepfake videos had doubled year-on-year, with nearly 15,000 uploaded online. The ‘deepfake phenomenon’, the researchers said, could be attributed to the growing commodification of tools and services that lower the barrier for non-experts.

Discussing the results of the latest report, lead author Dr Matthew Caldwell said that with people now spending large parts of their lives online, “online activity can make and break reputations.”

“Such an online environment, where data is property and information power, is ideally suited for exploitation by AI-based criminal activity,” he said.

“Unlike many traditional crimes, crimes in the digital realm can be easily shared, repeated, and even sold, allowing criminal techniques to be marketed and for crime to be provided as a service. This means criminals may be able to outsource the more challenging aspects of their AI-based crime.”

Aside from fake content, five other AI-enabled crimes were judged to be of high concern: using driverless vehicles as weapons, crafting more tailored phishing messages (spear phishing), disrupting AI-controlled systems, harvesting online information for large-scale blackmail, and AI-authored fake news.

Crimes of lesser concern included the sale of items and services falsely labelled as ‘AI’, including in cybersecurity and advertising, which could be profitable for those selling them.

Those of lowest concern included ‘burglar bots’ – small robots used to gain entry into properties through access points such as letterboxes or cat flaps – which were judged to be easy to defeat, for instance through letterbox cages, and AI-assisted stalking, which, although extremely damaging to individuals, could not operate at scale.