Mozilla in space – a show of how humans could work alongside robots

Advanced physical robotics systems offer huge potential in assisting workers, particularly in manual industries like manufacturing, mining, agriculture and logistics, in carrying out tasks more efficiently and safely.

Given the countless sci-fi parallels, it’s an appealing vision – we picture a mechanic under a car, for example, taking a wrench from a robot assistant, or scenes like those in Iron Man.

But given the technology’s pace of development, it’s also a viable vision, even if it comes by degrees.

ABI Research has estimated that four million commercial robots will be deployed across 50,000 warehouses by 2025, driven by increased demand for same-day delivery services in e-commerce.

And while you might imagine that such expensive systems will be the preserve of titans like the carmakers on whose production lines they were born, we can expect them to become accessible in flexible XaaS (anything-as-a-service) packages, ready to be quickly tuned to specific needs.

But while robots are generally programmed to carry out repetitive tasks in isolation as part of a production line – as the 422,000 industrial robots shipped around the world in 2018 do – research and development is increasingly targeting how these robots can augment the roles of human workers on the fly.

For that to happen, humans must be able to interact with their machine counterparts in a way that’s both natural and efficient, and robotics systems must be able to interpret human commands seamlessly. Bristol University, for one, has carried out exciting research into these future relationships.

But the mechanics of these relationships are also being developed in ‘the field’.

Hands-free robotics commands

As reported by ZDNet, this week Mozilla (the creator of the Firefox web browser) unveiled a project with the German Aerospace Center, or Deutsches Zentrum für Luft- und Raumfahrt (DLR), to integrate its speech recognition technology into moon robotics.

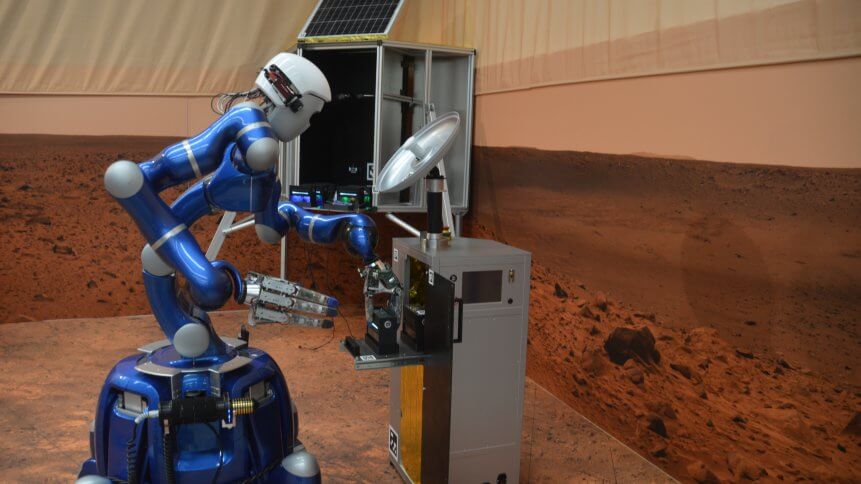

Robotics systems are used in space to support astronauts with tasks such as maintenance, repairs, photography and sample collection – while future applications could include mining. In this inherently remote and inhospitable environment, the technology can be vital in helping astronauts complete their tasks.

But these systems can also be improved. One area is the astronauts’ ability to control them efficiently while focused on the task in hand, so that work is carried out as effectively, safely and quickly as possible.

This is where Mozilla’s Deep Speech automatic speech recognition (ASR) engine comes into play, providing voice control of robots when astronauts have their hands occupied.

Reuben Morais, a Senior Research Engineer on the Machine Learning team at Mozilla, explained: “Deep Speech is composed of two main subsystems: an acoustic model and a decoder.

“The acoustic model is a deep neural network that receives audio features as inputs, and outputs character probabilities. The decoder uses a beam search algorithm to transform the character probabilities into textual transcripts that are then returned by the system.”
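To make the decoder half of that description more concrete, here is a minimal sketch of a beam search over per-timestep character probabilities. It is not Mozilla’s implementation. It assumes the acoustic model emits one probability row per audio frame with a CTC-style “blank” symbol in the last column, and the function name `ctc_beam_search`, the `symbols` list and the toy probabilities are all hypothetical. A production decoder would typically work in log space to avoid underflow and may also weigh candidates with a language model, both of which are left out here.

```python
from collections import defaultdict


def ctc_beam_search(probs, symbols, beam_width=4):
    """Return the most probable transcript under a simplified CTC beam search.

    probs   -- list of rows, one per time step; each row holds a probability
               for every entry in `symbols`, plus the blank in the last column
               (assumed layout, for illustration only)
    symbols -- list of output characters, without the blank
    """
    blank = len(symbols)
    # Each prefix tracks two scores: probability of paths ending in a blank,
    # and probability of paths ending in a real character.
    beams = {(): (1.0, 0.0)}

    for step in probs:
        candidates = defaultdict(lambda: [0.0, 0.0])
        for prefix, (p_blank, p_char) in beams.items():
            total = p_blank + p_char
            # Emitting a blank leaves the prefix unchanged.
            candidates[prefix][0] += total * step[blank]
            for idx in range(blank):
                p = step[idx]
                extended = prefix + (idx,)
                if prefix and prefix[-1] == idx:
                    # A repeated character collapses into the same prefix...
                    candidates[prefix][1] += p_char * p
                    # ...unless a blank separated the two occurrences.
                    candidates[extended][1] += p_blank * p
                else:
                    candidates[extended][1] += total * p
        # Keep only the `beam_width` most probable prefixes.
        ranked = sorted(candidates.items(), key=lambda kv: sum(kv[1]), reverse=True)
        beams = {prefix: tuple(scores) for prefix, scores in ranked[:beam_width]}

    best = max(beams, key=lambda prefix: sum(beams[prefix]))
    return "".join(symbols[i] for i in best)


if __name__ == "__main__":
    # Two frames, one character "a" plus blank. Picking the top symbol per
    # frame would give an empty transcript (0.6 * 0.6 = 0.36), but the three
    # paths that collapse to "a" sum to 0.64, so the beam search prefers "a".
    toy_probs = [[0.4, 0.6], [0.4, 0.6]]
    print(ctc_beam_search(toy_probs, ["a"]))  # prints "a"
```

The toy input shows why a search is used rather than simply taking the likeliest character in each frame: several different frame-level paths can collapse to the same transcript, and their probabilities add up.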

The German space agency DLR is reportedly integrating Mozilla’s Deep Speech engine into its hardware, and will in turn contribute its own tests, speech samples and recordings to help improve it.

It’s unconfirmed which hardware Deep Speech will be integrated with, although ZDNet notes that the DLR is behind the design of Rollin’ Justin, a two-armed humanoid robot built to test astronaut and robot collaboration across difficult terrain.