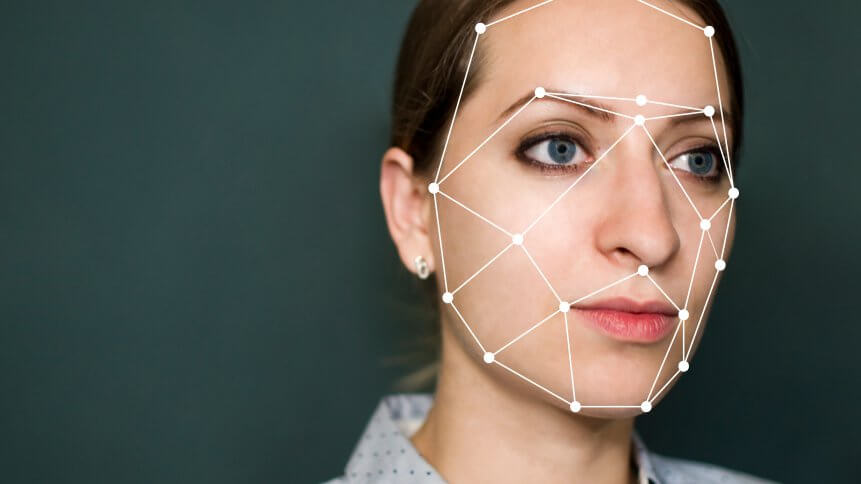

Deepfake videos double in less than a year

The creation of deepfakes has surged, with the number nearly doubling in the last nine months alone.

Deepfakes refer to videos that have been manipulated using AI programs. Researchers from cybersecurity firm Deeptrace found that 14,698 videos came online this year, compared with the 7,964 reported at the end of 2018.

Although many of these appear unrealistic, the firm said, the technology is developing at such a rate that it will become increasingly difficult to distinguish certain doctored videos from genuine ones.

‘Deepfake phenomenon’

While many of the fears around the technology stem from its potential use in the dissemination of ‘fake news’ for political purposes, Deeptrace reported that 96 percent of the videos were pornographic in nature.

Videos frequently featured the computer-generated face of a celebrity overlaid onto that of the original actor in a pornographic scene. All videos featured women.

Deeptrace attributed the rise of the “deepfake phenomenon” to an increase in the commodification of tools and services that lower the barrier for non-experts. The report noted a “significant contribution” to the creation and use of these tools in China and South Korea.

Following the alleged impact of fake news on the results of the 2016 US presidential election and the EU referendum in the UK, many predicted that deepfakes could provide a dangerous new medium for “information warfare”, helping to spread misinformation.

However, the technology’s increasingly heavy use in the creation of non-consensual pornography is just as, if not more, troubling. Deeptrace said that the top four websites contributing to deepfake pornography accounted for more than 132 million views on videos targeting hundreds of female celebrities worldwide.

That’s not to say deepfakes haven’t been used in the political sphere, though. Two landmark cases in Gabon and Malaysia, which received minimal coverage in the West, were linked to an attempted government coup and an ongoing political smear campaign.

Digital subversion

In a blog post, Deeptrace CEO and Chief Scientist, Giorgio Patrini, said that the rise of deepfakes is forcing us towards an “unsettling realization” that video and audio are no longer tenable “records of reality”.

“Every digital communication channel our society is built upon, whether that be audio, video, or even text, is at risk of being subverted,” said Patrini.

Patrini also noted that, outside of pornography and politics, the “weaponization of deepfakes and synthetic media” is affecting the cybersecurity landscape. Deepfake videos of prominent leaders could be used in elaborate spear-phishing attacks, for example, while fake ‘sex tapes’ could be used for corporate blackmail.

In March this year, scammers were thought to have leveraged AI to impersonate the voice of a business executive at a UK-based energy firm, persuading an employee to transfer hundreds of thousands of dollars to a fraudulent account.

Paul Bischoff, Privacy Advocate at Comparitech.com, told TechHQ that while making convincing deepfakes requires hundreds of photos of the subject and special skills, the “barriers are getting lower” and “we need to prepare for a world where deepfakes are commonplace.”

“It would be unwise and impractical to make deepfake software illegal, and there’s no stopping its spread at this point. What we need to do is build awareness, good judgment, and a healthy sense of scepticism,” said Bischoff.

“Always consider the source when viewing a video of someone,” he said, adding that those concerned about falling victim to them could consider posting fewer “selfies” on publicly accessible social media.

Patrini said: “Deepfakes are here to stay, and their impact is already being felt on a global scale.”

“We hope this report stimulates further discussion on the topic and emphasizes the importance of developing a range of countermeasures to protect individuals and organizations from the harmful applications of deepfakes.”