How the demands of AI are impacting data centers and what operators can do

The growth of AI applications has revolutionized the data center industry, but it hasn’t come without challenges. One of the foremost concerns is the increased power consumption and high-power density environments that AI demands, significantly impacting the physical infrastructure requirements of data facilities.

In the late 1970s, data center power density was generally between 2 kW and 4 kW, but now, it is not uncommon to exceed 40 kW to accommodate AI or HPC (high-performance computing) workloads. In November, Silicon Valley Power revealed that its forecasted annual data center load in 2035 “almost doubles the current system load.”

Colm Shorten, Senior Director of Data Centers at JLL Real Estate, said: “It’s true to say that data center infrastructure hasn’t changed a great deal in 20 years, so there are models of design that are used repeatedly, whether they’re based on uptime or five-nine availability.

“The fundamental thing was always making sure the data center runs, has power, has network, has cooling, and has security. They typically would have been running in the medium to high single digits, about 8 to 12 kW, and 19 kW would be considered high.

Source: Legrand Data Center Solutions

“What AI has done in a disruptive sense is that it’s challenged what those power requirements are, so rack density and rack power requirements have increased. If you generate a lot of power, you generate a lot of heat. If you generate a lot of heat, you’ve got to dissipate that heat and get rid of it.”

“There needs to be a mind shift now on how we cool these racks and how we deliver power to the racks based on the demands from AI,” added David Bradley, the Regional Director of Ireland and Central Eastern Europe at Legrand Data Center Solutions.
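The link between rack power and heat can be made concrete with a rough sizing calculation. The sketch below assumes that essentially all electrical power drawn by IT equipment ends up as heat, and estimates the airflow an air-cooled rack would need from the sensible-heat relation Q = P / (ρ · c_p · ΔT). The air properties, the 12 K temperature rise, and the function name are illustrative assumptions, not figures from the interviewees.

```python
# Rough airflow estimate for an air-cooled rack (illustrative assumptions only).
AIR_DENSITY_KG_M3 = 1.2           # assumed: air at roughly 20 °C near sea level
AIR_SPECIFIC_HEAT_J_KG_K = 1005   # assumed: dry air


def required_airflow_m3_s(rack_power_kw: float, delta_t_k: float = 12.0) -> float:
    """Volumetric airflow needed to carry away rack_power_kw of heat
    with an inlet-to-outlet air temperature rise of delta_t_k kelvin."""
    if rack_power_kw < 0 or delta_t_k <= 0:
        raise ValueError("power must be non-negative and delta_t_k positive")
    heat_w = rack_power_kw * 1000.0
    return heat_w / (AIR_DENSITY_KG_M3 * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_k)


if __name__ == "__main__":
    # Rack powers taken from the ranges quoted in the article.
    for kw in (4, 12, 40):
        print(f"{kw:>3} kW rack -> {required_airflow_m3_s(kw):.2f} m^3/s of air")
```

Because airflow scales linearly with power, a 40 kW rack needs ten times the air of a 4 kW rack at the same temperature rise, which is one reason operators look beyond air cooling at higher densities.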

The challenges AI places on the data center industry

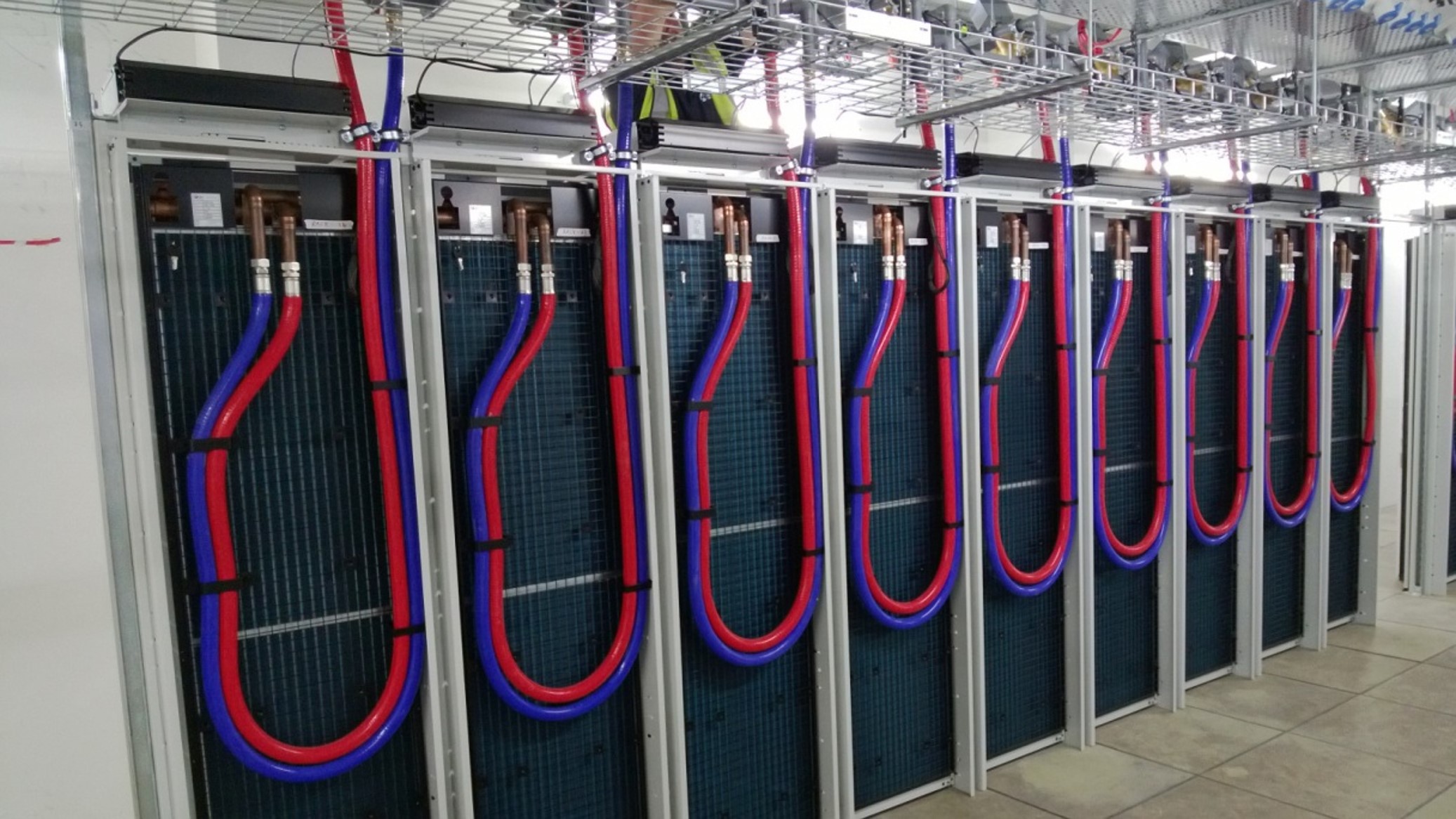

As computing power and chip designs advance, equipment racks double in power density every six to seven years. According to the Uptime Institute, more than a third of data center operators say their densities have “rapidly increased” in the past three years. Densification of AI server clusters requires a shift from air to liquid cooling, bringing challenges such as site constraints, obsolescence risks, installation complications, and limited sustainable fluid options. Specialized cooling methods like rear door heat exchangers also become necessary to maintain redundancy and efficiency.
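The doubling claim above lends itself to a simple projection. The sketch below assumes smooth exponential growth with a constant doubling period of 6.5 years (the midpoint of "six to seven years"). The 10 kW starting density and the function names are hypothetical and chosen only for illustration.

```python
import math


def projected_density_kw(current_kw: float, years: float,
                         doubling_years: float = 6.5) -> float:
    """Rack density after `years`, assuming it doubles every `doubling_years`."""
    return current_kw * 2 ** (years / doubling_years)


def years_to_reach(current_kw: float, target_kw: float,
                   doubling_years: float = 6.5) -> float:
    """Years until density grows from current_kw to target_kw."""
    if current_kw <= 0 or target_kw <= 0:
        raise ValueError("densities must be positive")
    return doubling_years * math.log2(target_kw / current_kw)


if __name__ == "__main__":
    # Hypothetical facility designed around 10 kW racks today.
    for horizon in (5, 10, 15):
        print(f"year {horizon:>2}: {projected_density_kw(10, horizon):.1f} kW per rack")
    print(f"10 kW -> 40 kW takes {years_to_reach(10, 40):.1f} years")
```

Under these assumptions a quadrupling of density takes two doubling periods, or 13 years, which falls within the 15 to 30 year asset life discussed later in the article.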

The multifaceted nature of AI workloads adds another layer of complexity. Training demands less redundancy but emphasizes cost-efficiency. Mr. Bradley said: “Training AI is not latency-dependent, so it might mean that you could actually deploy new data centers somewhat outside of the central FLAPD region.”

Mr. Shorten added: “In the past, we would typically find a site that’s within either a cloud region or a densely populated metro, build a data center there, and bring the power to it. Now you bring the data center to the power. AI training sites are less latency-sensitive than a traditional cloud model. That means we can get access to power that traditionally wouldn’t have been available.”

The surge in AI demands intensifies network requirements too, placing added stress on data centers to ensure robust connectivity and low latency. At the same time, the need for power redundancy and resilience grows. This necessitates high-reliability mechanisms and seamless switching between power sources to avert downtime risks for the data center at large. Operational risks such as power surges and harmonic distortions caused by non-linear components pose continual threats to efficiency and safety, often culminating in overheating issues.

According to Mr. Shorten, the evolving nature of data center requirements for AI workloads means operators need to think about future-proofing facilities. He said: “We have to develop what’s called a hybrid solution because if we build a pure traditional model, there’s a risk of it becoming obsolete in two to four years’ time.

“When you think that building and developing your data center costs somewhere between $7 million and $10 million a MW – you build a large 100 MW data center, you’re into billions. Then you need that asset to last 15 to 30 years. Admittedly there’s a technology refresh in between, but if you have to change your cooling technology or your power distribution after year six or seven when some of these AI components that we’re finding today become almost commoditized, then you’re going to have a challenge.

“Some of these machines and applications are physically heavy, so if I built a data center 20 years ago, I’d have 12 kN in a floor and would have to go up two stories. If I’m adding on rear door heat exchangers and other infrastructure, that may go up to 15 to 20, 30 kN. It’s very difficult to re-engineer and retrofit that – i.e., print a new floor – in year two or three.”

Mr. Bradley added: “You could add 200 to 300 kg of weight on a rack. Now, what you’ve got is a floor that cannot take that. Then there’s a knock-on effect; you do one thing to retrofit that, then that affects something else, and that affects something else.

“So you have to look at the requirements of AI – fundamentally, the power and the cooling demands of AI – and then your design goes around from there.”
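The floor-loading concern can be sanity-checked with basic arithmetic. The sketch below converts added rack mass into force and spreads the total over the rack footprint. It assumes the floor ratings quoted above are expressed per square metre, which the interviewees do not state. The 900 kg base rack mass and the 0.6 m by 1.2 m footprint are hypothetical. This is a back-of-the-envelope check, not a structural assessment.

```python
STANDARD_GRAVITY_M_S2 = 9.80665


def mass_to_kn(mass_kg: float) -> float:
    """Weight force in kilonewtons exerted by a mass in kilograms."""
    return mass_kg * STANDARD_GRAVITY_M_S2 / 1000.0


def footprint_load_kn_m2(total_mass_kg: float, footprint_m2: float) -> float:
    """Load per square metre if the mass rests only on its own footprint."""
    if footprint_m2 <= 0:
        raise ValueError("footprint must be positive")
    return mass_to_kn(total_mass_kg) / footprint_m2


if __name__ == "__main__":
    base_rack_kg = 900.0      # hypothetical loaded rack before retrofit
    added_kg = 300.0          # upper end of the 200 to 300 kg quoted above
    footprint_m2 = 0.6 * 1.2  # hypothetical rack footprint
    floor_rating_kn_m2 = 12.0 # assumed to be per square metre

    load = footprint_load_kn_m2(base_rack_kg + added_kg, footprint_m2)
    print(f"added weight: {mass_to_kn(added_kg):.2f} kN")
    print(f"footprint load: {load:.1f} kN/m^2 vs rating {floor_rating_kn_m2} kN/m^2")
    print("exceeds rating" if load > floor_rating_kn_m2 else "within rating")
```

An extra 300 kg adds roughly 2.9 kN per rack. Whether that exceeds a floor's rating depends on how the load is distributed, so any real retrofit decision would need a structural engineer's input.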

However, not all the challenges of surging AI demands relate to physical infrastructure. Regulators can struggle to predict the technology’s trajectory, leading to varied regulatory approaches like the EU’s AI Act and the NIS2 Directive. This makes it difficult for data center operators to navigate compliance requirements and adapt their infrastructure accordingly.

Similarly, it becomes more difficult for data centers to meet their sustainability goals. According to the 2022 Data Center Industry Survey by the Uptime Institute, 63 percent of data center operators expect mandatory sustainability reporting in the next five years. The Corporate Sustainability Reporting Directive (CSRD) will start to impact some EU-based businesses from January 1, 2024, and will necessitate reporting new metrics like water and carbon use effectiveness. That adds pressure to prolong infrastructure lifespans, recycle coolants, partner with sustainable suppliers, and implement renewable energy sources.
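Water and carbon use effectiveness are commonly defined, following The Green Grid, as ratios against IT equipment energy, alongside the better-known power usage effectiveness (PUE). The sketch below shows how the three figures could be derived from annual totals. The class name, field names, and sample values are hypothetical, and the carbon calculation assumes a single average grid emission factor for all facility energy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AnnualFacilityData:
    it_energy_kwh: float          # energy delivered to IT equipment
    total_energy_kwh: float       # all energy used by the facility
    water_litres: float           # site water consumption
    grid_kg_co2e_per_kwh: float   # assumed average emission factor


def pue(d: AnnualFacilityData) -> float:
    """Power usage effectiveness: total facility energy / IT energy."""
    return d.total_energy_kwh / d.it_energy_kwh


def wue(d: AnnualFacilityData) -> float:
    """Water usage effectiveness in litres per kWh of IT energy."""
    return d.water_litres / d.it_energy_kwh


def cue(d: AnnualFacilityData) -> float:
    """Carbon usage effectiveness in kg CO2e per kWh of IT energy."""
    return d.total_energy_kwh * d.grid_kg_co2e_per_kwh / d.it_energy_kwh


if __name__ == "__main__":
    # Hypothetical sample figures, not taken from any real facility.
    sample = AnnualFacilityData(
        it_energy_kwh=10_000_000,
        total_energy_kwh=14_000_000,
        water_litres=18_000_000,
        grid_kg_co2e_per_kwh=0.3,
    )
    print(f"PUE {pue(sample):.2f}, WUE {wue(sample):.2f} L/kWh, "
          f"CUE {cue(sample):.2f} kgCO2e/kWh")
```

With a single emission factor, CUE is simply that factor multiplied by PUE, so lowering either cooling overhead or grid carbon intensity improves the reported figure.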

As data volume increases, so do security risks, with AI introducing new threats like automated attacks and vulnerability identification. But not every risk is malicious: according to a recent study from the Uptime Institute, nearly 40 percent of organizations have suffered a major outage due to human error since 2020. Of these, almost 85 percent were caused by staff failing to follow procedures or by flaws in the processes followed. Data centers must implement advanced encryption, biometric authentication, and cybersecurity solutions to counter unauthorized access and monitor for anomalies.

Mr. Shorten said: “From a security standpoint, AI is kind of a double-edged sword. The positive is that AI is very good if you were to apply it to look at changes in patterns. So, if there was a cyberattack or somebody was breaking into your environment, from a network standpoint it can pick up abnormalities. On the flip side, it’s very, very powerful and can be used in a bad way by bad actors.

“The guys who are cybercriminals and the guys who are building security and protection are constantly battling with each other to develop either their protection or penetration.”

Solutions to the challenges

As the number of challenges has grown, so have the available solutions. Mr. Bradley said: “You need to address the demands that AI brings from both a power and cooling perspective and we, Legrand, have those solutions.”

Innovative designs like USystems’ Rear Door Coolers optimize thermal management while addressing challenges associated with space constraints and sustainable cooling options. They ensure optimum thermal and energy performance by removing the heat generated by active equipment at source, preventing hot exhaust air from entering the data room. The coolers allow load removal of up to 92 kW per cabinet and have been recognized with the UK’s most prestigious business prize, the Queen’s Award for Enterprise: Innovation.

Racks that run AI applications need robust cooling systems. Minkels’ Nexpand cabinets come with airflow management accessories designed to seal gaps, manage cable entry, and create an airtight environment for effective airflow control. Liquid cooling solutions, such as direct-to-chip or immersion cooling, are also increasingly being adopted to manage high-density environments, dissipating heat more effectively than traditional air-cooling methods.

Intelligent rack power distribution units (PDUs) like the Raritan PX4 and the Server Technology PRO4X rack PDUs have been designed to handle the high power consumption and density AI brings. These best-in-class PDUs offer industry-proven high density outlet technology and ground-breaking intelligence features that cater to complex AI requirements. Modular solutions and customizable cabinets provide the flexibility and scalability required to accommodate future growth.

Track Busway solutions with monitoring points can identify potential energy efficiency and reliability improvements, helping to make power distribution more responsive to dynamic needs. Busways designed with oversized neutral conductors and power meters – for example, the Starline Critical Power Monitor – can also mitigate the operational risks of power surges and harmonic distortion. The Track Busway from Starline also helps cut electrical installation time by 90 percent, thanks to its first-of-its-kind access slot, which allows for flexible layout changes without service interruption. High-density fiber solutions such as Infinium acclAIM can meet any low-latency requirements of AI inference alongside other network demands, ensuring quick response times and efficient data transmission between metropolitan hubs and data centers.
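The case for oversized neutral conductors mentioned above follows from how harmonic currents behave. The sketch below computes current total harmonic distortion (THD) from a harmonic spectrum and estimates neutral current in a balanced three-phase, four-wire system, where triplen harmonics (3rd, 9th, 15th, and so on) add in the neutral instead of cancelling. The harmonic spectrum is a hypothetical example, and the calculation assumes identical non-linear loads on each phase.

```python
import math


def current_thd(fundamental_a: float, harmonics_a: dict[int, float]) -> float:
    """THD as a ratio: RMS of harmonic currents over the fundamental."""
    if fundamental_a <= 0:
        raise ValueError("fundamental current must be positive")
    return math.sqrt(sum(i ** 2 for i in harmonics_a.values())) / fundamental_a


def phase_rms_a(fundamental_a: float, harmonics_a: dict[int, float]) -> float:
    """Total RMS phase current including harmonics."""
    return math.sqrt(fundamental_a ** 2 + sum(i ** 2 for i in harmonics_a.values()))


def neutral_current_a(harmonics_a: dict[int, float]) -> float:
    """Neutral RMS current for balanced phases: triplen harmonics sum in phase."""
    triplen_sq = sum(i ** 2 for order, i in harmonics_a.items() if order % 3 == 0)
    return 3 * math.sqrt(triplen_sq)


if __name__ == "__main__":
    # Hypothetical per-phase spectrum: harmonic order -> RMS amps.
    fundamental = 100.0
    spectrum = {3: 60.0, 5: 40.0, 7: 20.0}
    print(f"THD: {current_thd(fundamental, spectrum):.1%}")
    print(f"phase RMS: {phase_rms_a(fundamental, spectrum):.1f} A")
    print(f"neutral: {neutral_current_a(spectrum):.1f} A")
```

In this example the neutral carries 180 A while each phase carries about 125 A, illustrating why a neutral sized only for phase current can overheat under heavily non-linear loads.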

Intelligent cabinet locking systems, like Nexpand’s Smart Lock, meet regulatory compliance mandates from PCI DSS, SOX, HIPAA, GDPR, and EN50600. Cabinets can be opened remotely, users can monitor who opened a cabinet, and the locks work in tandem with video surveillance solutions. Physical security like this helps, but operators should also consider intelligent PDUs that are equipped with the latest network security protocols and that offer diverse options for user authentication, password management, and best-in-class data encryption methods.

Energy-efficient hardware adoption and renewable energy sources are crucial to reduce data center operating costs and carbon footprint. Environmental monitoring devices, like SmartSensors from Raritan, can track entire facilities’ temperature, humidity, and airflow, enabling precise cooling management that minimizes energy waste. Monitoring data helps predict potential equipment failure, reducing the likelihood of unexpected downtime, and informing decisions regarding infrastructure upgrades, layout changes, or equipment replacements that will reduce energy usage.

Mr. Shorten said: “Between power, because of their Starline busways, and between what they do in rear door heat exchangers, Legrand has very innovative solutions that are helping us on that journey.

“They’re listening to the customer and providing solutions that we think are both innovative and that are going to help us meet the needs and demands that AI and high-performance computing bring in a sustainable way.”

With customizable, modular designs tailored for increasing power consumption, Legrand offers scalable solutions to meet evolving demands. Its approach ensures reliability, security, and energy efficiency for future-ready data centers. Legrand’s expert team assists in navigating complexities, optimizing every stage from design to management.

Learn more about how Legrand can prepare your business for AI by visiting its website.