Computing alpha: finance sector benefits from custom AI chips

Massively parallel custom artificial intelligence (AI) chips are providing innovators with a flexible processing platform to unlock breakthroughs across a wide range of fields. Uses for the technology include helping researchers to better understand climate science, and supplying financial analysts with insightful tools for extracting ‘alpha’ – or a competitive edge – from stock markets.

Designed from the ground up for performing machine learning and AI operations, a specialist technology platform has been developed by UK firm, Graphcore. The processing tasks whirring away inside the chips can be visualized as a graph – which is where Graphcore takes its name – comprising many interconnected, and sometimes looped, data-holding and compute-holding objects.
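To make the graph picture concrete, here is a minimal sketch in plain Python. It is not Graphcore's software or API: the class names `DataNode` and `ComputeNode`, the helper `run_graph`, and the toy numbers are all assumptions chosen for illustration. It shows data-holding objects connected to compute-holding objects, including a loop in which one object feeds back into itself.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class DataNode:
    """A data-holding vertex: it simply stores a value."""
    name: str
    value: float = 0.0


@dataclass
class ComputeNode:
    """A compute-holding vertex: reads its input data nodes, writes one output."""
    name: str
    fn: Callable[..., float]
    inputs: List[DataNode]
    output: DataNode

    def run(self) -> None:
        self.output.value = self.fn(*(d.value for d in self.inputs))


def run_graph(steps: List[ComputeNode], passes: int = 1) -> None:
    """Execute the compute vertices in order, repeating for looped graphs."""
    for _ in range(passes):
        for step in steps:
            step.run()


# Hypothetical toy graph: y = w * x + b, then a running total of y.
x = DataNode("x", 2.0)
w = DataNode("w", 3.0)
b = DataNode("b", 1.0)
y = DataNode("y")
state = DataNode("state", 0.0)

affine = ComputeNode("affine", lambda x, w, b: w * x + b, [x, w, b], y)
# The loop: `state` is both an input and the output of this vertex.
accumulate = ComputeNode("accumulate", lambda s, y: s + y, [state, y], state)

run_graph([affine, accumulate], passes=3)
print(y.value, state.value)  # 7.0 21.0
```

In a real machine learning workload the values would be tensors rather than single numbers, and the graph would contain many more vertices, but the structure of edges between data and compute is the same idea.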

Learning efficiently from data

Rather than tell computers what to do step by step, as is the case in conventional computer code, Graphcore’s designs are configured to let machines learn efficiently from data, and then infer results based on that data. This philosophy helps to explain the distinction between Graphcore’s Intelligence Processing Units (IPUs) and regular CPUs and GPUs – the chips found inside our laptops and elsewhere.

Source: Graphcore

If you put an IPU under the microscope, you would find not just one processor, but more than a thousand. Each of those can run multiple programs, which provides massively parallel processing capabilities for machine learning. Onboard communication channels inside the IPU allow all of the cores – the processing engines used to read and understand incoming data – to collaborate efficiently. Critically, at the wafer scale, the IPUs have been configured not just to support known machine learning methods efficiently, but also to allow for the discovery of new algorithms – a feature that makes the hardware more flexible in comparison with standard architectures.

Major breakthroughs in the ability of machines to understand or ‘generalize’ properties of large data sets have captured the interest of financial firms such as Man Group, an international investment management company headquartered in London, UK. Investment managers, supported by teams of quantitative researchers, have long been interested in how algorithms can be applied to financial data – for example, to understand how price changes can propagate based on market events. The sector was one of the first to explore the commercial potential of machine learning.

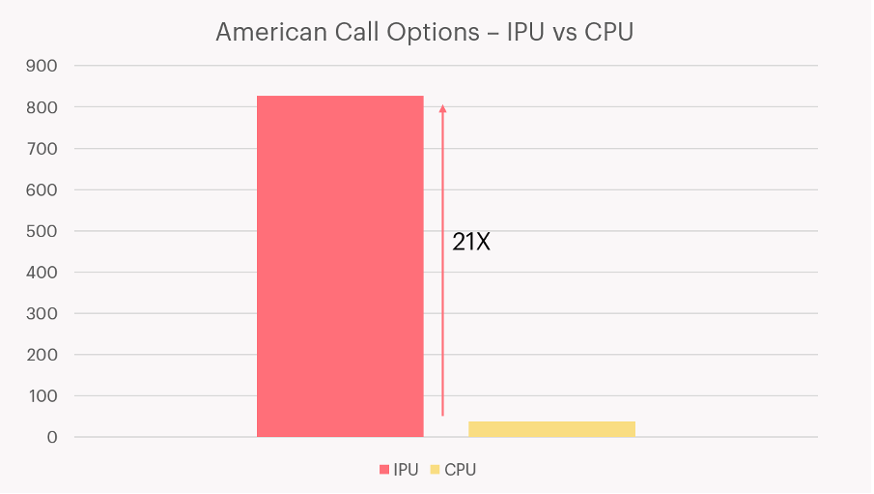

Until now, running data through artificial intelligence models has relied on a combination of CPUs and their graphics rendering equivalents, GPUs. But neither product is designed specifically for the task of inferring from data buried in gigabytes of information. Using dedicated IPUs puts you on a direct route from A to B rather than having to navigate a more tortuous, and much less efficient, processing path.

By speeding up training and processing, IPUs allow users to deploy applications in production, and iterate on them, much more quickly.

Pushing AI models to new heights

Making the most of what artificial intelligence has to offer increasingly means having to process vast data sets. Layered statistical models can require billions of inputs to make sense of the signals within the noise by testing and retesting their parameters until algorithms achieve high accuracy. With regular computing hardware, this can take a huge amount of time. But, thanks to Graphcore’s IPUs – which have been designed for exactly this set of tasks – customers can gather results much more quickly than their competitors and push their AI models to new heights.
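The idea of testing and retesting parameters can be sketched with a toy example. The snippet below is an illustration only, not a description of how any Graphcore customer trains models: it fits a straight line to five made-up data points by gradient descent, and the function name `fit_line`, the learning rate and the tolerance are all assumptions. Real models repeat the same adjust-and-check cycle over millions or billions of parameters, which is where the processing time goes.

```python
# Made-up, noise-free data drawn from y = 2x + 1 (for illustration only).
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]


def fit_line(xs, ys, lr=0.05, tol=1e-6, max_steps=10_000):
    """Adjust w and b repeatedly until the mean squared error drops below tol."""
    w, b = 0.0, 0.0
    n = len(xs)
    for step in range(1, max_steps + 1):
        # Test the current parameters against the data.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        loss = sum(e * e for e in errors) / n
        if loss < tol:
            break
        # Retest after nudging each parameter against its gradient.
        grad_w = 2.0 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2.0 * sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b, loss, step


w, b, loss, steps = fit_line(xs, ys)
print(f"w={w:.3f} b={b:.3f} loss={loss:.2e} after {steps} steps")
```

Even this two-parameter problem needs a few hundred passes over the data to converge, which hints at why hardware built for the task matters at scale.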

IPUs deliver more floating-point operations per second (FLOPS) – the most relevant measure of computer performance in this context – than conventional CPUs. At the same time, they still suit multiple instruction, multiple data (MIMD) programming models that will be familiar to developers used to working with multiple independent processors.
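For readers unfamiliar with the term, MIMD simply means that independent workers each run their own instructions on their own data. The sketch below illustrates the programming model using Python's standard thread pool. It is not IPU code and does not use Graphcore's software; the three functions and the price series are hypothetical examples.

```python
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def moving_average(prices, window=3):
    """Mean of the last `window` prices."""
    return mean(prices[-window:])


def max_drawdown(prices):
    """Largest peak-to-trough fall, as a fraction of the peak."""
    peak, worst = prices[0], 0.0
    for p in prices:
        peak = max(peak, p)
        worst = max(worst, (peak - p) / peak)
    return worst


def count_up_days(prices):
    """Number of days on which the price rose."""
    return sum(1 for prev, cur in zip(prices, prices[1:]) if cur > prev)


# Multiple instructions (three different functions) on
# multiple data (three different made-up price series).
tasks = [
    (moving_average, [10.0, 11.0, 12.0, 13.0]),
    (max_drawdown, [100.0, 120.0, 90.0, 110.0]),
    (count_up_days, [5.0, 6.0, 5.5, 7.0, 7.5]),
]

with ThreadPoolExecutor(max_workers=len(tasks)) as pool:
    futures = [pool.submit(fn, data) for fn, data in tasks]
    results = [f.result() for f in futures]

print(results)  # [12.0, 0.25, 3]
```

Python threads are used here only to show the shape of the model; on dedicated hardware, each task would run on its own processor core.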

Dedicated hardware is making great gains in being able to “normalize” artificial intelligence in computing by becoming much more closely matched to the unusual processing workloads that AI routines demand. At the same time, graphical interfaces (which display memory use and the time spent on code execution and communication) give developers an overview of how applications are performing, helping them to maximize the utilization of IPUs. The next piece of the puzzle is making those processing advances available to clients, such as financial institutions and other customers, affordably and at scale – which is where the cloud fits in.

Try before you buy

To allow clients to explore the programming interface in more detail and access high-end IPUs from their browsers, Graphcore has teamed up with the cloud-based machine learning platform Paperspace. The platform gives users access to IPU hardware in the cloud through familiar online tools such as Jupyter Notebooks (among other popular choices for analysts), and allows users to investigate a range of AI models out of the box.

Source: Graphcore

In finance, IPUs have helped firms to train their AI models more quickly, allowing companies to be more responsive to insights. The hardware also helps financial institutions to gather information from large data sets such as hundreds of thousands of pages of company reports, which would take human analysts years to digest. AI techniques such as natural language processing can be deployed to detect changes in sentiment over time, for example, to pick out trends that could be missed by the competition.

Backers of Graphcore’s technology include a range of investors, many from the world of finance, such as Baillie Gifford, Fidelity International, M&G Investments and Schroders. The custom AI chip-maker has now raised more than $700 million in total.

To discover how Graphcore’s custom AI chips can benefit your application, visit graphcore.ai.