Artificial Intelligence Model Training Times Halved in Protein Design Research

Scientific research is increasingly using artificial intelligence (AI) to benefit humanity. LabGenius, an antibody research company, uses machine learning to discover advanced treatments for cancer and certain inflammatory diseases. The company has also found that the principles behind these technologies can be applied far more widely across healthcare and the life sciences.

The London-based company combines synthetic biology, laboratory automation, and AI to develop antibody therapies. In the past, LabGenius could wait as long as a month for a working model to finish training on GPU-based systems. Its recent adoption of IPU (Intelligence Processing Unit) systems halved the compute time needed for the training stage of model building.

Researchers at LabGenius used standard PyTorch to implement the BERT transformer model and ran it on a dedicated IPU system from Graphcore.

“With Graphcore, we reduced the turnaround time to about two weeks, so we can experiment much more rapidly and see the results quicker,” said Dr. Katya Putintseva, Principal Data Scientist at LabGenius.

Designing new proteins, or finding existing ones, to treat medical conditions is complicated. However, small molecules designed with machine learning have recently progressed far enough to enter clinical trials, marking a new era in drug design.

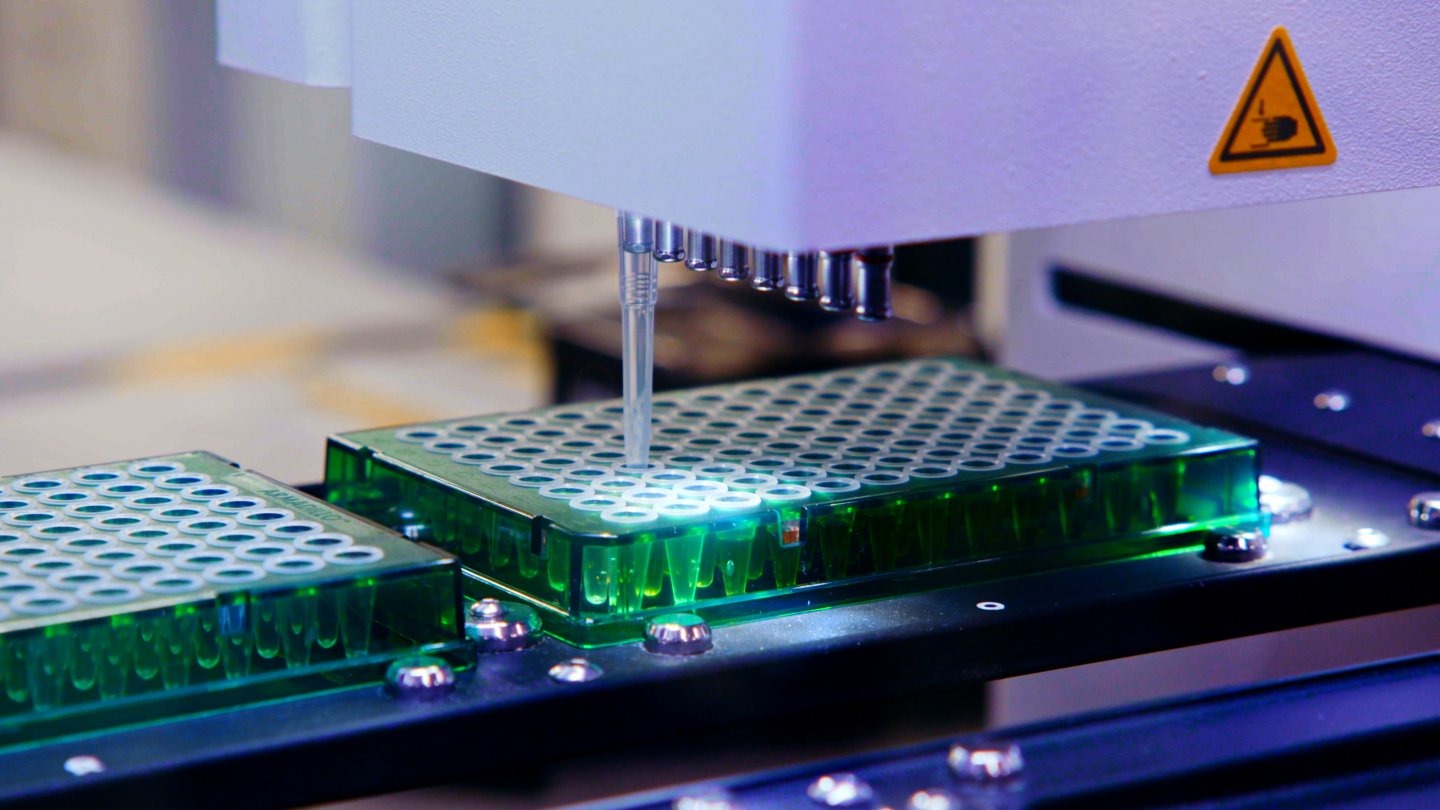

Knowing how to adjust a protein’s constituent amino acids to improve its function is highly challenging, even with the help of conventional computer software. However, the iterative nature of protein design is well suited to AI modelling. To take advantage of this, LabGenius created an automated system that manages experimental iterations and some of the physical processes typically involved in laboratory testing of new proteins.

Its software helps sequence, analyze, modify, and resynthesize proteins, while robots physically move sample trays around a traditional “wet” lab. This is where innovative laboratory experimentation meets cutting-edge data science. Yet computational challenges remain:

“The biggest problem of any biological challenge within the AI space, if you compare it to natural language processing or image recognition, is the scarcity of high-quality data [representing] enough of the features of interest,” said Dr. Putintseva.

“You can find a lot of data out there, but the devil is in the details. How was that dataset generated? What biases does it contain? How far can the signal extracted from it be extrapolated within the sequence space?”

Source: Graphcore

LabGenius applies AI to solve two of the greatest challenges in protein therapy development. The first will be familiar to any machine learning developer: how to optimize many variables in highly complex systems.

“We call [this] co-optimization or multi-objective optimization,” said Tom Ashworth, LabGenius’s Head of Technology.

“You might be trying to optimize potency, which might be about the molecule’s affinity, how sticky it is to its target, but at the same time you don’t want to destroy its safety or perhaps some other characteristic like its stability.”
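Multi-objective optimization is commonly framed as finding the Pareto front: the candidates that no other candidate beats on every objective at once. The sketch below illustrates that idea only. The molecule names, the three scores (potency, safety, stability), and the convention that higher is better are all hypothetical, and the source does not say which optimization method LabGenius actually uses.

```python
from typing import Dict, List, Tuple

# Hypothetical per-molecule scores: (potency, safety, stability).
# Higher is assumed to be better for every objective.
Scores = Tuple[float, float, float]


def dominates(a: Scores, b: Scores) -> bool:
    """True if `a` is at least as good as `b` on every objective
    and strictly better on at least one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(candidates: Dict[str, Scores]) -> List[str]:
    """Return the names of candidates that no other candidate dominates."""
    front = []
    for name, score in candidates.items():
        beaten = any(
            dominates(other, score)
            for other_name, other in candidates.items()
            if other_name != name
        )
        if not beaten:
            front.append(name)
    return front


# Toy, made-up scores for four hypothetical molecules.
example = {
    "mol_A": (0.9, 0.4, 0.7),  # very potent, weaker safety
    "mol_B": (0.6, 0.8, 0.7),  # balanced
    "mol_C": (0.5, 0.7, 0.6),  # worse than mol_B on every objective
    "mol_D": (0.7, 0.8, 0.5),  # more potent than mol_B but less stable
}
print(pareto_front(example))  # → ['mol_A', 'mol_B', 'mol_D']
```

No single molecule on the front is "best"; each represents a different trade-off between potency, safety, and stability, which is the tension Ashworth describes.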

The second challenge is how to iterate on the experiments themselves, and here too machine learning guides LabGenius. Ashworth said:

“[The system] is looking across different features we could change about the molecule — from point mutations of simpler constructs to the overall composition and topology of multi-module proteins. It’s making suggestions about what to design next… to learn about a change in the input and how that maps to a change in the output”.
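To make the size of this design space concrete: even when changes are restricted to single point mutations, a sequence of length L over the 20 standard amino acids has 19 × L possible variants, and a model-guided loop has to choose which of them to make and test next. The sketch below only enumerates that candidate space. It does not model how LabGenius’s system ranks or selects designs, and the toy sequence is hypothetical.

```python
from typing import Iterator, Tuple

# One-letter codes for the 20 standard amino acids.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def point_mutants(seq: str) -> Iterator[Tuple[str, str]]:
    """Yield (label, mutant_sequence) for every single-residue substitution.

    Labels follow the common 'wild-type residue, 1-based position,
    new residue' notation, e.g. 'K2R'.
    """
    for i, wild_type in enumerate(seq):
        for aa in AMINO_ACIDS:
            if aa != wild_type:
                yield f"{wild_type}{i + 1}{aa}", seq[:i] + aa + seq[i + 1:]


toy = "MKTA"  # hypothetical 4-residue toy sequence
mutants = list(point_mutants(toy))
print(len(mutants))  # → 76 (19 substitutions x 4 positions)
print(mutants[0])    # → ('M1A', 'AKTA')
```

The count grows linearly with length for single mutations but combinatorially once multiple simultaneous mutations or module rearrangements are allowed, which is why a model that suggests the next designs is valuable.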

LabGenius uses Graphcore IPUs in the cloud to train BERT, the transformer model best known for its use in natural language processing (NLP).

Researchers take a large set of known protein sequences, mask some of the amino acids, and ask BERT to predict the masked residues, a process that effectively teaches the model the basic biophysics of proteins. The researchers use open-source PyTorch implementations of BERT [GitHub]. Because adapting these requires changing just a few lines of code, researchers can focus their attention on the dataset rather than building routines from scratch.
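The masking step can be sketched in plain Python. This minimal sketch assumes the recipe from the original BERT paper: each position is selected independently with probability 0.15, and of the selected positions 80% are replaced with a mask token, 10% with a random amino acid, and 10% left unchanged. The source does not say which ratios LabGenius uses; the `MASK` token, the function name, and the example sequence are hypothetical. A production pipeline would apply this to tensors inside a PyTorch data loader.

```python
import random
from typing import List, Optional, Tuple

# One-letter codes for the 20 standard amino acids.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
MASK = "<mask>"  # hypothetical mask token


def mask_sequence(
    seq: str, rng: random.Random, mask_prob: float = 0.15
) -> Tuple[List[str], List[Optional[str]]]:
    """Return (model_input, labels) for masked-residue prediction.

    labels[i] holds the original residue at positions the model must
    predict, and None elsewhere (positions excluded from the loss).
    """
    tokens = list(seq)
    labels: List[Optional[str]] = [None] * len(tokens)
    for i, residue in enumerate(seq):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = residue
        roll = rng.random()
        if roll < 0.8:
            tokens[i] = MASK  # 80%: hide the residue
        elif roll < 0.9:
            tokens[i] = rng.choice(AMINO_ACIDS)  # 10%: random residue
        # remaining 10%: keep the original residue unchanged
    return tokens, labels


rng = random.Random(0)
inputs, labels = mask_sequence("MKTAYIAKQRQISFVKSHFSRQ", rng)  # toy sequence
print(inputs)
print(labels)
```

The model is then trained to recover `labels` from `inputs`. Because the answers come from the sequences themselves, no hand-labelled data is needed for this pre-training stage.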

Halving model training time with Graphcore IPUs gives LabGenius a substantial advantage in this competitive industry. According to Tom Ashworth:

“Graphcore has changed what we’re able to do, accelerating our model training time from weeks to days. For our data scientists, that’s […] transformative.”

LabGenius is now looking to extend its use of IPU-trained BERT models in discovery phases to gain deeper insights into the developability of molecules. The biotechnology firm is also working with newer AI models on Graphcore systems, such as graph neural networks (GNNs), a workload where Graphcore IPUs can leverage their architectural advantages over traditional CPU and GPU systems.

Get in touch to learn more about healthcare and biotechnology organizations using Graphcore IPU hardware in the cloud.