Researchers reach for real-time financial predictions with Graphcore silicon

In financial trading, new AI techniques for analyzing market data in the form of limit order books (LOBs) have the potential to better predict stock price fluctuations. However, the design of today’s traditional computer chips makes the real-time use of multi-horizon machine learning methods unviable in practice.

Now, researchers at the Oxford-Man Institute (OMI) have devised algorithms that run on hardware designed from the ground up for AI computation. With their variants of the Attention and Seq2Seq models running on arrays of IPUs (Intelligence Processing Units), the pre-alpha research achieved an 80% predictive success rate in time frames equivalent to around 30 seconds to two minutes of live trading.

Within specific parameters, machine learning algorithms can produce increasingly accurate predictions given enough data. Even the most random-seeming data sets may yield previously unseen patterns, given enough processing power and time.

But where the best predictions are needed within a few seconds or less, more traditional computing hardware is stretched to its limits. Even with rack upon rack of dedicated GPUs (which are prohibitively expensive to run at scale), code that predicts multiple outcomes and improves its accuracy over time is best deployed in environments where time is less of a precious commodity.

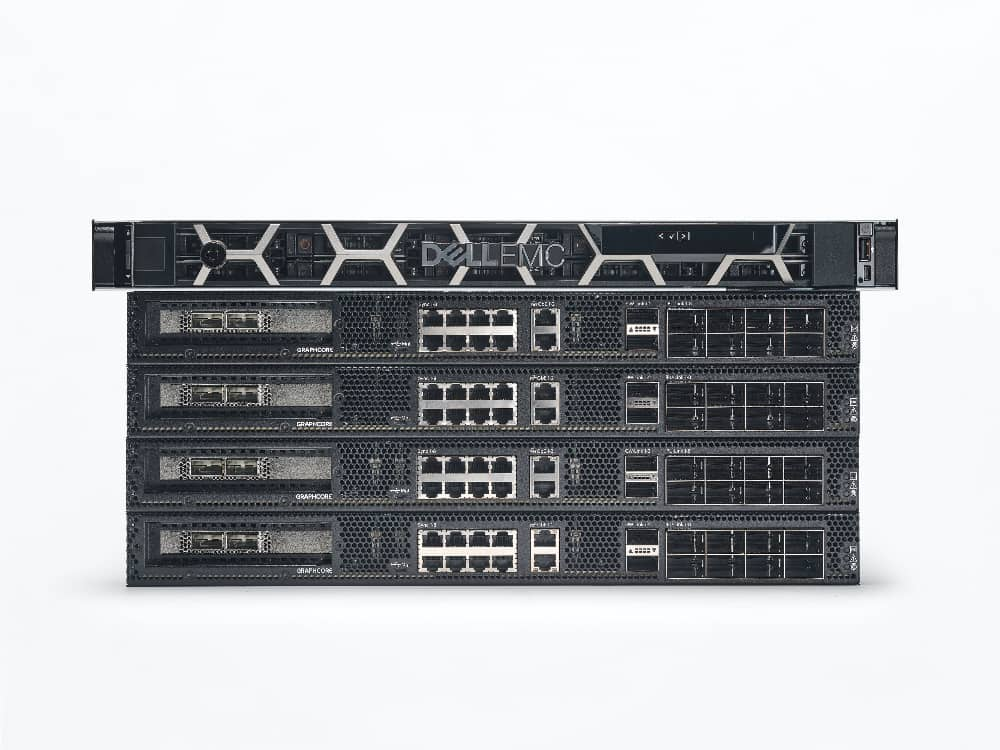

Source: Graphcore

Money markets are already dominated by algorithmic decision engines, with silicon traders executing on their given positions with millisecond accuracy. While the capabilities of machine learning are well-known in the sector, there are significant limits to autonomous trading.

One of the two authors of the OMI paper “Multi-Horizon Forecasting for Limit Order Books: Novel Deep Learning Approaches and Hardware Acceleration using Intelligent Processing Units,” Stefan Zohren, stated, “In […] multi-step forecasting, we […] have a model which is trained to make a forecast at a smaller horizon. But we can feed this information back into itself and roll forward the prediction to arrive at longer-horizon forecasts.”
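The roll-forward idea Zohren describes can be illustrated with a minimal Python sketch. This is not the OMI team's DeepLOB code: `roll_forward`, `one_step_model`, and the toy `mean_of_window` model are hypothetical names chosen for illustration, and the averaging model merely stands in for a trained short-horizon forecaster.

```python
from typing import Callable, List, Sequence


def roll_forward(
    one_step_model: Callable[[List[float]], float],
    history: Sequence[float],
    horizon: int,
    window: int,
) -> List[float]:
    """Iterated multi-step forecast.

    A model trained for a one-step horizon is applied repeatedly, and each
    prediction is appended to the input window for the next step.
    """
    if horizon < 1 or window < 1:
        raise ValueError("horizon and window must be at least 1")
    if len(history) < window:
        raise ValueError("history is shorter than the input window")

    context = list(history[-window:])  # most recent observations
    forecasts: List[float] = []
    for _ in range(horizon):
        next_value = one_step_model(context)
        forecasts.append(next_value)
        # Drop the oldest value and feed the prediction back in.
        context = context[1:] + [next_value]
    return forecasts


def mean_of_window(context: List[float]) -> float:
    """Toy stand-in for a trained model: predicts the window average."""
    return sum(context) / len(context)
```

Calling `roll_forward(mean_of_window, prices, horizon=5, window=10)` on a list of at least ten prices would return five successive forecasts, each computed from a window that includes the earlier forecasts. Because every step consumes earlier predictions, errors can compound as the horizon grows.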

With built-for-AI hardware from Graphcore, the research team could predict with high accuracy when and at what price points to make successful trades over 100 tick periods, with the speed of ticks depending on the frequency of buy/sell events in a live market.
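Because the horizon is counted in ticks rather than seconds, its wall-clock length depends on how busy the market is. A minimal sketch, assuming a constant average event rate (a simplification; the function name and the example rates are hypothetical):

```python
def ticks_to_seconds(horizon_ticks: int, events_per_second: float) -> float:
    """Approximate wall-clock length of a horizon measured in ticks."""
    if events_per_second <= 0:
        raise ValueError("events_per_second must be positive")
    return horizon_ticks / events_per_second


# Hypothetical event rates; real rates vary by instrument and time of day.
for rate in (1.0, 2.0, 4.0):
    seconds = ticks_to_seconds(100, rate)
    print(f"{rate} events/s -> {seconds:.1f} s for 100 ticks")
```

The busier the order book, the shorter the real-time window a fixed 100-tick forecast covers, and the less time the hardware has to produce it.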

Multi-horizon forecasting is particularly relevant in areas where many factors may affect outcomes and where useful signals occur relatively rarely. To refine the eventual best prediction, the researchers’ code fed multi-horizon results back into algorithms inspired by NLP (natural language processing) techniques. In typical compute environments, GPU arrays are given this type of workload, but their single-instruction, multiple-data (SIMD) processor design is not suited to the massively parallel processing required by this type of algorithm.

The research team developed variants of two existing learning models, Seq2Seq and Attention, which they named DeepLOB-Seq2Seq and DeepLOB-Attention. The Graphcore hardware running these models outperformed all other architectures by “a factor of ten,” predicting market movements 100 ticks in the future with around 80% accuracy in timescales viable for live trading.

Multi-horizon algorithms have significant potential in seemingly chaotic contexts, where billions of data points are created quickly, and code is required to predict at speed as conditions change (such as in financial markets). Producing required outcomes quickly enough to process large, rapidly changing data sets is the challenge solved by Graphcore IPU technology.

When organizations need large training runs to complete in minutes and seconds, not weeks and months, Graphcore’s dedicated hardware is ready for data center deployment right now. For example, BERT natural language models train in just over nine minutes, and ResNet-50 typically in 14 to 15 minutes (see the full performance results). Different tiers of Graphcore IPU-PODs are available for different workloads, from the 4-petaFLOP IPU-POD16 for research and exploration all the way up to the 64-petaFLOP IPU-POD256 for data-center-scale production.


While the hardware’s underlying architecture may be very different, it is straightforward for the AI/ML practitioner to use. Graphcore’s Poplar software integrates with standard machine learning frameworks including PyTorch, TensorFlow, PyTorch Lightning, Keras, Hugging Face and ONNX, and also offers lower-level programming options in Python and C++.

Compared to similarly priced GPU arrays, Graphcore systems typically deliver far greater performance-per-dollar, even on legacy AI models that have been developed on and refined for running on GPUs. Running any ML workload on specially designed silicon makes clear sense for any organization investing in advanced AI processing. Whether your motives are rooted in millisecond advantage over competing trading houses, medical applications, or research into advanced learning models, Graphcore’s innovative IPU technology can advance your projects.

The Oxford-Man team’s code is available on GitHub, and Graphcore representatives will be happy to steer channel partner queries or provide information on their Graphcloud service and IPU systems range.