Google’s DeepMind: The complexity of AI in healthcare

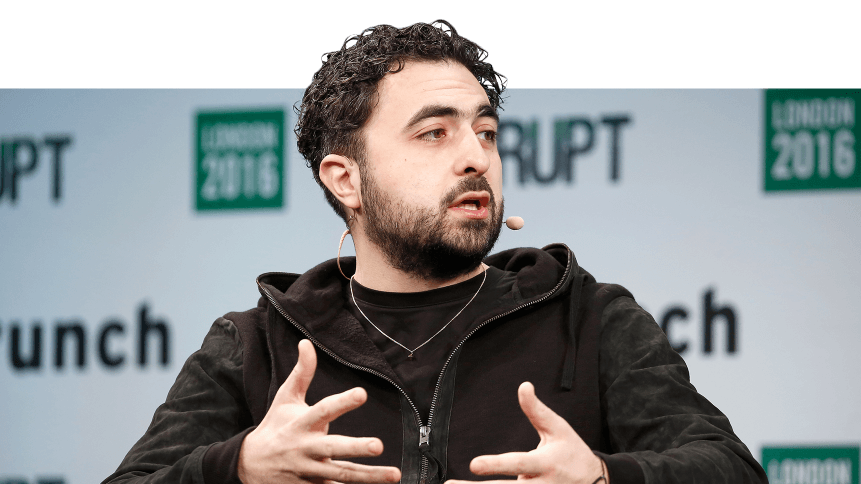

One of the co-founders of DeepMind, Mustafa Suleyman, has been granted leave by the company’s owner, Google, “by mutual consent.”

The situation has been linked to the involvement of Suleyman (nicknamed “Moose”) in the controversial use of AI technology at the Royal Free Hospital in London, UK.

History lesson

DeepMind was a UK company formed in 2010 and was acquired by Google in 2014 for US$486 million.

Suleyman’s personal interest in healthcare (his mother worked as a nurse in the UK’s National Health Service) led the company — now Google DeepMind — to develop an app called Streams, which helped staff at the Royal Free examine patient records and predict kidney failure in patients in a matter of minutes.

There’s been some speculation over the last 24 hours about what I’m up to. After ten hectic years, I’m taking some personal time for a break to recharge and I’m looking forward to being back in the saddle at DeepMind soon.

— Mustafa Suleyman (@mustafasuleymn) August 22, 2019

DeepMind’s work received a great deal of positive press at the time, although the project later became mired in controversy when the UK’s Information Commissioner’s Office criticized the hospital for the way in which it had shared patient data with DeepMind and therefore, by proxy, with Google and Alphabet.

While it’s unhelpful to speculate as to the exact nature of the situation with regard to Suleyman and Google, the story raises interesting issues surrounding data anonymization, security, and privacy. The specifics also highlight some crucial issues with regard to AI technology in the health sector.

Artificial intelligence, data security, and healthcare

In order to learn, and to draw useful conclusions for healthcare purposes, AI algorithms process enormous quantities of data.

The very scope and size of the source information is what makes AI so effective: intelligent routines can process information and draw conclusions much more efficiently than humans. Additionally, in healthcare settings, where data is often un-normalized, AI is also useful in distilling disparate forms of data into standardized formats that are better suited to digital processing.

Naturally, in sensitive settings, anonymization has to be done carefully, because before, during, and after processing, the information relates to specific humans. But what constitutes sensitive data, and what’s essential for the required outcome? Is a description like “24-year-old male from ZIP code ABC presenting with condition 123” anonymous?

In some cases, perhaps not; there’s enough information to locate an individual. But, in the case of prognosis, for example, ZIP code and presentation of specific conditions might be crucial to the accuracy of algorithmic results.
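The question can be made concrete with a small sketch. The Python below is purely illustrative: the records are made up, the function names are hypothetical, and the check it performs (counting how many people share the same combination of age, sex, and ZIP code, an idea usually called k-anonymity) is one common way of reasoning about re-identification risk, not a method attributed to DeepMind or the Royal Free.

```python
from collections import Counter

# Made-up records for illustration only: (age, sex, zip_code, condition)
RECORDS = [
    (24, "M", "90210", "condition 123"),
    (24, "M", "90210", "condition 456"),
    (25, "M", "90211", "condition 123"),
    (61, "F", "90210", "condition 789"),
    (63, "F", "90213", "condition 123"),
]


def quasi_identifier(record, generalize=False):
    """Return the fields that could single a person out, optionally coarsened."""
    age, sex, zip_code, _condition = record
    if generalize:
        decade = age // 10 * 10
        # Replace exact age with a ten-year band and keep only the ZIP prefix.
        return (f"{decade}-{decade + 9}", sex, zip_code[:3] + "**")
    return (age, sex, zip_code)


def smallest_group(records, generalize=False):
    """Size of the smallest group of people sharing a quasi-identifier (the 'k')."""
    counts = Counter(quasi_identifier(r, generalize) for r in records)
    return min(counts.values())


def unique_records(records, generalize=False):
    """Records whose quasi-identifier is shared with nobody else in the data."""
    counts = Counter(quasi_identifier(r, generalize) for r in records)
    return [r for r in records if counts[quasi_identifier(r, generalize)] == 1]


if __name__ == "__main__":
    print("smallest group, raw:", smallest_group(RECORDS))
    print("unique records, raw:", len(unique_records(RECORDS)))
    print("smallest group, generalized:", smallest_group(RECORDS, generalize=True))
```

With the raw fields, three of the five sample records are unique, so each could point to one person. Coarsening age and ZIP code removes the unique records in this toy data, but it also discards exactly the kind of detail that a prognostic algorithm might need.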

And anonymizing data is only one of the strictures placed, quite rightly, on the use of patient data. Health information is highly sensitive and needs handling accordingly. That fact is recognized by the GDPR, which classifies medical information as “special,” and therefore subject to greater control and governance.

In the UK, individual patients have to be consulted specifically before their medical data is released to third parties, and in some cases this process is fairly byzantine.

In the several cases involving the work of DeepMind, each patient could have permitted a named third party (Alphabet, ultimately) to issue a Subject Access Request (SAR), which would be presented to the Royal Free London NHS Foundation Trust. When life-threatening situations occur, that type of bureaucracy isn’t helpful.

DeepMind and Google

Although it’s completely irrelevant which company’s algorithms the hospital shared data with, one wonders whether there would have been such an outcry if the receiving third party had been a small IT outfit working on the cutting edge of technology, as DeepMind was before the Google acquisition.

Also, once subsumed into the Borg Collective of Google, Suleyman became subject to the HR rules and culture of a transglobal data giant. His eventual move sideways into a Google committee looking at AI data’s privacy issues, and away from healthcare applications of AI (which one presumes remain close to his heart), was therefore probably predictable.

Isn’t this *literally* the origin story of every super villain comic book character ever?!? https://t.co/oXMJ74qZUv

— Bryce Roberts (@bryce) August 21, 2019

Health data’s complexities

As any IT professional in the healthcare sector will tell you, patient data is fragmented by nature. That is not through any fault in IT systems or practice, but because a single individual’s records alone may comprise very specialist information sourced from multiple and very different areas: from anaesthesiology to venereology, and X-ray.

That makes data oversight a convoluted task even when information is simply passed from department to department within a single facility’s walls, never mind when collective information from all pertinent sources (any of which may be providing vital prognostic information) has to be parceled up for anonymized supply to a third party.

Business units in healthcare

In recent years in the UK, there has been an increased business focus in the state-run National Health Service, a mindset and resulting methodology that is clearly far more evolved in private healthcare systems.

But whatever the funding model, individual departments, and even specialisms within departments, often work as discrete business units for purposes such as funding and performance assessment.

Thus it is perhaps understandable that a budget-holder might seize the technological opportunities that present themselves to a particular area of healthcare: in the case of Streams, urology.

That often leads to individual departments, or even individual consultants, developing their own tech applications and services that are highly specialized and targeted at achieving a specific clinical outcome. That type of practice makes complete oversight of general data policies almost impossible, as far as facilities as large as modern hospitals are concerned.

The motives of the individuals behind the DeepMind (eventually, Google Health) projects were indubitably positive, and make a refreshing change from humanity’s tendency to develop high-end AI code that drives customer engagement through hyper-individualized digital marketing; that is, AI to help sell more crap to people who don’t need it.

Suleyman’s motives in creating, selling, and progressing DeepMind were, by contrast, almost all positive. Unfortunately, the use of AI in any setting raises ethical issues and casts light on inherent complexities. That’s especially true in the sectors that care for human lives, as “Moose” appears to have found to his personal cost.