Revolutionary data concepts mean real business impact: software abstraction to HCI

Speak to any systems architect at the moment, and you’ll find his or her eyes shine with enthusiasm when virtualization is mentioned. And although here at TechHQ we cut the very technical types a great deal of slack, it’s a subject that’s difficult to explain to anyone who’s more motivated by the business bottom line than the concept of software abstraction.

Virtualization in all its forms is an absorbing technology, and we consider some aspects of it below. But it’s also very exciting for enterprise managers who’ve never stepped inside a data center, purely for the business positives the tech can bring.

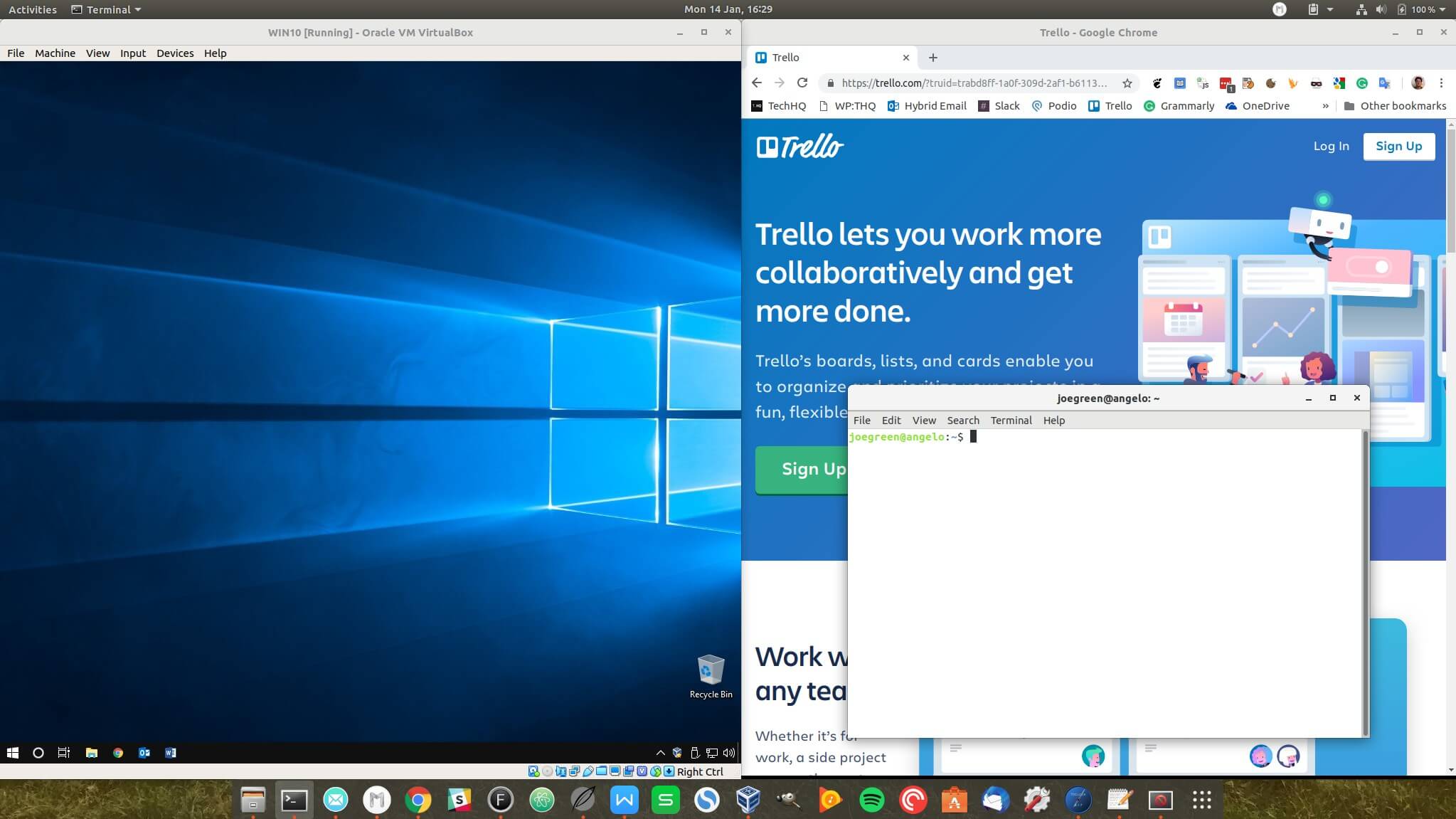

In short, virtualization (or hyperconvergence or software abstraction or software-defined service) is a technology which places a layer between the end-user and the often highly-complex underlying technology that’s providing a service or application. Here’s a picture of an elementary example:

Here the user’s desktop (complete with a browser and command-line terminal on the right) contains a virtualized operating system running on the left. The software layer places a window around the Windows 10 instance and allows the user to interact with the machine as if it were a separate application. Similarly, containers in orchestration environments like Kubernetes present discrete spaces with their own required (and modularized) resources that run independently of the host underneath them.

In the same way, servers today run many virtualized operating systems. This has significantly lowered the cost of hosting services, and also brings advantages like almost instantaneous switching to backup ‘images’, or scaling the number and power of deployed servers up and down according to peaks and troughs in demand.
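For readers who want to see the idea behind demand-based scaling, here is a minimal sketch in Python. It is not any vendor's implementation: the function name `servers_needed`, the capacity figure of 500 requests per second per server, and the demand numbers are all hypothetical, chosen only to illustrate how a server count might follow peaks and troughs.

```python
import math


def servers_needed(requests_per_second: float,
                   capacity_per_server: float,
                   min_servers: int = 1,
                   max_servers: int = 20) -> int:
    """Return how many virtual servers to run for the current demand.

    All parameters are illustrative assumptions, not real product settings.
    """
    if capacity_per_server <= 0:
        raise ValueError("capacity_per_server must be positive")
    # Round up so that demand never exceeds the total capacity deployed.
    wanted = math.ceil(requests_per_second / capacity_per_server)
    # Keep at least a minimum footprint and never exceed the allowed ceiling.
    return max(min_servers, min(max_servers, wanted))


# Hypothetical demand samples across a day, in requests per second.
demand_over_day = [120, 80, 950, 2400, 600]
plan = [servers_needed(d, capacity_per_server=500) for d in demand_over_day]
print(plan)  # [1, 1, 2, 5, 2]
```

The point of the sketch is that the decision is a simple calculation made in software, so it can be repeated every few seconds without anyone touching a physical machine.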

In its latest guises, virtualization has extended to the data center’s infrastructure, not just the servers therein. In hyperconverged data centers, the network hardware (routers, load balancers, switches, firewalls and so forth) is all represented virtually, in what is known as hyperconverged infrastructure (HCI). Not only does this mean that resources can be allocated according to need with just a few clicks of a mouse, but it also means that enterprises can unify several data centers and (for example) their internal servers into a single platform. The actual technical routing of information and protocol handling is done by the abstraction software, rather than by a small army of T-shirted technicians plugging cables from box A to trunking point B.

Here, then, is the reason why both IT professionals and business function managers and directors are all excited by virtualization. The IT professional loves the technology because it makes life more straightforward, and the business professional loves the fact that he or she doesn’t have to worry about a new, business-critical application running out of steam as soon as it goes live. Resources can be switched in and out simply, quickly and autonomously, according to the business need or demand spikes, rather than according to IT infrastructure constraints.

Virtualization doesn’t begin and end with the data center, the single desktop, or the server rack. Software abstraction is creating a new generation of business-centric solutions that make considerable sense, especially in the commercial world that’s now oriented towards the XaaS (anything as a service), pay-on-demand model.

Some enterprises are switching to ‘thin’ clients whose desktops are virtualized from a central, powerful server array: a VDI (virtual desktop infrastructure). Similarly, there’s SD-WAN (software-defined wide area networking), which presents enterprise users with a view onto every digital service, whether its resources are located on servers in the next room or in a data center on the other side of the world.

Here at TechHQ, we’re looking at three companies whose virtualization offerings can make a significant impact on your business’s bottom line, as well as simplifying the provision of IT systems that would otherwise be costly to manage.

The technology behind Nutanix was developed by Google, where it is still in daily use. Google is the company whose name seems to be used interchangeably with the cloud, and sometimes with the internet itself. The Google File System, which allows the simple management of massive, and massively distributed, computing and storage resources, was developed by one of Nutanix’s founding partners.

The company’s credo is to provide technology that makes it simple to deploy scalable, powerful applications for businesses and organizations. Nutanix’s software is largely platform-agnostic, in that it will run on and manage hardware from multiple vendors, across different OSes and virtualization software.

The result is a framework on which it’s simple to develop, deploy and run apps. So when the app needs more speed, more storage, or more memory, the framework seamlessly provides these. It’s simple yet powerful. Conversely, when demand falls away, resources can be (or are, autonomously) redeployed. Nutanix’s platforms provide software abstraction, therefore, over the entire IT infrastructure. Its software runs on either its own hardware as part of a turnkey offering or on the brands of server hardware you’d expect to be on the procurement records of technologically-literate enterprises.

The company has developed a vast customer base from its first shipping date back in 2011, with over 11,000 customers in 145 countries to date, from public sector bodies like national and local government departments to businesses and not-for-profits of every shape and size.


Hewlett Packard Enterprise’s SimpliVity solutions offer cutting-edge hyperconvergence technologies that reduce cost and complexity, and can power many areas of business-critical importance in organizations of any size. In this context, hyperconvergence (a term coined by a technology journalist rather than any of the companies featured here) refers to the software abstraction of the infrastructure found in data centers: servers, network hardware, gateway devices, and security systems.

Purely in terms of IT disaster recovery, hyperconvergence improves recovery point objectives (RPOs) and recovery time objectives (RTOs), reducing backup and recovery times to seconds and vastly improving the ratio of logical data to physical storage. However, it’s HPE SimpliVity’s ability to provide malleable, scalable and powerful IT infrastructure that’s simple to manage that makes its solutions so attractive to business.
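To make the recovery terms concrete, the following Python sketch shows one way the two objectives and the logical-to-physical ratio could be checked. It assumes the usual meanings (RPO as the maximum tolerable window of lost data, RTO as the maximum tolerable downtime); the names `RecoveryResult`, `meets_objectives` and `efficiency_ratio`, and every number used, are hypothetical and are not taken from HPE or any other vendor.

```python
from dataclasses import dataclass


@dataclass
class RecoveryResult:
    """Outcome of one (hypothetical) recovery exercise."""
    data_loss_seconds: float  # age of the newest usable backup at failure time
    downtime_seconds: float   # time taken to bring the service back


def meets_objectives(result: RecoveryResult,
                     rpo_seconds: float,
                     rto_seconds: float) -> bool:
    """True when both the data-loss window and the downtime are within target."""
    return (result.data_loss_seconds <= rpo_seconds
            and result.downtime_seconds <= rto_seconds)


def efficiency_ratio(logical_tb: float, physical_tb: float) -> float:
    """Ratio of data as applications see it to the disk space actually used."""
    if physical_tb <= 0:
        raise ValueError("physical_tb must be positive")
    return logical_tb / physical_tb


# Illustrative figures only: a 60-second RPO and RTO, and 100 TB of
# logical data stored in 25 TB of physical capacity.
drill = RecoveryResult(data_loss_seconds=30, downtime_seconds=45)
print(meets_objectives(drill, rpo_seconds=60, rto_seconds=60))  # True
print(efficiency_ratio(logical_tb=100, physical_tb=25))         # 4.0
```

A higher ratio means the same business data occupies less physical storage, which is where the cost saving comes from.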

Hewlett Packard Enterprise is the world’s largest provider of enterprise data center solutions, with over 80 percent of Fortune 500 manufacturing companies using its data center and infrastructure products. HPE’s aim is best summed up as IT-as-a-service (ITaaS).

When the enterprise adopts an ITaaS model, IT resources can be quickly provisioned for any workload while the IT team maintains the management and control needed across the entire infrastructure, irrespective of platform. This capability allows the business to dictate change as it requires, and lets IT respond as a strategic player.

The Indianapolis-based Scale Computing offers true turnkey virtualization solutions via its HC3 platform range. Rather than providing software that runs on other companies’ hardware, Scale ships HC3 as a complete unit, which saves its users time and money, the latter especially in virtualization licensing costs. Anyone who’s borne the brunt of a VMware licensing invoice may therefore find Scale’s offering immediately attractive. The hypervisor comes baked into HC3, and updates to it, or to any other aspect of the offering, result in significantly less downtime than in non-proprietary hyperconvergence solutions. Plug and play, therefore, for the next-gen data center.

HC3 is particularly suited to the expanding business wishing to deploy powerful solutions at the edge, but retain the type of simple, overarching IT management structure (with low TCO) that this type of technology can bring.

With compute ranging from six to 60+ cores, terabytes of HDD or SSD storage and fully built-in backup, recovery, and security, this is hyperconvergence in a rack-mounted unit (or multiples of units – as the enterprise expands).

The complexity of multi-vendor abstracted systems is an unpleasant reality, according to Scale, and the company’s low cost of entry to the world of massive abstraction will be very attractive to many developing companies wishing to dip their toes into the hyperconvergence waters.

*Some of the companies featured in this article are commercial partners of TechHQ.